Title: OpenScholar: Synthesizing Scientific Literature with Retrieval-augmented LMs

Authors: Akari Asai, Jacqueline He, Rulin Shao, Weijia Shi, Amanpreet Singh, Joseph Chee Chang, Kyle Lo, Luca Soldaini, Sergey Feldman, Mike D’arcy, David Wadden, Matt Latzke, Minyang Tian, Pan Ji, Shengyan Liu, Hao Tong, Bohao Wu, Yanyu Xiong, Luke Zettlemoyer, Graham Neubig, Dan Weld, Doug Downey, Wen-tau Yih, Pang Wei Koh, Hannaneh Hajishirzi

Published: 21st November 2024 (Thursday) @ 15:07:42

Link: http://arxiv.org/abs/2411.14199v1

Abstract

Scientific progress depends on researchers’ ability to synthesize the growing body of literature. Can large language models (LMs) assist scientists in this task? We introduce OpenScholar, a specialized retrieval-augmented LM that answers scientific queries by identifying relevant passages from 45 million open-access papers and synthesizing citation-backed responses. To evaluate OpenScholar, we develop ScholarQABench, the first large-scale multi-domain benchmark for literature search, comprising 2,967 expert-written queries and 208 long-form answers across computer science, physics, neuroscience, and biomedicine. On ScholarQABench, OpenScholar-8B outperforms GPT-4o by 5% and PaperQA2 by 7% in correctness, despite being a smaller, open model. While GPT4o hallucinates citations 78 to 90% of the time, OpenScholar achieves citation accuracy on par with human experts. OpenScholar’s datastore, retriever, and self-feedback inference loop also improves off-the-shelf LMs: for instance, OpenScholar-GPT4o improves GPT-4o’s correctness by 12%. In human evaluations, experts preferred OpenScholar-8B and OpenScholar-GPT4o responses over expert-written ones 51% and 70% of the time, respectively, compared to GPT4o’s 32%. We open-source all of our code, models, datastore, data and a public demo.

- Demo: https://openscholar.allen.ai/

- Paper: https://openscholar.allen.ai/paper

- OpenScholar code: https://github.com/AkariAsai/OpenScholar

- ScholarQABench code: https://github.com/AkariAsai/ScholarQABench

- Expert evaluation code: https://github.com/AkariAsai/OpenScholar_ExpertEval

- Model checkpoints, index, data: https://huggingface.co/collections/OpenScholar/openscholar-v1-67376a89f6a80f448da411a6

OpenScholar-8B

OpenScholar-8B (OS-8B) system comprises the following components:

- OpenScholar Datastore: A collection of more than 45M papers from Semantic Scholar and ~250M corresponding passage embeddings. The underlying data comes from an updated version of peS2o (Soldaini et al., 2024, i.e. the Dolma paper), which consists of papers up to October 2024.

- Specialized Retrievers and Rerankers: These tools are trained specifically to identify relevant passages from our scientific literature datastore.

- Specialized 8B Language Model: An 8B-parameter LM optimized for scientific literature synthesis tasks, balancing performance with computational efficiency. To train this, we fine-tune Llama 3.1 8B on synthetic data generated from our iterative self-feedback generation pipeline, described below.

- Iterative Self-Feedback Generation: At inference, we use iterative self-feedback to refine model outputs through natural language feedback. Each iteration involves additionally retrieving more papers, allowing us to improve quality and close citation gaps.
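The self-feedback loop described in the last bullet can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: `retrieve`, `generate`, `give_feedback`, and `refine` are hypothetical callables standing in for the retriever and the LM calls, and the cap of three feedback items is an assumed parameter.

```python
from typing import Callable, List


def self_feedback_generate(
    query: str,
    retrieve: Callable[[str], List[str]],
    generate: Callable[[str, List[str]], str],
    give_feedback: Callable[[str, str], List[str]],
    refine: Callable[[str, str, str, List[str]], str],
    max_feedback: int = 3,  # assumed cap, not taken from the notes
) -> str:
    """Draft an answer, then refine it once per natural-language feedback item."""
    passages = retrieve(query)
    answer = generate(query, passages)
    for feedback in give_feedback(query, answer)[:max_feedback]:
        # Each iteration retrieves additional passages, keyed on the feedback text.
        extra = retrieve(feedback)
        passages = passages + [p for p in extra if p not in passages]
        answer = refine(query, answer, feedback, passages)
    return answer


# Toy stand-ins so the sketch runs without any model or index.
corpus = {"q": ["p1", "p2"], "add evidence": ["p2", "p3"]}
answer = self_feedback_generate(
    "q",
    retrieve=lambda text: corpus.get(text, []),
    generate=lambda q, ps: f"draft[{len(ps)}]",
    give_feedback=lambda q, a: ["add evidence"],
    refine=lambda q, a, fb, ps: f"refined[{len(ps)}]",
)
```

In the toy run the second retrieval adds one new passage, so the refined answer sees three passages in total.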

ScholarQABench

ScholarQABench consists of seven datasets—three existing datasets that focus on single-paper evaluations, and four newly collected datasets of questions that require synthesis over multiple papers:

- ScholarQA-Bio: 1,451 biomedical research questions sourced from experts with PhDs in relevant areas.

- ScholarQA-Neuro: 1,308 neuroscience research questions sourced from experts with PhDs in relevant areas.

- ScholarQA-CS: 100 computer science research questions, each with a set of rubric criteria that answers should meet, and sourced from experts with PhDs in computer science.

- ScholarQA-Multi: 108 questions and answers with citations, sourced from experts in Computer Science, Physics, and Biomedicine. Each expert spent about 1 hour on average writing comprehensive answers to each question.

Evaluating long-form answers in expert domains is challenging. For ScholarQABench, we developed automated metrics to evaluate Correctness, Citation F1 (how many statements are sufficiently and precisely supported by their citations?), Coverage, Relevance, and Organization. To assess the quality of answers, we compare model output to the expert-written rubrics in ScholarQA-CS and the expert-written responses in ScholarQA-Multi.
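As a rough illustration of how a Citation F1 number could be assembled, the sketch below combines a citation recall and a citation precision with the usual harmonic mean. The definitions used here are assumptions, not taken from these notes: recall is taken as the share of statements fully supported by their citations, and precision as the share of citations that actually support the statement they are attached to. The function and parameter names are hypothetical.

```python
def citation_f1(
    supported_statements: int,
    total_statements: int,
    supporting_citations: int,
    total_citations: int,
) -> float:
    """Harmonic mean of citation recall and citation precision (assumed definitions)."""
    # Recall: how many statements are backed by their citations.
    recall = supported_statements / total_statements if total_statements else 0.0
    # Precision: how many citations actually support their statement.
    precision = supporting_citations / total_citations if total_citations else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Example: 3 of 4 statements supported, 3 of 6 citations relevant.
example_f1 = citation_f1(3, 4, 3, 6)
```

Under these assumptions a model that cites nothing, or cites only fabricated papers, gets zero recall and zero precision, which is consistent with the near-zero citation F1 reported for non-retrieval baselines.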

Performance

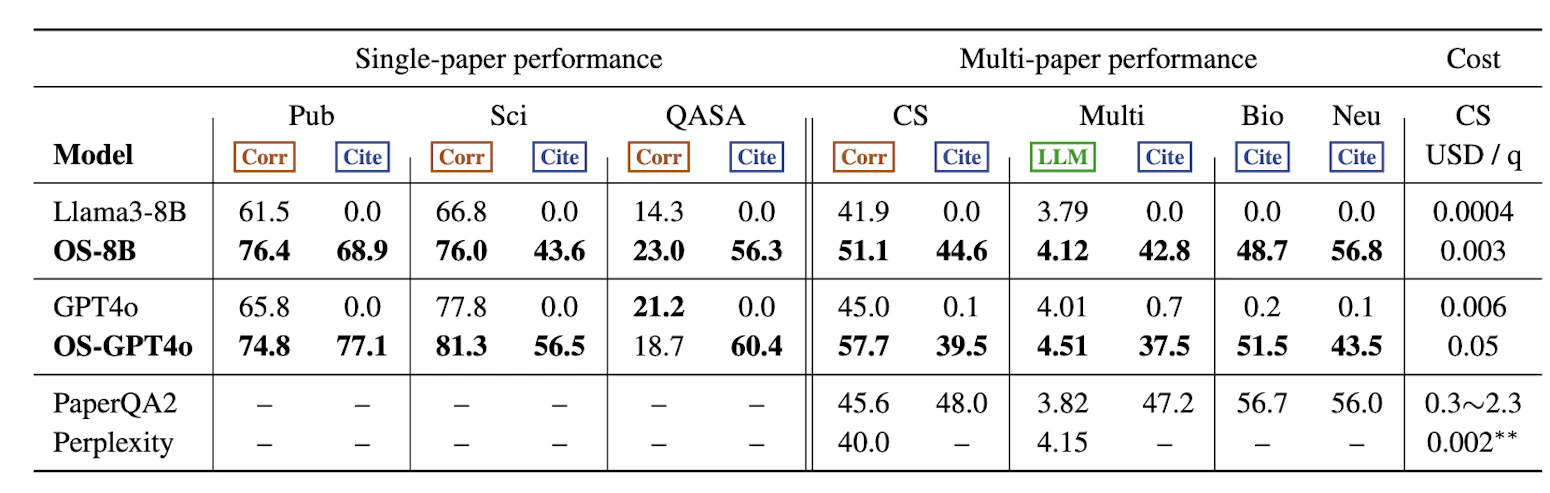

Table 1: Performance of models on ScholarQABench. Corr indicates Correctness, Cite indicates Citation F1, and LLM indicates the average scores of Coverage, Relevance and Organization rated on a 1–5 scale by Prometheus v2. We compute the cost based on API pricing at https://openai.com/api/pricing/ for GPT4o based models (PaperQA2, GPT4o baselines) and https://www.together.ai/pricing for Llama 3.1 based models to simulate the cost of inference for open models. Note that we ran our evaluations of Llama 3.1 8B and OS-8B locally.

- Overall generation quality of OpenScholar-8B: OS-8B outperforms much larger proprietary or open models such as GPT4o by 6.1% as well as models more specialized for scientific literature and/or retrieval, such as PaperQA2 (Skarlinski et al., 2024), which uses GPT4o throughout its pipeline, by 5.5%.

- Citation generation: When answering open-ended research questions, we found that GPT-4o and other models generated inaccurate or nonexistent citations in 80–95% of cases, with near-zero citation F1. In contrast, OS-8B significantly improved citation F1.

- Applying the OpenScholar pipeline to GPT4o: We applied our OpenScholar datastore, retriever and reranker, and self-feedback generation pipeline on top of GPT-4o. Compared to the base GPT-4o model on ScholarQA-CS, this improves correctness by 12% and dramatically improves citation F1 from 0.1 to 39.5.

- Cost efficiency: OS-8B is 100x more cost-efficient than concurrent systems such as PaperQA2, which relies on GPT4o for reranking, summarization, and answer generation, by employing smaller yet competitive retrievers and generator LMs.

❗️ The citation F1 scores are insane. GPT-4o is not doing RAG over scientific literature, so the comparison is unfair, but approx. 40% citation F1 seems like a good baseline for these new model types.

Limitations of parametric LMs. On both single-paper and multi-paper tasks, non-retrieval-augmented baselines struggle, and retrieval is almost always conducive to better performance. Models without any retrieval often fail to generate correct citations and show limited coverage on multi-paper tasks. As shown in Table 3, the proportion of cited papers that actually exist is strikingly low. In particular, while models such as GPT-4o and Llama can generate plausible reference lists, we find that 78-98% of the cited papers are fabricated, and the issue is exacerbated in biomedical domains. Even when citations refer to real papers, the majority are not substantiated by the corresponding abstracts, resulting in near-zero citation accuracy.

Expert evaluations

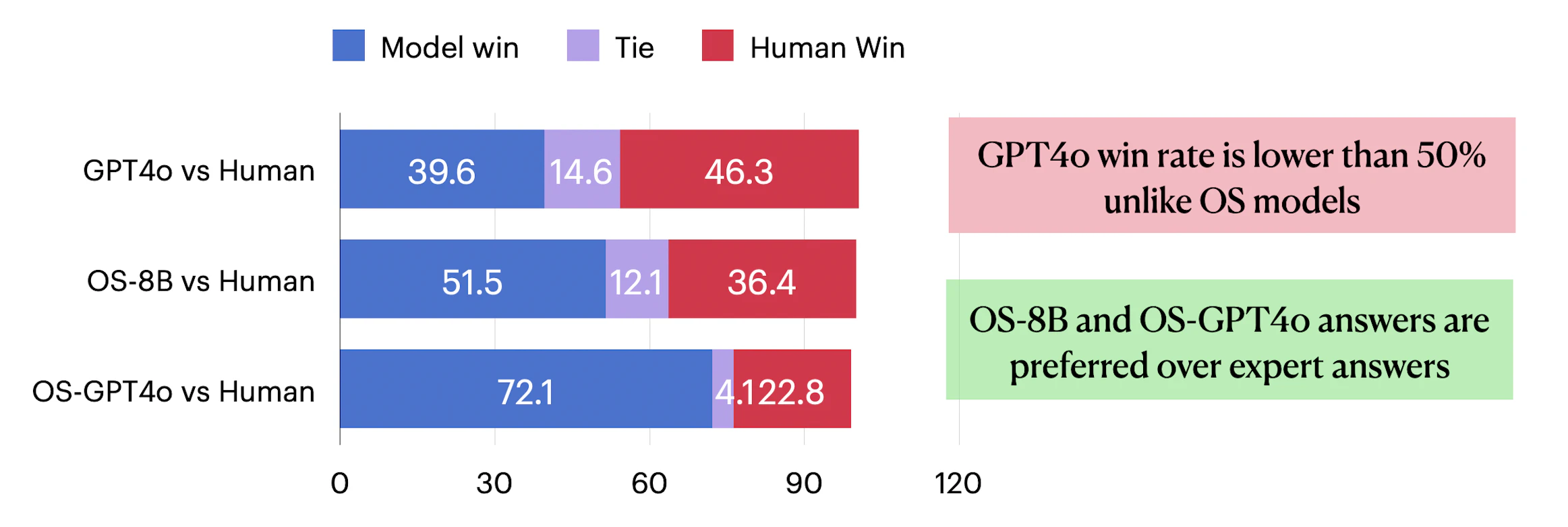

We recruited 20 experts from diverse fields, including computer science, physics, and biomedicine, to evaluate OpenScholar’s responses on the ScholarQA-Multi dataset. These experts compared OpenScholar’s outputs against corresponding human-written responses, each of which required approximately one hour for a scientist (who either has or is working toward a Ph.D.) to compose. Together, the experts completed over 500 fine-grained evaluations and pairwise comparisons. We found that:

- Experts preferred OpenScholar responses: Participants preferred OpenScholar’s outputs 70% of the time, noting that they were more comprehensive, well-organized, and useful for literature synthesis.

- Other models were limited: Models without retrieval capabilities, such as GPT-4o, struggled with information coverage and were judged to be less helpful than human experts.

Retrieval and Re-ranking Pipeline

Retrieval

Retrieve initial paragraphs. We retrieve passages from three sources:

- the peS2o datastore using our trained retriever,

  - we first generate embeddings of each passage in the datastore D offline using the passage bi-encoder θ_bi, which encodes text chunks (e.g., queries or passages) into dense vectors (Karpukhin et al., 2020)

- publicly available abstracts from papers returned via the Semantic Scholar API (Kinney et al., 2023) based on search keywords, and

  - we first generate keywords from the query x using a generator LM. These keywords are then used to retrieve the top 10 papers for each keyword, as ranked by citation count, via the Semantic Scholar Search API. This approach addresses a limitation of the Semantic Scholar API, which cannot effectively handle long, question-like search queries

- publicly available texts from papers retrieved through a web search engine using the original query x.

  - we obtain the top 10 search results using the You.com retrieval API, restricting the search to academic platforms such as ArXiv and PubMed

Off-the-shelf retrieval models often struggle in out-of-domain scenarios (Thakur et al., 2021). To overcome this limitation, we develop θ_bi by continually pre-training Contriever (Izacard et al., 2022 👉 Unsupervised Dense Information Retrieval with Contrastive Learning) on the peS2o datastore in an unsupervised fashion to improve domain-specific retrieval performance (see Appendix C.1 for details).

- During inference, we encode the query using θ_bi and retrieve the top 100 passages through a nearest neighbor search (Karpukhin et al., 2020).

- If the papers are open-access, we extract and add their full texts to the candidate pool; otherwise, we include only their abstracts.
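The dense retrieval step (encode the query, then take the nearest passages) can be illustrated with a brute-force search over precomputed embeddings. This is a sketch under assumptions: inner-product similarity is assumed as the scoring function, the vectors are toy values, and a real system over ~250M embeddings would use an approximate nearest neighbor index rather than a linear scan. The function names are hypothetical.

```python
import heapq
from typing import List, Sequence, Tuple


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def top_k_passages(
    query_vec: Sequence[float],
    passage_vecs: Sequence[Sequence[float]],
    k: int = 100,
) -> List[Tuple[int, float]]:
    """Return (passage_index, score) pairs for the k highest-scoring passages."""
    scored = ((dot(query_vec, vec), idx) for idx, vec in enumerate(passage_vecs))
    best = heapq.nlargest(k, scored)  # sorted from highest to lowest score
    return [(idx, score) for score, idx in best]


# Toy embeddings: passages are encoded offline, the query at inference time.
passage_vecs = [[1.0, 0.0], [0.0, 1.0], [0.6, 0.8]]
query_vec = [1.0, 0.0]
hits = top_k_passages(query_vec, passage_vecs, k=2)
```

The notes use k = 100 for the peS2o datastore; the toy call uses k = 2 only to keep the example readable.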

Re-ranking

- Rerank and finalize top N paragraphs. After the initial stage, we have gathered over 100, or even a thousand, relevant passages per query. However, passages retrieved by the bi-encoder may include unhelpful context because the query and passages are encoded separately, without deep interactions between them (Asai et al., 2023).

- Feeding a large number of documents that might include irrelevant content to LLMs can cause efficiency and performance issues, even with state-of-the-art models (Liu et al., 2024; Xu et al., 2023a).

- To overcome these challenges, we use a cross-encoder reranker (Nogueira & Cho, 2019; Xiao et al., 2023), denoted as θ_cross.

- For each candidate paragraph, the cross-encoder reranker jointly encodes and computes the relevance score between the input query and each of the passages.

- We then use the relevance score to rank the passages accordingly.

- To train θ_cross for scientific domains, we fine-tune a BGE-reranker (Xiao et al., 2023) using synthetic data generated by Llama 3 70B Instruct.

- Specifically, we randomly generate queries based on abstracts from peS2o and retrieve the top 10 passages. Llama 3 70B Instruct then assigns relevance scores from 1 to 5 for these passages, where we consider scores of 4 or 5 as positive, and scores of 1 or 2 as negative. Passages with a score of 3 are discarded. More details of θ_cross training are in Appendix C.2.
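The score-to-label rule for the synthetic reranker training data can be written down directly. The sketch below only covers the thresholding (4 or 5 positive, 1 or 2 negative, 3 discarded); the function names and the tuple layout of the training examples are hypothetical, and the LM scoring step itself is assumed to have happened already.

```python
from typing import List, Optional, Tuple


def label_from_score(score: int) -> Optional[int]:
    """Map a 1-5 relevance score to a binary label, or None if discarded."""
    if score >= 4:
        return 1  # scores of 4 or 5 count as positive
    if score <= 2:
        return 0  # scores of 1 or 2 count as negative
    return None  # a score of 3 is ambiguous and dropped


def build_reranker_examples(
    query: str, scored_passages: List[Tuple[str, int]]
) -> List[Tuple[str, str, int]]:
    """Turn (passage, score) pairs into (query, passage, label) training triples."""
    examples = []
    for passage, score in scored_passages:
        label = label_from_score(score)
        if label is not None:
            examples.append((query, passage, label))
    return examples


# Toy input: four retrieved passages with LM-assigned scores.
scored = [("p_a", 5), ("p_b", 3), ("p_c", 1), ("p_d", 4)]
examples = build_reranker_examples("q", scored)
```

Dropping the middle score keeps only clear positives and clear negatives for fine-tuning.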

During reranking and finalization of top N passages, we also implement additional meta-filtering, which includes:

- limiting the number of passages per paper to three passages, and

- incorporating normalized citation counts into relevance scores predicted by the cross-encoder
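The two meta-filtering steps can be sketched as below. The notes do not say how citation counts are normalized or combined with the relevance score, so this sketch assumes max-normalization and a simple weighted addition; `citation_weight`, the `Passage` fields, and the function name are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import List


@dataclass
class Passage:
    paper_id: str
    text: str
    relevance: float  # score from the cross-encoder reranker
    citations: int  # citation count of the source paper


def finalize_top_n(
    passages: List[Passage],
    n: int,
    max_per_paper: int = 3,
    citation_weight: float = 0.1,  # assumed weight, not from the notes
) -> List[Passage]:
    """Rank by relevance plus normalized citations, keeping at most 3 passages per paper."""
    max_cites = max((p.citations for p in passages), default=0)

    def combined(p: Passage) -> float:
        norm = p.citations / max_cites if max_cites else 0.0
        return p.relevance + citation_weight * norm

    kept: List[Passage] = []
    per_paper = defaultdict(int)
    for p in sorted(passages, key=combined, reverse=True):
        if len(kept) >= n:
            break
        if per_paper[p.paper_id] < max_per_paper:
            kept.append(p)
            per_paper[p.paper_id] += 1
    return kept


# Toy input: four passages from paper A, one from paper B.
candidates = [Passage("A", f"a{i}", 0.9 - 0.1 * i, 10) for i in range(4)]
candidates.append(Passage("B", "b0", 0.5, 10))
final = finalize_top_n(candidates, n=5)
```

In the toy run the fourth passage from paper A is skipped because of the per-paper cap, so the lower-scored passage from paper B still makes it into the final set.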

Metrics

- Citation metric(s) // Citation F1

- makes a lot of sense - love this

- main performance metric I find interesting from the accuracy/factuality perspective

- Content quality and organisation - lots of sub-criteria

What is the Correctness metric

Correctness (Corr). Correctness evaluates the degree of overlap or matching of a model-generated answer and human-annotated reference.

- This metric is only applied to tasks with human-annotated reference answers.

- For short-form generation tasks given a fixed set of answer classes, namely SciFact and PubMedQA, we use accuracy as the correctness metric.

- question what is “accuracy”?

- For QASA, we use ROUGE-L as an evaluation metric, following Lee et al. (2023)

- For ScholarQA-CS, we develop a new long-form evaluation pipeline, which employs expert-annotated rubrics. Each rubric has two criteria:

  - general (accounting for 40% of the score): covers the evaluation of length, expertise, citations, and excerpts

  - annotation-driven (60%): assesses the presence of specific key ingredients identified by annotators

- GPT4o-turbo assigns scores for each criterion, and a weighted sum is computed to obtain a final score. More details are in Appendix B.3.1

- question how?
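One plausible reading of the 40/60 weighted sum is sketched below. The details are assumptions, since the notes defer to Appendix B.3.1: each criterion is assumed to get a score in [0, 1] from the judge model, and scores are averaged within each group before weighting. The function and parameter names are hypothetical.

```python
from typing import Sequence


def rubric_score(
    general_scores: Sequence[float],
    annotation_scores: Sequence[float],
    general_weight: float = 0.4,
    annotation_weight: float = 0.6,
) -> float:
    """Weighted sum of the mean general score and the mean annotation-driven score."""

    def mean(xs: Sequence[float]) -> float:
        return sum(xs) / len(xs) if xs else 0.0

    return general_weight * mean(general_scores) + annotation_weight * mean(annotation_scores)


# Toy input: two general criteria and two annotator-specified ingredients.
score = rubric_score([1.0, 0.5], [1.0, 0.0])
```

With these toy values the general group averages 0.75 and the annotation-driven group 0.5, so the answer-specific ingredients dominate the final score.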