Title: Unsupervised Learning of Visual Features by Contrasting Cluster Assignments

Authors: Mathilde Caron, Ishan Misra, Julien Mairal, Priya Goyal, Piotr Bojanowski, Armand Joulin

Published: 17th June 2020 (Wednesday) @ 14:00:42

Link: http://arxiv.org/abs/2006.09882v5

Abstract

Unsupervised image representations have significantly reduced the gap with supervised pretraining, notably with the recent achievements of contrastive learning methods. These contrastive methods typically work online and rely on a large number of explicit pairwise feature comparisons, which is computationally challenging. In this paper, we propose an online algorithm, SwAV, that takes advantage of contrastive methods without requiring to compute pairwise comparisons. Specifically, our method simultaneously clusters the data while enforcing consistency between cluster assignments produced for different augmentations (or views) of the same image, instead of comparing features directly as in contrastive learning. Simply put, we use a swapped prediction mechanism where we predict the cluster assignment of a view from the representation of another view. Our method can be trained with large and small batches and can scale to unlimited amounts of data. Compared to previous contrastive methods, our method is more memory efficient since it does not require a large memory bank or a special momentum network. In addition, we also propose a new data augmentation strategy, multi-crop, that uses a mix of views with different resolutions in place of two full-resolution views, without increasing the memory or compute requirements much. We validate our findings by achieving 75.3% top-1 accuracy on ImageNet with ResNet-50, as well as surpassing supervised pretraining on all the considered transfer tasks.

Notes on SwAV: Unsupervised Learning of Visual Features by Contrasting Cluster Assignments by Mathilde Caron and colleagues.

- Paper (arXiv): https://arxiv.org/pdf/2006.09882.pdf

- FAIR Blog Post: High-performance self-supervised image classification with contrastive clustering, published on July 20, 2020

- Code: https://github.com/facebookresearch/swav

Related: See Caron et al.’s previous work DeepCluster and Asano et al. (2019) Self-labelling via simultaneous clustering and representation learning (reference [2]).

See also Momentum Contrast for Unsupervised Visual Representation Learning and Self-Supervised Learning of Pretext-Invariant Representations.

Summary of Contributions

Core Explanations from the FAIR Post

Headlines and Significance

We’ve developed a new technique for self-supervised training of convolutional networks commonly used for image classification and other computer vision tasks. Our method now surpasses supervised approaches on most transfer tasks, and, when compared with previous self-supervised methods, models can be trained much more quickly to achieve high performance. For instance, our technique requires only 6 hours and 15 minutes to achieve 72.1 percent top-1 accuracy with a standard ResNet-50 on ImageNet, using 64 V100 16GB GPUs. Previous self-supervised methods required at least 6x more computing power and still achieved worse performance.

Method

In this work, we propose an alternative that does not require an explicit comparison between every image pair. We first compute features of cropped sections of two images and assign each of them to a cluster of images. These assignments are done independently and may not match; for example, the black-and-white image version of the cat image could be a match with an image cluster that contains some cat images, while its color version could be a match with a cluster that contains different cat images. We constrain the two cluster assignments to match over time, so the system eventually will discover that all the images of cats represent the same information. This is done by contrasting the cluster assignments, i.e., predicting the cluster of one version of the image with the other version.

In addition, we introduce a multicrop data augmentation for self-supervised learning that allows us to greatly increase the number of image comparisons made during training without having much of an impact on the memory or compute requirements. We simply replace the two full-size images by a mix of crops with different resolutions. We find that this simple transformation works across many self-supervised methods.

Related Work

The Related Work section gives a very important summary of contrastive learning for self-supervised learning via the instance discrimination task, and of the methods that essentially build on it.

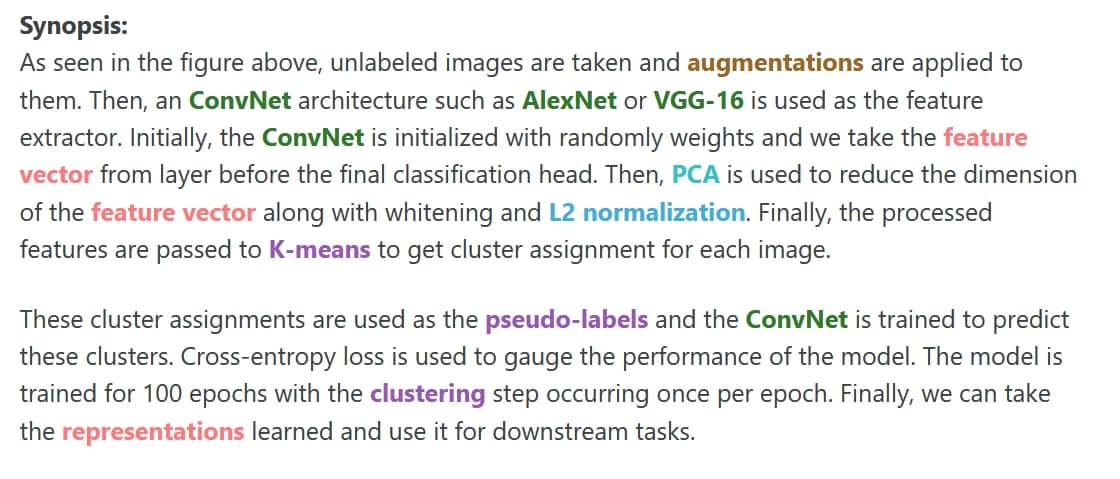

DeepCluster Paper Explained on Amit Chaudhary’s amazing blog https://amitness.com/2020/04/deepcluster/

Method

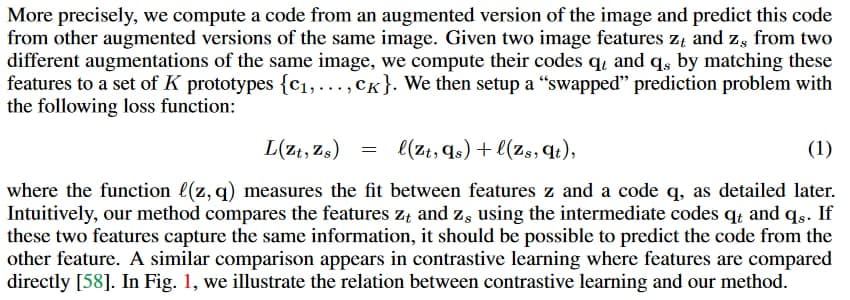

In the SwAV paper, Caron et al. say:

Caron et al. [7] show that k-means assignments can be used as pseudo-labels to learn visual representations. This method scales to large uncurated dataset and can be used for pre-training of supervised network.

They mention that the pseudo-label assignment problem can be cast as an instance of the optimal transport problem, a result they attribute to Asano et al. [2] (I understand this as a Wasserstein-distance-style minimisation criterion for assigning samples to clusters).

[...] unlike Caron et al. [7, 8] and Asano et al. [2], we obtain online assignments which allows our method to scale gracefully to any dataset size.

…but SwAV computes the cluster assignments of image views online, batch by batch. This is what allows it to scale: you don’t have to pass the whole dataset through the clustering algorithm to get the cluster “codes” that are used as pseudo-labels.

SwAV also uses a very simple augmentation strategy, multi-crop:

In contrast, our multi-crop strategy consists in simply sampling multiple random crops with two different sizes: a standard size and a smaller one.
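To make the two-size sampling concrete, here is a minimal NumPy sketch of multi-crop. The view counts (2 large, 6 small), output resolutions (224 and 96) and area-scale ranges are assumptions based on my reading of the paper and repository defaults, and the helper names (`sample_crop_box`, `resize_nearest`, `multi_crop`) are hypothetical. The real pipeline also applies flips, colour distortion and blur, which are omitted here.

```python
import numpy as np

def sample_crop_box(rng, height, width, scale_range):
    """Sample a square crop box covering a random fraction of the image area."""
    area_fraction = rng.uniform(*scale_range)
    side = int(round(np.sqrt(area_fraction * height * width)))
    side = max(1, min(side, height, width))  # keep the box inside the image
    top = int(rng.integers(0, height - side + 1))
    left = int(rng.integers(0, width - side + 1))
    return top, left, side

def resize_nearest(image, out_size):
    """Nearest-neighbour resize of an (H, W, C) array to (out_size, out_size, C)."""
    h, w = image.shape[:2]
    rows = np.arange(out_size) * h // out_size
    cols = np.arange(out_size) * w // out_size
    return image[rows][:, cols]

def multi_crop(image, rng, n_large=2, n_small=6, large_size=224, small_size=96,
               large_scale=(0.14, 1.0), small_scale=(0.05, 0.14)):
    """Return n_large standard-resolution views followed by n_small low-resolution views."""
    views = []
    for n, size, scale in [(n_large, large_size, large_scale),
                           (n_small, small_size, small_scale)]:
        for _ in range(n):
            top, left, side = sample_crop_box(rng, *image.shape[:2], scale)
            crop = image[top:top + side, left:left + side]
            views.append(resize_nearest(crop, size))
    return views

rng = np.random.default_rng(0)
image = rng.random((300, 400, 3))  # stand-in for a real RGB image
views = multi_crop(image, rng)
```

The small views cover only a small part of the image and are resized to a low resolution, which is why adding them is cheap.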

Previous work is slow because it relies on alternating between a cluster assignment and training:

Typical clustering-based methods [2, 7] are offline in the sense that they alternate between a cluster assignment step where image features of the entire dataset are clustered, and a training step where the cluster assignments, i.e., “codes” are predicted for different image views.

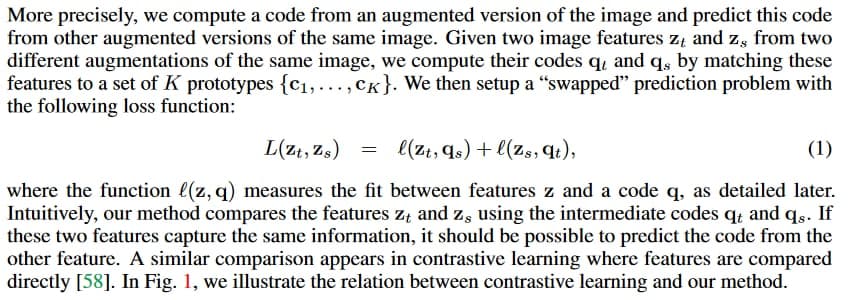

The crux of the SwAV method:

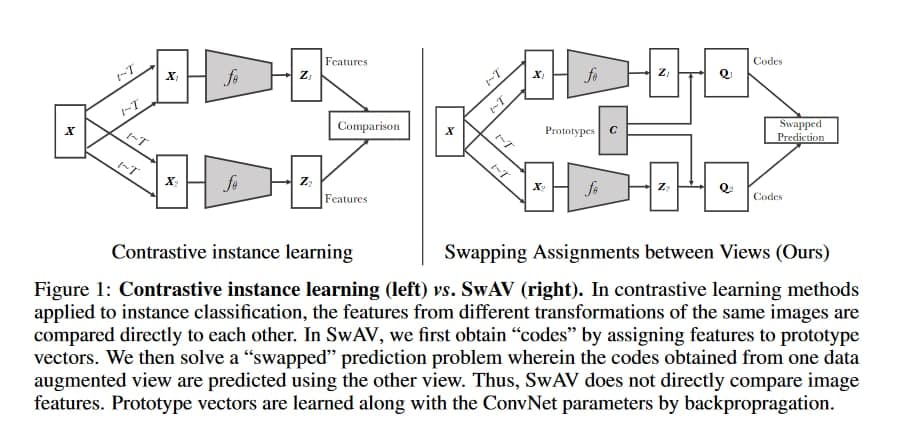

This solution is inspired by contrastive instance learning [58] as we do not consider the codes as a target, but only enforce consistent mapping between views of the same image. Our method can be interpreted as a way of contrasting between multiple image views by comparing their cluster assignments instead of their features.

…so in the end it is contrastive learning with multiple (image) views

This is the core of the method, with z as features and q as codes. If two views contain the same information, the code of one should be predictable from the features of the other:
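In the paper's notation, with $\mathbf{z}_t, \mathbf{z}_s$ the features of two views of the same image and $\mathbf{q}_t, \mathbf{q}_s$ their codes, the swapped prediction objective can be written as:

$$
L(\mathbf{z}_t, \mathbf{z}_s) = \ell(\mathbf{z}_t, \mathbf{q}_s) + \ell(\mathbf{z}_s, \mathbf{q}_t)
$$

where $\ell(\mathbf{z}, \mathbf{q})$ measures how well the features $\mathbf{z}$ predict the code $\mathbf{q}$.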

This differs from standard contrastive learning: SwAV solves a swapped prediction problem (features of one view predict the code of the other, via the shared prototypes) instead of directly comparing two sets of features:

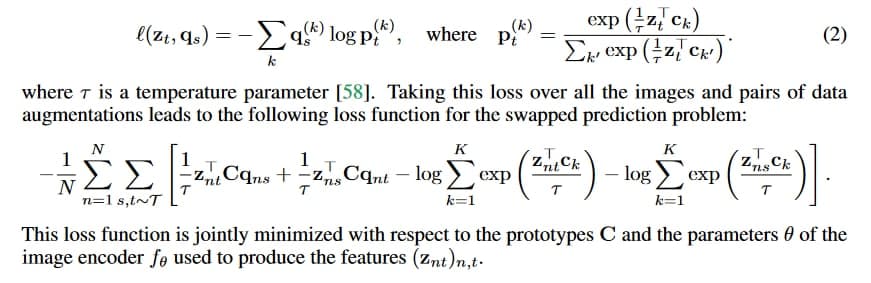

More specifically, the loss for the Swapped Prediction task is this.

Each term represents the cross entropy loss between the code and the probability obtained by taking a softmax of the dot products of z_i and all prototypes in C.
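A minimal NumPy sketch of this loss, assuming the codes are already given (random placeholders below) and a temperature of 0.1, which is my recollection of the paper's default rather than something stated in these notes. The function names are hypothetical and this is a forward pass only, not the authors' implementation.

```python
import numpy as np

def l2_normalize(x):
    """Scale each row to unit Euclidean norm."""
    return x / np.linalg.norm(x, axis=1, keepdims=True)

def log_softmax(scores):
    """Numerically stable row-wise log-softmax."""
    shifted = scores - scores.max(axis=1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))

def swapped_prediction_loss(z_t, z_s, q_t, q_s, prototypes, temperature=0.1):
    """Cross-entropy between each view's code and the other view's prototype softmax."""
    log_p_t = log_softmax(z_t @ prototypes.T / temperature)  # (B, K)
    log_p_s = log_softmax(z_s @ prototypes.T / temperature)  # (B, K)
    loss_t = -(q_s * log_p_t).sum(axis=1)  # features of view t predict the code of view s
    loss_s = -(q_t * log_p_s).sum(axis=1)  # features of view s predict the code of view t
    return float((loss_t + loss_s).mean())

rng = np.random.default_rng(0)
B, D, K = 4, 8, 3  # batch size, feature dimension, number of prototypes
prototypes = l2_normalize(rng.normal(size=(K, D)))
z_t = l2_normalize(rng.normal(size=(B, D)))
z_s = l2_normalize(rng.normal(size=(B, D)))
q_t = rng.dirichlet(np.ones(K), size=B)  # placeholder soft codes, rows sum to 1
q_s = rng.dirichlet(np.ones(K), size=B)
loss = swapped_prediction_loss(z_t, z_s, q_t, q_s, prototypes)
```

Note that the features of the two views are never compared with each other directly. They only interact through the prototypes.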

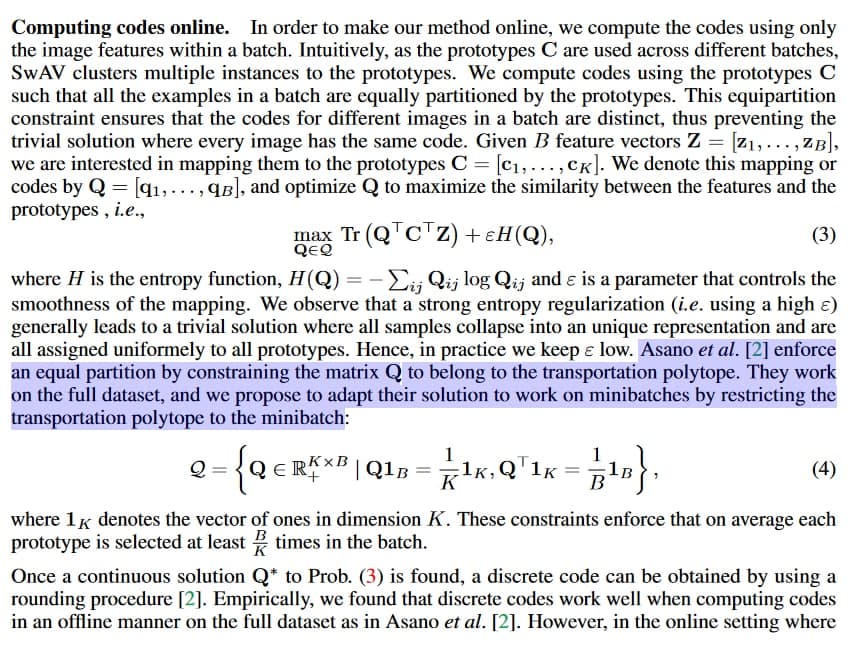

They use an equipartition constraint when distributing samples in a batch across prototype classes to avoid collapse.

In order to compute codes online, they compute the codes using only the image features within a batch.

As the prototypes C are used across different batches, SwAV clusters multiple instances to the prototypes, that is:

We compute codes using the prototypes C such that all the examples in a batch are equally partitioned by the prototypes. This equipartition constraint ensures that the codes for different images in a batch are distinct, thus preventing the trivial solution where every image has the same code.

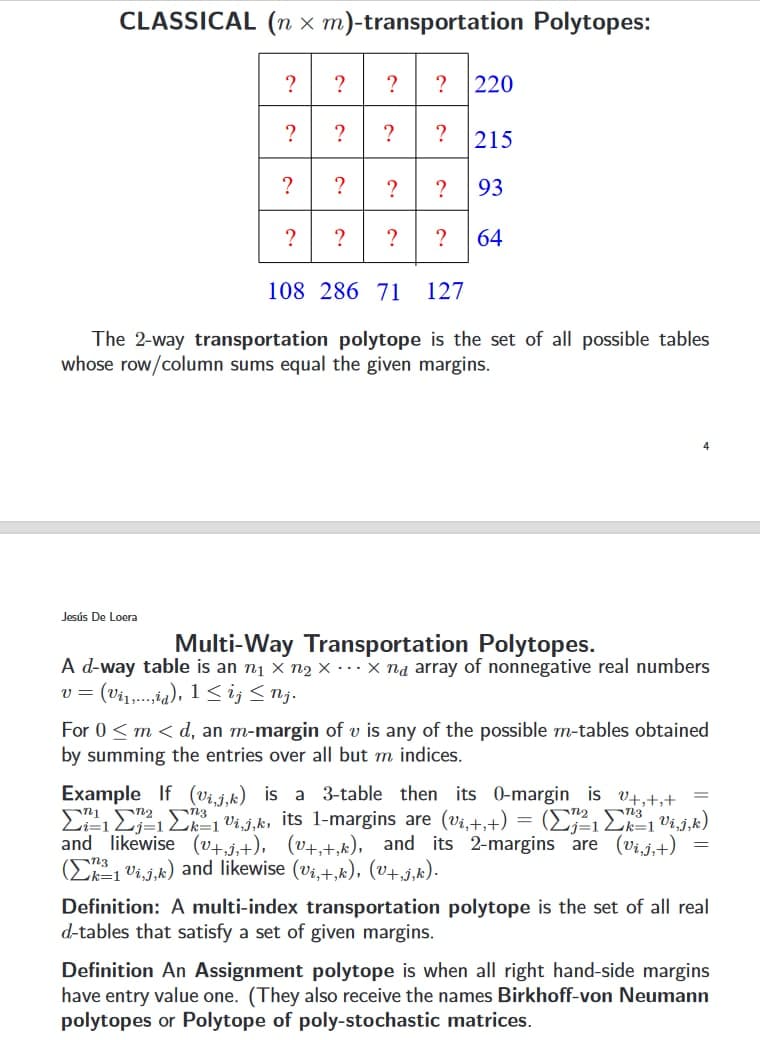

They enforce the equipartition constraint at the minibatch level using a transportation polytope:

What is a transportation polytope, I hear you ask?

It is the set of non-negative matrices with prescribed row and column sums (marginals). That is exactly what Caron et al. want here, i.e. assignments of samples to prototypes that satisfy a set of marginal constraints.

Have a look at these notes for more information: https://www.math.ucdavis.edu/~deloera/TALKS/20yearsafter.pdf
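As I understand the paper, the codes are found inside this polytope with a few iterations of the Sinkhorn-Knopp algorithm, which alternately rescales rows and columns. The sketch below is a NumPy illustration under that assumption. The smoothing parameter `epsilon=0.05`, the 3 iterations and the function name `sinkhorn_codes` are my assumptions, not values taken from these notes.

```python
import numpy as np

def sinkhorn_codes(scores, epsilon=0.05, n_iters=3):
    """Turn (B, K) feature-prototype scores into (B, K) soft codes.

    Rows of the result sum to 1 (one code per sample); the row/column
    rescaling pushes every prototype towards receiving mass B / K.
    """
    B, K = scores.shape
    # Subtracting the max only improves stability; the normalisations below undo the scale.
    Q = np.exp((scores - scores.max()) / epsilon).T  # (K, B)
    Q /= Q.sum()
    for _ in range(n_iters):
        Q /= Q.sum(axis=1, keepdims=True)  # each prototype (row) sums to 1 ...
        Q /= K                             # ... then to 1 / K
        Q /= Q.sum(axis=0, keepdims=True)  # each sample (column) sums to 1 ...
        Q /= B                             # ... then to 1 / B
    return (Q * B).T  # rescale so that each sample's code sums to 1

rng = np.random.default_rng(0)
B, K, D = 8, 4, 16
features = rng.normal(size=(B, D))
features /= np.linalg.norm(features, axis=1, keepdims=True)
prototypes = rng.normal(size=(K, D))
prototypes /= np.linalg.norm(prototypes, axis=1, keepdims=True)
scores = features @ prototypes.T
codes = sinkhorn_codes(scores)
```

A useful sanity check is the collapsed case: if every sample has the same scores, the codes come out uniform over prototypes, so no single prototype can absorb the whole batch.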

They could round the continuous solution to get discrete codes, but this performs worse in the online setting, so they keep the soft codes as targets and compute the loss via cross-entropy.

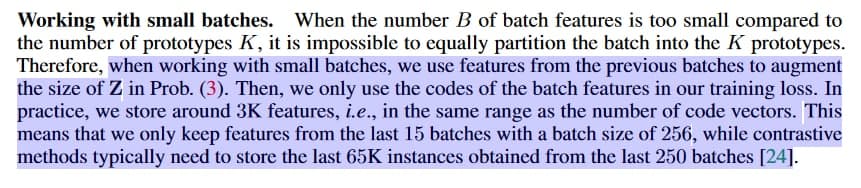

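With small batch sizes, the batch has too few features relative to the number of prototypes to be partitioned equally among them. They therefore also retain features from previous batches when computing the codes, e.g. from the last 15 batches with a batch size of 256 (roughly 3K features), cf. the last 65K instances from the last 250 batches for contrastive methods that work directly on features.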
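A minimal sketch of such a feature store, assuming a simple first-in-first-out buffer. The class name `FeatureQueue` and the toy sizes are hypothetical. As I read the paper, the stored features only enlarge the set used to compute codes, and only the codes of the current batch enter the loss.

```python
import numpy as np

class FeatureQueue:
    """Fixed-size FIFO store of features from the most recent batches."""

    def __init__(self, n_batches, batch_size, dim):
        self.buffer = np.zeros((n_batches * batch_size, dim))
        self.filled = 0  # number of valid rows, counted from the front

    def push(self, features):
        """Insert a batch at the front; the oldest rows fall off the end."""
        b = len(features)
        self.buffer = np.roll(self.buffer, b, axis=0)
        self.buffer[:b] = features
        self.filled = min(self.filled + b, len(self.buffer))

    def stored(self):
        """Return the valid rows, newest first."""
        return self.buffer[:self.filled]

rng = np.random.default_rng(0)
queue = FeatureQueue(n_batches=3, batch_size=4, dim=2)  # toy sizes for illustration
for _ in range(5):
    batch = rng.normal(size=(4, 2))
    # Codes would be computed on the batch plus the stored features ...
    augmented = np.concatenate([batch, queue.stored()])
    # ... but only the first len(batch) rows of codes would be used in the loss.
    queue.push(batch)
```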

Multi-crop increases the self-supervised encoder’s performance whilst keeping computational and memory cost low, since the additional crops are small and low-resolution.
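A rough back-of-the-envelope check of the cost claim, using total input pixels per image as a crude proxy for compute (an assumption: convolutional cost scales roughly with input area). The 2×224 + 6×96 configuration is my recollection of the paper's main setting.

```python
def pixel_count(views):
    """Total number of input pixels for a list of (n_views, side_length) pairs."""
    return sum(n * size * size for n, size in views)

baseline = [(2, 224)]                   # two full-resolution views
multi_crop_views = [(2, 224), (6, 96)]  # two full-resolution plus six small views

ratio = pixel_count(multi_crop_views) / pixel_count(baseline)
n_views_ratio = sum(n for n, _ in multi_crop_views) / sum(n for n, _ in baseline)
```

Under this proxy, four times as many views cost only about 1.55 times as many input pixels.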

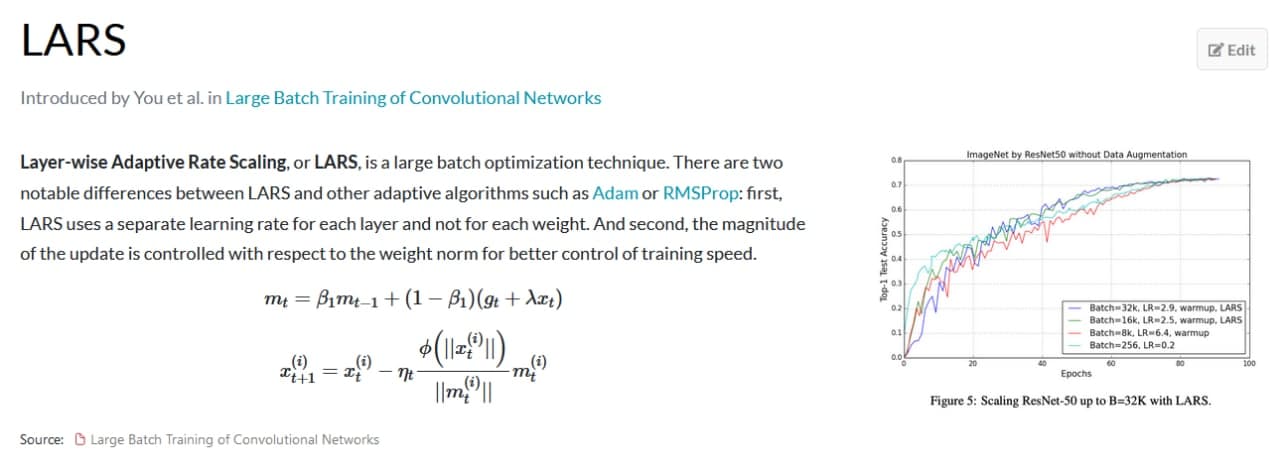

Experimentally they use LARS + cosine learning rate + MLP projection head.
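For intuition, here is a small sketch of two of these ingredients: a cosine learning-rate schedule and the layer-wise "trust ratio" that LARS uses to scale each layer's step. The function names, the trust coefficient of 0.001 and the learning-rate values are illustrative assumptions, not the settings used in the paper.

```python
import math
import numpy as np

def cosine_lr(step, total_steps, base_lr, final_lr=0.0):
    """Cosine decay from base_lr at step 0 to final_lr at total_steps."""
    progress = step / max(1, total_steps)
    return final_lr + 0.5 * (base_lr - final_lr) * (1.0 + math.cos(math.pi * progress))

def lars_local_lr(weights, grads, trust_coefficient=0.001, weight_decay=0.0, eps=1e-12):
    """Layer-wise LARS multiplier: trust_coefficient * ||w|| / (||g|| + weight_decay * ||w||)."""
    w_norm = np.linalg.norm(weights)
    g_norm = np.linalg.norm(grads)
    return trust_coefficient * w_norm / (g_norm + weight_decay * w_norm + eps)

# Illustrative values only.
schedule = [cosine_lr(step, 100, base_lr=1.0, final_lr=0.001) for step in range(101)]
layer_weights = np.array([2.0, 0.0])
layer_grads = np.array([0.0, 4.0])
local_lr = lars_local_lr(layer_weights, layer_grads)
```

The global learning rate from the schedule is multiplied by each layer's local rate, so layers with large gradients relative to their weights take proportionally smaller steps.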

For more on LARS see:

- Original paper https://arxiv.org/pdf/1708.03888.pdf

- LARS at Papers with Code https://paperswithcode.com/method/lars

Some additional scribbles I made when reading the Introduction.

Up to now, this section is a stub, focussing on the introduction, background and methods. See also the results section and ablation studies.