Title: End-to-End Learning of Visual Representations from Uncurated Instructional Videos

Authors: Antoine Miech, Jean-Baptiste Alayrac, Lucas Smaira, Ivan Laptev, Josef Sivic, Andrew Zisserman

Published: 13th December 2019 (Friday) @ 11:59:58

Link: http://arxiv.org/abs/1912.06430v4

Abstract

Annotating videos is cumbersome, expensive and not scalable. Yet, many strong video models still rely on manually annotated data. With the recent introduction of the HowTo100M dataset, narrated videos now offer the possibility of learning video representations without manual supervision. In this work we propose a new learning approach, MIL-NCE, capable of addressing misalignments inherent to narrated videos. With this approach we are able to learn strong video representations from scratch, without the need for any manual annotation. We evaluate our representations on a wide range of four downstream tasks over eight datasets: action recognition (HMDB-51, UCF-101, Kinetics-700), text-to-video retrieval (YouCook2, MSR-VTT), action localization (YouTube-8M Segments, CrossTask) and action segmentation (COIN). Our method outperforms all published self-supervised approaches for these tasks as well as several fully supervised baselines.

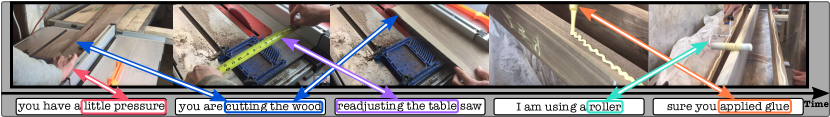

In this work, we propose a bespoke training loss, dubbed MIL-NCE as it inherits from Multiple Instance Learning (MIL) and Noise Contrastive Estimation (NCE). Our method is capable of addressing visually misaligned narrations from uncurated instructional videos as illustrated in Figure 1.

Figure 1: We describe an efficient approach to learn visual representations from misaligned and noisy narrations (bottom) automatically extracted from instructional videos (top). Our video representations are learnt from scratch without relying on any manually annotated visual dataset yet outperform all self-supervised and many fully-supervised methods on several video recognition benchmarks.

We demonstrate that the representations thus obtained are competitive with their strongly supervised counterparts on four downstream tasks over eight video datasets.

Self-supervised Proxy Tasks in Vision (circa December 2019)

Self-supervised approaches do not suffer from these issues as the idea is to define a supervised proxy task using labels directly generated from videos. Some of these tasks include: temporal ordering of video clips or frames [24, 46, 54, 86], predicting geometric transformations [37], maximizing the mutual information of multiple views [74], predicting motion and appearance [78], predicting the future, the past or a portion of masked input in the feature space [30, 71, 76], colorizing videos [77], predicting 3D geometry from synthetic data [25], predicting the audio in a feature space [7, 43] or tasks leveraging temporal cycle consistency [23, 81].

Vision, speech and language. A common alternative to training visual models using manually defined sets of labels is to exploit semantic supervision from natural language or speech. Numerous prior works [18, 21, 27, 28, 42, 51, 55, 60, 62, 88, 79, 80, 83, 84] have used image / video description datasets [48, 63, 65, 87, 90] to learn an embedding space where visual and textual data are close only if they are semantically similar.

…To avoid labeling visual data, several approaches have leveraged audio transcripts obtained from narrated videos using automatic speech recognition (ASR) as a way to supervise video models for object detection [3, 15, 56], captioning [34, 72], classification [2, 44, 49, 89], summarization [59] or retrieval [52] using large-scale narrated video datasets such as How2 [67] or HowTo100M [52].

Others [10, 31] have investigated learning from narrated videos by directly using the raw speech waveform instead of generating transcriptions. Most related to us is the work of Miech et al. [52] who trained a joint video and text embedding from uncurated instructional videos [52].

The citations above ([10, 31]) for “learning from narrated videos by directly using the raw speech waveform instead of generating transcriptions” are not resolved in these notes, but this is super interesting. What are these papers?

They don’t use any manual annotation. In contrast, the key innovation of our work is that we demonstrate learning a generic video representation as well as a joint video-text embedding from scratch, without pre-training on manually annotated video or image datasets.

What is Multiple instance learning?

Multiple instance learning for video understanding. Multiple instance learning methods have been employed in many weakly-supervised video understanding problems including: person recognition in movies using scripts [11, 50, 61], anomaly detection [70], weakly supervised action classification [47, 68] and localization [16, 22, 82], co-reference resolution of characters in TV series [64] or object tracking [8]. These methods often rely on some form of maxpooling (i.e. MIL-SVM [4]) or discriminative clustering (i.e. DIFFRAC [9]) to resolve the label ambiguities, and have used mostly linear (or shallow) models. In this work, we present MIL-NCE, a new approach marrying the noise contrastive estimation (NCE) framework [29] with multiple instance learning [20].

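OK, so basically: labels are attached to bags of instances rather than to individual instances, and a positive bag is only assumed to contain at least one true positive. In this context, a bag is the set of candidate narrations close in time to a clip, of which at least one is assumed to describe what is shown.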
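As a rough illustration of the max-pooling flavour of MIL mentioned above, the sketch below scores a bag by its best-scoring instance. The function names and the threshold are hypothetical and only serve to show the idea; this is not the MIL-NCE objective itself.

```python
def bag_score_max(instance_scores):
    """Score a bag by its highest-scoring instance (max-pooling MIL)."""
    return max(instance_scores)


def bag_label(instance_scores, threshold=0.0):
    """A bag is predicted positive if at least one instance clears the threshold."""
    return bag_score_max(instance_scores) > threshold


# One candidate narration matches the clip well, the others do not:
# the bag as a whole is still treated as positive.
candidate_scores = [-1.0, 0.5, -2.0]
is_positive = bag_label(candidate_scores)
```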

Leveraging Uncurated Instructional Videos (§3)

- In practice, a pair is composed of:

  - a short 3.2-second video clip (32 frames at 10 FPS),

  - together with a small number of words (not exceeding 16) that correspond to what the person is saying in the video.

- For example, someone might be sanding wood while mentioning the action “sanding down” or the object “sander” as illustrated in Figure 2a.

- Given this input, our goal is to learn a joint embedding space where similarity between the narration and video embedding is high when the text and visual content are semantically similar and low otherwise, and we wish to learn this starting from raw pixels in the video and text descriptions.

Basically an improved version of the very simple model they present in “HowTo100M: Learning a Text-Video Embedding by Watching Hundred Million Narrated Video Clips” [52], which shares several authors with this paper.

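The MIL-NCE objective is essentially a simple NCE-style contrastive loss over positive and negative video-text pairs, except that each clip contributes a bag of positive candidate pairs rather than a single one.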
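To make the objective concrete, here is a minimal NumPy sketch of the per-sample loss, assuming the similarity scores (dot products of video and text embeddings) have already been computed. The function names `logsumexp` and `mil_nce_loss` are illustrative and not taken from the authors' code. The loss is the negative log of the ratio between the summed exponentiated positive scores and the summed exponentiated scores of all positives and negatives.

```python
import numpy as np


def logsumexp(a):
    """Numerically stable log(sum(exp(a))) for a 1-D array."""
    m = np.max(a)
    return m + np.log(np.sum(np.exp(a - m)))


def mil_nce_loss(pos_scores, neg_scores):
    """Per-sample MIL-NCE-style loss.

    pos_scores: similarity scores of the bag of positive candidate pairs.
    neg_scores: similarity scores of the negative pairs.
    Returns -log( sum_pos exp(s) / (sum_pos exp(s) + sum_neg exp(s)) ).
    """
    pos = np.asarray(pos_scores, dtype=np.float64)
    neg = np.asarray(neg_scores, dtype=np.float64)
    log_numerator = logsumexp(pos)
    log_denominator = logsumexp(np.concatenate([pos, neg]))
    return log_denominator - log_numerator


# A bag where only one of three candidate narrations matches the clip
# still yields a low loss, because the numerator sums over the whole bag.
loss = mil_nce_loss(pos_scores=[4.0, -1.0, -2.0], neg_scores=[-3.0, -2.5])
```

With a single positive score the function reduces to the familiar softmax-style contrastive loss; the multiple-instance part is only the sum over the positive bag in the numerator.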

Experiments

Implementation

Model & Inputs:

- For the 3D CNN backbone, we use the standard I3D implementation from [14]

- For all ablation studies and for the comparison to the state of the art, we report results on both I3D and S3D [85].

- We use the Google News self-supervised pre-trained word2vec (d=300) embedding from [53] for our word representation

- Each video clip at training contains 32 frames sampled at 10 fps (3.2 seconds) with a 200x200 resolution (224x224 at test time).

- For each narration, we take a maximum of 16 words
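The input settings above can be sketched in a few lines. This is only an illustration under stated assumptions: the helper names are hypothetical, and whitespace tokenization is an assumption, since these notes do not say how narrations are split into words.

```python
def clip_frame_times(start_sec, num_frames=32, fps=10):
    """Timestamps (in seconds) of the frames sampled for one training clip."""
    return [start_sec + i / fps for i in range(num_frames)]


def truncate_narration(text, max_words=16):
    """Keep at most max_words words (assumes simple whitespace tokenization)."""
    return text.split()[:max_words]


times = clip_frame_times(start_sec=12.0)
clip_duration = len(times) / 10  # 32 frames at 10 fps cover 3.2 seconds
words = truncate_narration("now we are sanding down the edge of the board with the sander")
```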

Training dataset: Positive and Negative Pairs

- For each clip-narration training pair (x, y) sampled, we construct the bag of positive candidate pairs P by considering the nearest captions in time to y as depicted in Figure 2a.

- For example, if we set the number of positive candidate pairs to 3, we would have $\mathcal{P} = \{(x, y), (x, y^1), (x, y^2)\}$ where $y^1$ and $y^2$ are the 2 closest narrations in time to $y$.

- We work with batches containing $B$ positive video-narration pairs $\{(x_i, y_i)\}_{i \in [1, B]}$.

- We construct the set $\mathcal{N}$ by simply creating negative pairs from this batch by combining $\{(x_i, y_j)\}_{i \neq j}$. Since all representations are already computed, computing negative scores is cheap and efficient.
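The batch construction can be sketched as follows, assuming negatives are formed by pairing each clip with the narrations attached to the other clips in the batch. The array shapes and function names are hypothetical; the point is that one matrix product over embeddings that are already computed yields every positive and negative score at once.

```python
import numpy as np


def batch_scores(video_emb, text_emb):
    """All clip-narration similarity scores in a batch.

    video_emb: (B, d) clip embeddings.
    text_emb:  (B, K, d) embeddings of the K candidate narrations per clip.
    Returns scores of shape (B, B, K): scores[i, j, k] = <x_i, k-th narration of clip j>.
    """
    return np.einsum("id,jkd->ijk", video_emb, text_emb)


def split_pos_neg(scores):
    """Split scores into positive bags (i == j) and in-batch negatives (i != j)."""
    batch_size = scores.shape[0]
    same_clip = np.eye(batch_size, dtype=bool)
    pos = scores[same_clip]                           # (B, K)
    neg = scores[~same_clip].reshape(batch_size, -1)  # (B, (B - 1) * K)
    return pos, neg


rng = np.random.default_rng(0)
video_emb = rng.normal(size=(4, 5))    # B = 4 clips, d = 5
text_emb = rng.normal(size=(4, 3, 5))  # K = 3 candidate narrations per clip
scores = batch_scores(video_emb, text_emb)
pos, neg = split_pos_neg(scores)
```

Each row of `pos` is the bag of positive candidates for one clip and each row of `neg` holds that clip's negatives, so both can be fed directly to a per-sample contrastive loss.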

Training:

- We train our model using Cloud TPUs v3, each Cloud TPU having a batch size of 128 videos.

Downstream tasks:

- Action Recognition: HMDB-51 [45], UCF-101 [69], Kinetics-700 [13].

- Text-to-Video retrieval: YouCook2 [90], MSR-VTT [87].

- Action Localization: YouTube-8M [1] Segments.

- Action Step Localization: CrossTask [91].

- Action Segmentation: COIN [73].