Calculating the Cost of a Google Deepmind Paper - 152334H

Recently, GDM released a great paper titled, Scaling Exponents Across Parameterizations and Optimizers, in which they conduct over 10,000 LLM training runs to obtain optimal hyperparameters under different regimes.

After reading it (it was great), I wanted to test my understanding of the paper by tallying up all experiments conducted within, calculating the total compute cost it would take to replicate the paper.

Headline result

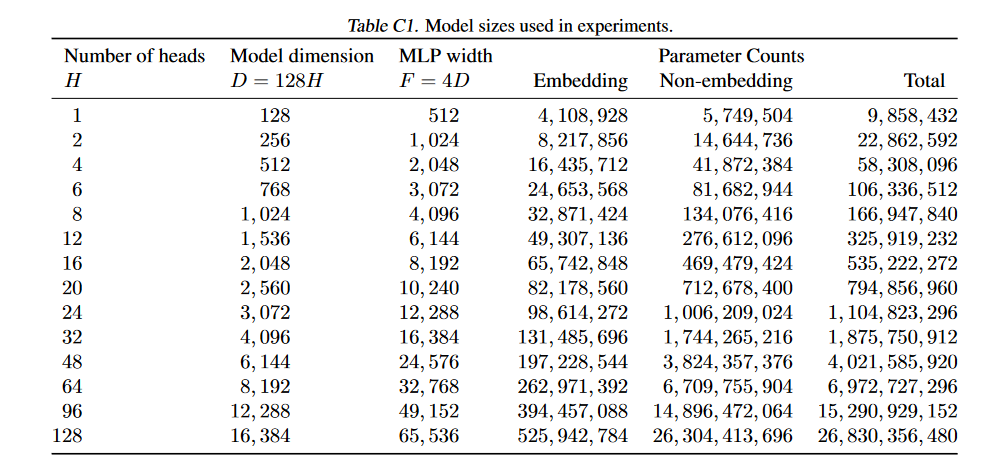

| Subset | Sources of uncertainty | FLOPs | Costs @ $3/H100/hr |
|---|---|---|---|
| Alignment | N/A | 3.7e20 | $888 |
| LR variants (+default) | LR-sweeps, bayes search | 7.99e23 | $1.90M |
| LR variants (+optimal) | LR-sweeps | 1.35e24 | $3.22M |
| Epsilon (Heatmaps) | LR-sweeps | 1.34e24 | $3.19M |
| Epsilon (Full Sweeps) | LR-sweeps | 7.99e23 | $1.90M |
| Weight Decay | LR-sweeps | 1.33e23 | $317K |
| Adafactor vs Adam+PS | LR-sweeps | 7.92e22 | $188.5K |
| Compute Optimals | LR-sweeps | 7.52e23 | $1.79M |
| Total | too much | 5.42e24 | $12.9M |

Any corrections on the numbers here will be appreciated.

Although I have made significant efforts to vet these claims, if I have made significant mistakes in the mathematics, these results could be off by orders of magnitude.

Sidenote: What’s an H100 worth?

Although it’s never stated, all experiments in the paper were almost certainly conducted with TPUs (because it’s from Google Deepmind). Furthermore, as there is no mention of int8 usage in their paper, it is most likely that all experiments were conducted with bfloat16 compute precision, per the nanodo default.

However, as a GPU user, I prefer to calculate compute in terms of H100 hours. Some basic facts:

- The H100-SXM is reported as having 989.40TFLOP/s of 16-bit tensor core operations.

- Also, 66.9TFLOP/s fp32 non-tensor, but I won’t consider non-tensor operations (such as softmax or hadamard products) in my analysis.

- Recent pytorch blogs and torchtitan both report single-node FSDP’d bf16 H100 MFU for reasonably mid sized models at (optimistically) 40%.

- the smaller models () in the paper are unlikely to have MFU that high.

- Although this is not hard to push higher with some manual tuning, the time spent tuning performance & engineering required to heuristically adjust for efficiency depending on setting is unlikely to be worth it.

- The cost of an H100 node (at the time of writing) is $2.85/hr/gpu from sfcompute, and ballpark $2/hr/gpu if you get a long term bulk contract.

If we pessimistically estimate the true average tensor FLOP/s provided by a H100 GPU on an average run as 3.5e14 (aka slightly above 35% MFU), and the cost of a H100 GPU as $3/hr, we get:

These numbers are fungible and you can choose to mentally halve (or double) them if you find it appropriate.
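The conversion above can be sketched in a few lines. This assumes only the two figures stated in this section (3.5e14 sustained FLOP/s per H100 and $3 per GPU-hour); the function and constant names are my own labels, not anything from the paper.

```python
# Figures quoted in this section.
H100_PEAK_BF16 = 989.4e12   # peak 16-bit tensor FLOP/s of an H100-SXM
EFFECTIVE_FLOPS = 3.5e14    # assumed sustained FLOP/s per GPU on an average run
USD_PER_GPU_HOUR = 3.0      # assumed rental price per H100-hour


def h100_hours(flops: float) -> float:
    """GPU-hours needed to execute `flops` tensor FLOPs at the assumed rate."""
    return flops / EFFECTIVE_FLOPS / 3600


def usd_cost(flops: float) -> float:
    """Dollar cost of `flops` tensor FLOPs at the assumed hourly price."""
    return h100_hours(flops) * USD_PER_GPU_HOUR


print(f"implied MFU: {EFFECTIVE_FLOPS / H100_PEAK_BF16:.1%}")
print(f"USD per FLOP: {usd_cost(1.0):.3e}")
print(f"5.42e24 FLOPs: ${usd_cost(5.42e24) / 1e6:.2f}M")
```

Halving or doubling `EFFECTIVE_FLOPS` or `USD_PER_GPU_HOUR` rescales every dollar figure in this post linearly, which is all "fungible" means here.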

A summary of all experiments tried

There are a few different types of experiments done in the paper:

Experiment types

- Alignment experiments, which use a single global close-to-optimal LR, while varying
  - 4x parameterizations
  - 3x optimizers (Adam, SGD+momentum, Adafactor)
- Learning rate experiments, which vary:
  - 3x optimizers (Adam, SGD+momentum, Adam+PS)
  - 4x parameterizations
  - 14x model widths, but this is really best described as scaling numheads
  - Global LR vs Per-layer Beta LR vs Per-layer Gamma LR + Per-layer No align LR
    - The experiments are particularly complex to calculate, see point 3
  - LR by an indeterminate range – they sweep in intervals of and terminate rightwards when
    - the LR leads to NaNs OR
    - the eval loss for a given LR

    i.e. the first (larger than optimal) LR to show either of those conditions is not plotted, and the LR or is.

    …or at least, that is what the paper says is supposed to be the case. I explain my contentions later.
- Adam Epsilon experiments, which vary
  - over 4x parameterizations,
  - at least over Adam, where
    - at least 6x eps is tried
    - at least constant vs per-layer is compared.
    - at least 13x LR is tried. Appendix F: “learning rate sweep at each model dim for each value of epsilon or base epsilon”
  - according to Appendix J/K, over all 14 model dims,
    - For Adam, 4x (base eps, small const, good per-layer, atan2)
      - technically, we double-count base EPS from the LR experiments, but we also neglect the extra no-align per-layer eps experiments, so this cancels out
    - For Adam+PS, 2x (base eps, good per-layer)
      - the double-neglect accounting argument applies here too
- extra weight decay experiments
  - static: adam, per-layer, full alignment, decoupled 1e-4
  - 4x parameterizations
  - LR experiment-like sweep across all 14 model widths
- extra adafactor experiments
  - 2x optim (Adafactor vs adam+ps)
  - 2x setting (globalLR+default vs perlayer+optimal)
  - 4x parameterizations
  - LR experiment-like sweep across only 11x model widths up to due to FSDP.
    - actually implemented as 12x but final results are 11x and I follow the latter.
- extra fixed step vs compute optimal
  - the 50k fixed step experiments are not the same as any of the above; they use “default constant learning rate multipliers” and have different power laws.
  - 3x optim (SGD+moment, adam, adafactor)
  - 4x parameterizations
  - LR experiment-like sweep across model width && LR.
    - width only goes up to 11x, last 3 are missing on Compute Optimal.
    - compute-optimal experiments use 20x tokens of non-embedding P as a heuristic.

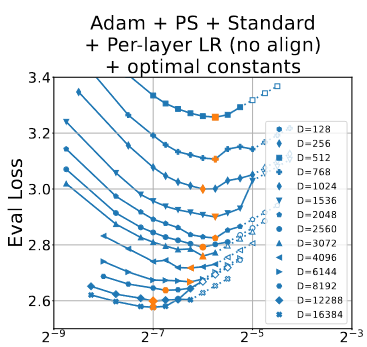

However, there are many problems with the experimental summary as given above.

Problems

- It is not clear whether they re-executed the per-layer LR experiments for the two edge cases where per-layer constants lead to identical behavior to globalLR (where ):

- muP + SGD + full alignment, or

- Adafactor + any parameterization + no alignment

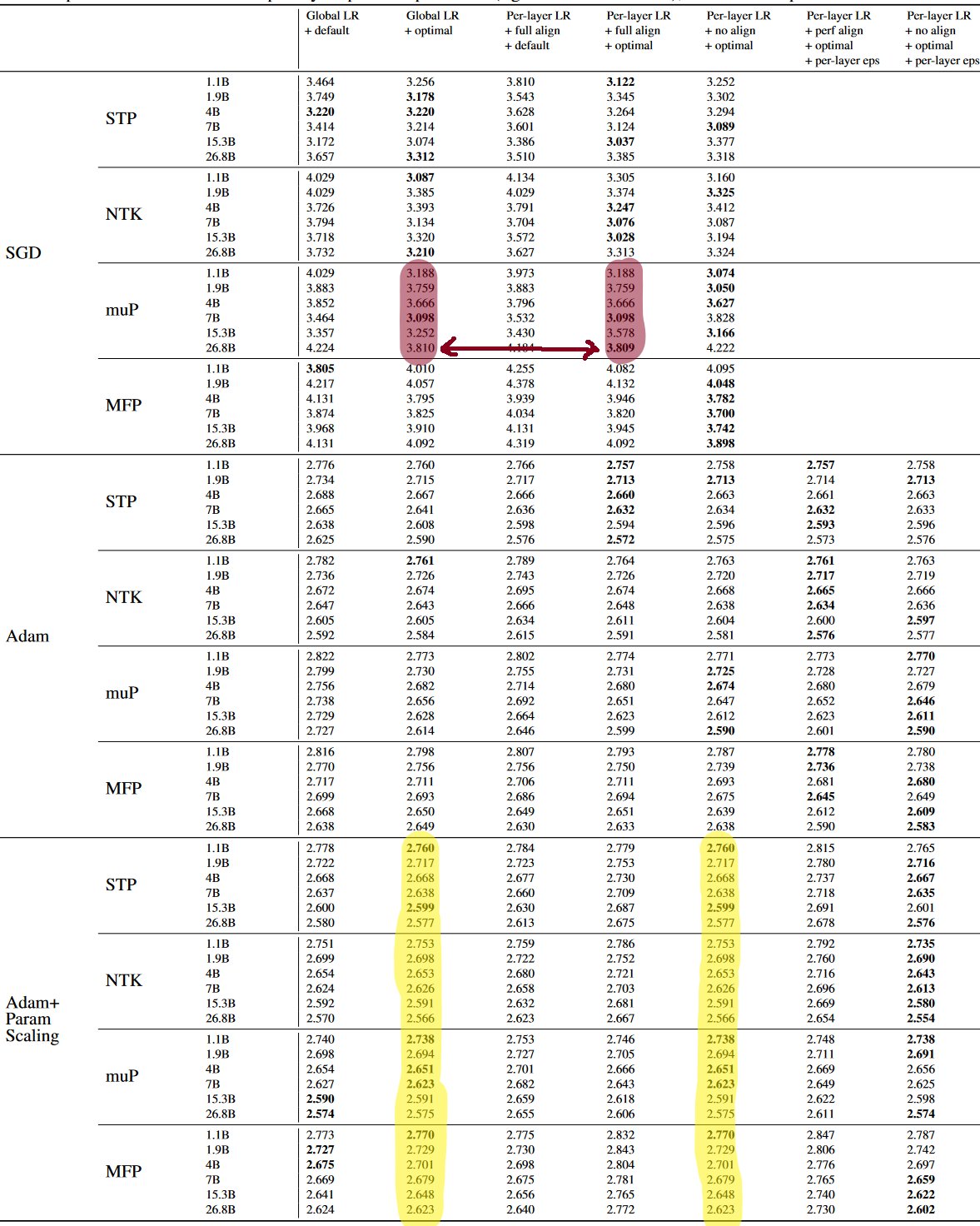

My expectation is that their experiments were repeated, because if you look at Table E1, you’ll see that the muP+SGD+full columns actually have a single diverging value (presumably caused by precision differences):

However, I was also given (private) notice that in some cases, the experiments with theoretically equivalent settings were merely executed once, with the eval losses copied twice. This makes the true extent of compute unknowable from the paper.

- The LR experiments have indeterminate bounds, so I can’t directly figure out how many experiments were executed.

You can’t “just read the graphs” to figure out what the range of LRs used are either; they cut off the y/x axis:

Frankly, it doesn’t even look like the steps here are guaranteed to be split in intervals of .

After further inspection, it looks an awful lot like the runs have arbitrary LR ranges even for the same , optim, parameterization, and alignment. Or I just don’t understand the selection process (what are the unshaded shapes?).

- In C.4., they state:

When tuning the per-layer constant multiplicative factors defined in Section 4.2, we use vizier to perform 3D hparam search for at . Recall that we define the learning rate in layer as and sweep one dimension at all model sizes to determine , so these values of define two ratios where any common factor can be absorbed by .

To be clear, that last segment means: “you can divide by any of the 3 values to obtain some tuple, the sweep will bring back to the correct value”. And so they say:

For each optimizer × parameterization, we run 800 trials with at most 100 trials in parallel with a range set to for each constant. If the optimal value for any of the constants is at or near the edge of the range after this first search, we extend the range of the sweep for that constant to 0.01 and 100x the optimal value found in the original sweep and repeat the same tuning procedure.

Upside: this gives 800 experiments as a lower bound for the experiments. Downside: We otherwise have no plotted information about the 3D experiments that were conducted. The actual plotted graphs just show final eval loss against base LR, under the assumption that the base line on the Optimal Constants graphs actually hide the extra work done to sweep values.

-

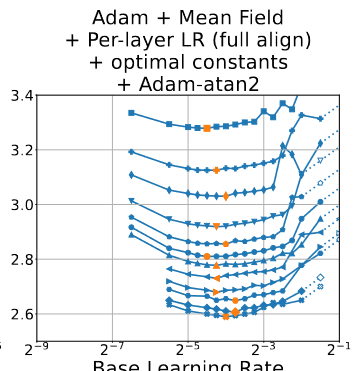

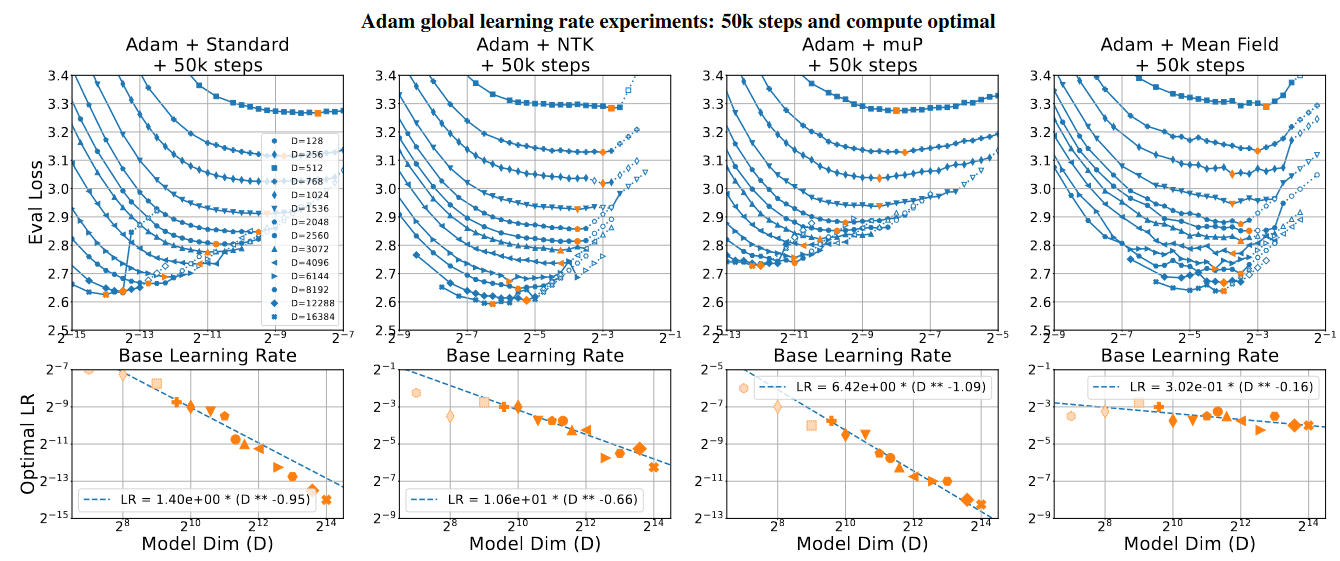

It is deeply unclear to me what is actually implemented for the fixed-step vs compute optimal runs. If we look at the 50k steps graph:

It looks extremely similar, but not identical to the original Adam+GlobalLR+default graphs:

I have no idea what the differences are supposed to be here. However, in the interest of sticking with the paper’s behaviour, I attempt to include the compute used for these pseudo-repeated experiments.

For each of these issues, I do my best to pick an approximation that makes sense to me in the later sections.

Transformer information

In Appendix C, the model is described as:

- decoder-only

- no bias on weights (including layernorm, which only has learnable scale)

- LPE, pre-LN, GeLU, no tied emb

- T5 Sentencepiece 32k + 1BOS + 100extra, i.e. a vocabulary of 32,101 tokens. This is never stated to be padded.

- “Training inputs are sequence-packed, while evaluation inputs are padded”

- , , ,

- , .

with some extra details for later:

- no dropout

- mostly FSDP

- (this excludes the layernorm params () and the LPE ())

- “The compute optimal experiments include models up to or , and the fixed (50,000) step experiments include models up to .”

FLOPs per token

To start, we want to find , the number of FLOPs required per token for a training run.

Basic transformer math

As a reminder for any noam-like transformer, the tensor FLOPs required per token is approx:

In particular, assumes a causal mask halves the computation required (I assume flash-attn does this)

The paper does not describe the usage of any GQA/MQA, so I assume . This gives us

We have additional constants of , , and , so we write:

For all experiments except the compute-optimal series in Appendix I, we also have a hardcoded number of and global , making the total number of tokens seen per experiment by default.
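As a minimal sketch of this kind of accounting (not necessarily the exact expression used for the estimates in this post): assume each matmul parameter costs 6 FLOPs per token for forward plus backward, the output projection to logits is counted, the embedding lookup is not, and causal masking halves the attention-score work. All argument names and the example values below are placeholders I chose; only the 50,000 step count is mentioned in this post.

```python
def flops_per_token(d_model: int, n_layers: int, d_ff: int,
                    seq_len: int, vocab_size: int) -> int:
    """Approximate training (forward + backward) tensor FLOPs per token."""
    attn_params = 4 * d_model * d_model    # Q, K, V, O projections (no GQA/MQA)
    mlp_params = 2 * d_model * d_ff        # up and down projections
    unembed_params = d_model * vocab_size  # final logits matmul
    matmul_params = n_layers * (attn_params + mlp_params) + unembed_params
    dense = 6 * matmul_params              # 2 FLOPs/param forward, 4 backward
    # QK^T and AV cost 4*S*D per layer forward; the causal mask halves that,
    # and forward + backward multiplies it by 3.
    attention = 6 * n_layers * seq_len * d_model
    return dense + attention


def tokens_per_run(steps: int, batch_size: int, seq_len: int) -> int:
    """Total tokens seen by one fixed-step training run."""
    return steps * batch_size * seq_len


# Illustrative values only; treat every number except the step count as a placeholder.
m = flops_per_token(d_model=1024, n_layers=8, d_ff=4096, seq_len=512, vocab_size=32101)
d = tokens_per_run(steps=50_000, batch_size=256, seq_len=512)
print(f"{m:.3e} FLOPs/token x {d:.3e} tokens = {m * d:.3e} FLOPs per run")
```

Because the dense term grows with the square of the width while the attention term grows only linearly, the widest models dominate the total for any sweep that covers all widths.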

Subproblem: Alignment experiments

I assume the alignment experiments got their optimal LRs from the later experiments, and didn’t do their own sweeps, so that would make the cost simply,

These experiments would take <US$1k to execute.

Subproblem: Table E1 experiments

Table E1 has a neat collection of many of the runs done for obtaining the best eval losses under any given parameterization/optimizer/setting (some combination of global vs per-layer vs -optimal vs -optimal).

This is an easier subproblem to tackle than the general issue of all LR sweeps, as the requirements are better known – though still not entirely determined, per the repetition ambiguity mentioned earlier. For that issue, I assume that all experiments were conducted, with no copied results, making the estimate here an upper bound.

We have the following schedule:

- 4x parameterizations

- 3x optimizers, where

- SGD only receives 5 experimental settings

- Adam & Adam+PS receive 7 each

These would’ve taken slightly below $400k in H100 compute to execute. Reasonably speaking, this is within the bounds of SWE life savings / big academic budgets / TPU Research Cloud upper-class. Technically replicable, albeit not cheap.

But the bulk of the compute used in the paper comes from the LR sweeps, so we have to start working on that.

Estimating LR sweep damage

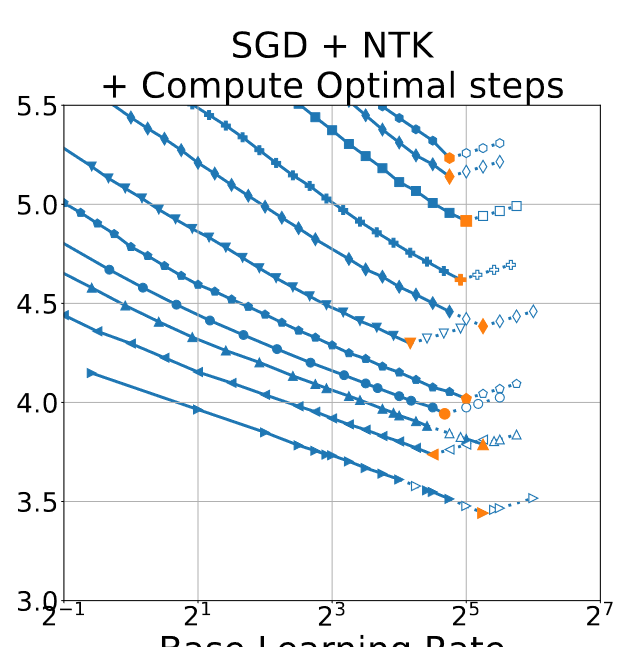

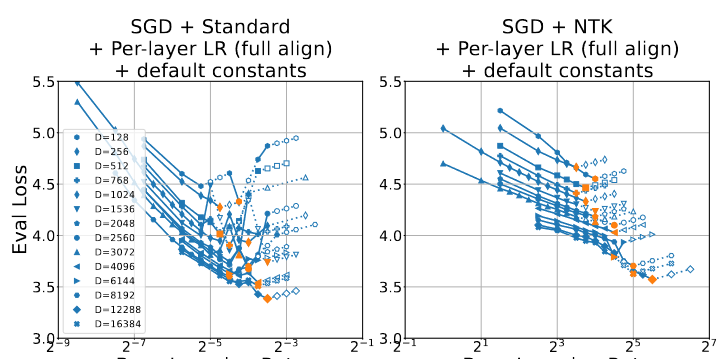

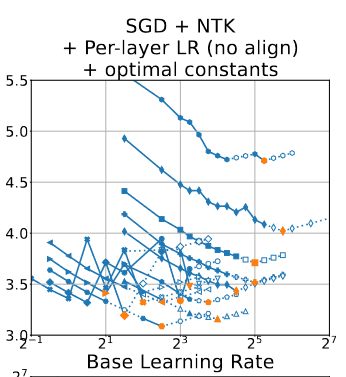

Here’s another graph:

[

](https://152334h.github.io/blog/scaling-exponents/Pasted%20image%2020240730050857.png “/blog/scaling-exponents/Pasted%20image%2020240730050857.png”)

And here’s a third one:

[

](https://152334h.github.io/blog/scaling-exponents/Pasted%20image%2020240730050917.png “/blog/scaling-exponents/Pasted%20image%2020240730050917.png”)

Guess what?

- There isn’t a constant number of LRs swept for a given , or optim/parameterization/setting.

- Especially notable: number of runs seems inversely correlated with ; there are almost always fewer runs for the highest dim than the lowest.

- Neither is there an observable cutoff for when the runs stop – runs will spike up to 2x the optimal no problem.

- You can’t get the exact correct number of runs by graph-reading; in many cases the points are out-of-bounds.

The consistencies I do spot are that:

- there is typically a “starting LR” (smallest base) for any given line.

- the hollowed points are typically to the right – but sometimes left – of the optimal point.

so I think the mechanism worked this way:

- start a sweep with a starting LR and some expected jumpsizes of or .

- terminate it by the 20% / NaN heuristic.

- if the graph looks weird (optimal point somewhere odd), rerun to fill many intervals around the current optimal. These result in the plotted hollow points.

I have no means of confirming this as the experimental procedure, as the authors of the paper stopped replying to me.
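The first two steps of that guessed mechanism could look something like the sketch below. Everything here is hypothetical: `eval_loss` stands in for a full training run, `step_factor` is a placeholder for whatever jump size was actually used, and I am reading the 20% rule as "eval loss more than 20% above the best seen so far", which is my interpretation rather than something confirmed.

```python
import math
from typing import Callable, List, Tuple


def sweep_lr(eval_loss: Callable[[float], float], start_lr: float,
             step_factor: float = 2.0, tolerance: float = 0.20,
             max_runs: int = 64) -> List[Tuple[float, float]]:
    """Sweep LR rightwards from start_lr until the loss is NaN or too far above the best."""
    runs: List[Tuple[float, float]] = []
    best = math.inf
    lr = start_lr
    for _ in range(max_runs):
        loss = eval_loss(lr)           # one full training run in practice
        runs.append((lr, loss))
        if math.isnan(loss):           # diverged: stop sweeping
            break
        if loss > best * (1 + tolerance):  # well past the optimum: stop sweeping
            break
        best = min(best, loss)
        lr *= step_factor
    return runs


def toy_loss(lr: float) -> float:
    """Toy bowl-shaped loss with its optimum at lr = 2**-10 (illustration only)."""
    return 3.0 + 0.1 * (math.log2(lr) + 10) ** 2


runs = sweep_lr(toy_loss, start_lr=2.0 ** -14)
print(f"{len(runs)} runs, last LR tried = {runs[-1][0]}")
```

Note that the terminating run is still paid for even though it is not plotted, so any count read off the graphs undercounts by at least one run per line.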

An arbitrary decision

Due to my desire to finish this blog post in a reasonable amount of time, I made the unprincipled decision of approximating the number of experiments-per-line in any given Eval Loss vs Base Learning Rate graph as 15.

Why 15? By eyeballing, the range of runs-per-line for the highest hovers around 10~15. Although the lines with smaller D tend to have far more points on average, the amount of compute spent per run scales by , so I think this is fair enough.

Feel free to suggest a more principled approach if you have one.

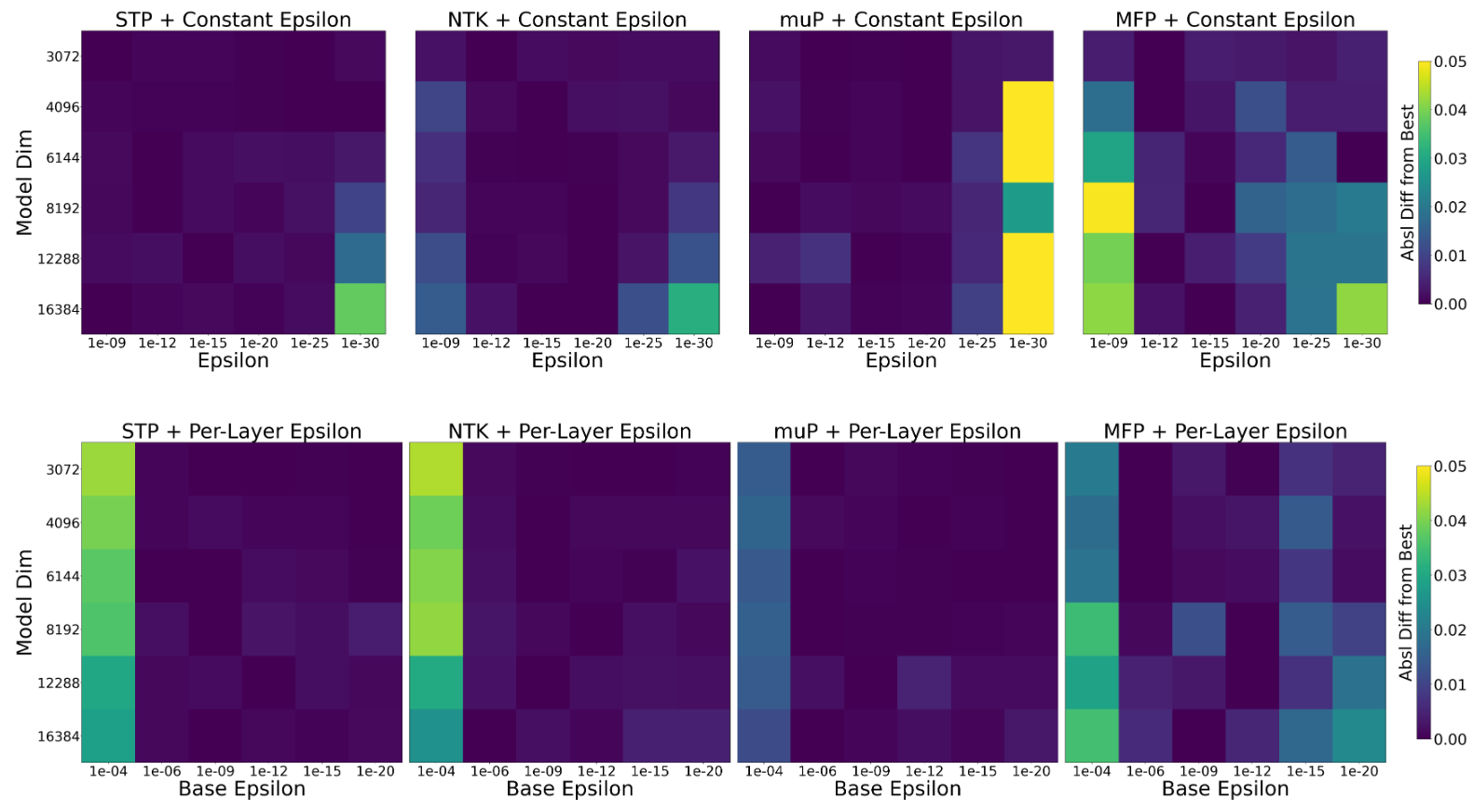

Main problem: Epsilon

Much of the compute used up by the paper comes from Section 4.3, the Adam epsilon experiments.

Optimal eps runs

Now that we have an estimate of LRs-per-line as 15, we can estimate the compute spent on the actual Adam epsilon varying graphs:

Simple enough, right? Ignoring the ~$2M bill.

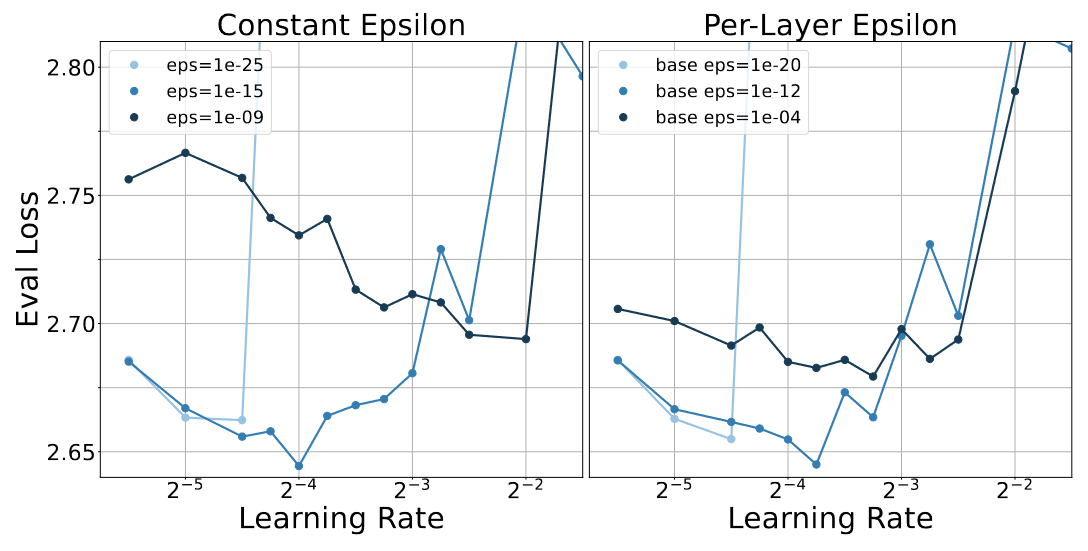

Epsilon Heatmaps

There are two ways you could approach the expected sweep range for this problem:

-

assume the LR experiment sweep code was reused. All 14x , LR swept by arcane unknown ruleset.

-

Limit to the graphs. Only the last 6 values of were shown – assume only those were used. Plus, if we look at Figure 6:

Notice that the range of evaluated learning rates actually seems constant here, unlike in the normal Eval Loss vs Base LR plots.

I’m picking the latter because it’s simpler. Would be happy to be shown evidence that this is wrong.

These squares are worth US$3.2 Million

To be clear, this is supposed to be an underestimate of the budget required, because we model the average number of unique LRs used per heatmap square as a constant instead of the (typically higher) value used in variable LR sweeps.

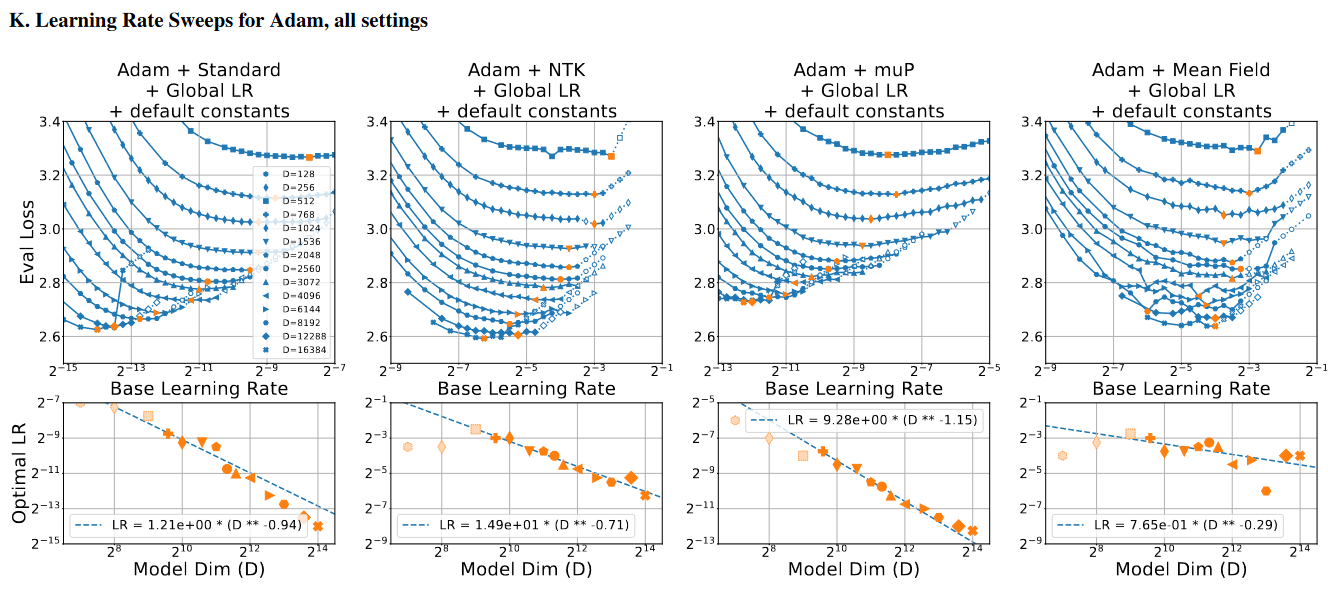

Main problem: LR Sweep Strategies

The other meat of the paper is in Section 4.2, the experiments.

-only experiments

“” refers to the empirically obtained base LR constant under the equation , also known as the +default experiments.

The paper sweeps this for 3x optimizers, 4x parameterizations, 14x widths, global vs per-layer , and of course unknown LR sweep counts.

Incidentally, this has an identical estimated cost to the epsilon variants.
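One way to see why the two estimates coincide is to count "lines" (one line being one LR sweep across widths for a fixed optimizer, parameterization and setting). This is a sketch using only the counts listed in this post, and it assumes every line spans all 14 widths with 15 LRs per width; the variable names are mine.

```python
LRS_PER_LINE = 15         # the eyeballed estimate from earlier
N_WIDTHS = 14
N_PARAMETERIZATIONS = 4

# +default LR sweeps: 3 optimizers x (global LR, per-layer LR)
default_lines = N_PARAMETERIZATIONS * 3 * 2
# Epsilon full sweeps: 4 variants for Adam, 2 variants for Adam+PS
epsilon_lines = N_PARAMETERIZATIONS * (4 + 2)
# Weight decay: a single base-LR sweep per parameterization
weight_decay_lines = N_PARAMETERIZATIONS * 1

default_runs = default_lines * N_WIDTHS * LRS_PER_LINE
epsilon_runs = epsilon_lines * N_WIDTHS * LRS_PER_LINE

print(f"+default: {default_lines} lines, {default_runs} runs")
print(f"epsilon:  {epsilon_lines} lines, {epsilon_runs} runs")
print(f"line ratio vs weight decay: {default_lines / weight_decay_lines:.1f}")
print(f"FLOPs ratio in headline table: {7.99e23 / 1.33e23:.2f}")
```

Both sections come out to 24 lines, so under the 15-LRs-per-line assumption they cost the same, and each is six times the weight decay sweep, which matches the ratio of the headline FLOPs figures.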

experiments

So, two issues.

- These experiments are “like” the -only experiments, but with 3x cases (GlobalLR, Perlayer-fullalign, Perlayer-noalign) instead of 2x (GlobalLR, Perlayer-fullalign).

- Specifically for , we have at least 800 extra runs, due to the 3D hparam search for .

We can combine those two as,

This is, once again, exceedingly close to that of the Adam heatmap experiments.

Sidenote: I may be understanding the per-layer aspect of the paper incorrectly; I expected the compute expenditure of this section to be larger.

Weight Decay

The WD experiments are simple enough. We repeat 4x parameterizations && do a single base-LR sweep on all

Incredibly cheap, I could afford that in some years.

Adafactor

As a reminder, I only count the first 11 , even though the report actually has 12 in one graph.

Compute Optimal

The paper states that,

The compute optimal experiments include models up to or , and the fixed (50,000) step experiments include models up to .

If you read the graphs in Appendix I, this is slightly wrong, because

-

50k experiments go to on Adafactor, and otherwise

-

all compute optimal experiments go up to only.

Note that a 4B param run requires 80B tokens by chinchilla, and C4 is less than 200B tokens, so they couldn’t have gone higher without changing the dataset.
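A quick check of that arithmetic, as a sketch: the 20 tokens per parameter ratio is the heuristic quoted earlier in this post, the 200B figure is the rough upper bound on C4 stated above, and the `6 * params * tokens` estimate is the usual rule of thumb that ignores attention. Function names are my own.

```python
TOKENS_PER_PARAM = 20               # heuristic quoted in this post
C4_TOKEN_BUDGET = 200_000_000_000   # rough upper bound on C4 size stated above


def compute_optimal_tokens(non_embedding_params: int) -> int:
    """Training tokens for a compute-optimal run under the 20x heuristic."""
    return TOKENS_PER_PARAM * non_embedding_params


def approx_train_flops(params: int, tokens: int) -> int:
    """Rule-of-thumb 6 * N * D training FLOPs (attention ignored)."""
    return 6 * params * tokens


params = 4_000_000_000
tokens = compute_optimal_tokens(params)
print(f"tokens needed: {tokens / 1e9:.0f}B")
print(f"approx FLOPs: {approx_train_flops(params, tokens):.2e}")
print(f"largest single-epoch model: {C4_TOKEN_BUDGET // TOKENS_PER_PARAM / 1e9:.0f}B params")
```

Since tokens scale with parameters here, the cost of a compute-optimal run grows roughly with the square of the parameter count, unlike the fixed-step runs where it grows linearly.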

This is honestly a bit complex, so let’s forgo the latex and just describe it in python:

Code summary

Here is the full script to get the estimates I created:

This gives the following:

In the grand scheme of things, 5.42e24 is “not that big”. After all, that’s not even 15% of the compute used for Llama 3; a 100k H100 cluster could accomplish all of these experiments in just 2 days.
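Both comparisons can be checked with a few lines. The 3.8e25 figure for Llama 3 405B pretraining compute is taken from the Llama 3 paper rather than from this post, and the cluster is assumed to sustain the same 3.5e14 FLOP/s per GPU used throughout; names are my own.

```python
TOTAL_FLOPS = 5.42e24          # headline total from this post
LLAMA3_405B_FLOPS = 3.8e25     # reported in the Llama 3 paper (outside this post)
EFFECTIVE_FLOPS = 3.5e14       # assumed sustained FLOP/s per H100, as above
N_GPUS = 100_000

fraction_of_llama3 = TOTAL_FLOPS / LLAMA3_405B_FLOPS
cluster_days = TOTAL_FLOPS / (N_GPUS * EFFECTIVE_FLOPS) / 86_400

print(f"fraction of Llama 3 compute: {fraction_of_llama3:.1%}")
print(f"days on a 100k H100 cluster: {cluster_days:.2f}")
```

The cluster estimate assumes perfect packing of more than ten thousand independent runs onto the cluster, so it is a lower bound on wall-clock time rather than a schedule.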