Title: xTower: A Multilingual LLM for Explaining and Correcting Translation Errors

Authors: Marcos Treviso, Nuno M. Guerreiro, Sweta Agrawal, Ricardo Rei, José Pombal, Tania Vaz, Helena Wu, Beatriz Silva, Daan van Stigt, André F. T. Martins

Published: 27th June 2024 (Thursday) @ 18:51:46

Link: http://arxiv.org/abs/2406.19482v1

Abstract

While machine translation (MT) systems are achieving increasingly strong performance on benchmarks, they often produce translations with errors and anomalies. Understanding these errors can potentially help improve the translation quality and user experience. This paper introduces xTower, an open large language model (LLM) built on top of TowerBase designed to provide free-text explanations for translation errors in order to guide the generation of a corrected translation. The quality of the explanations generated by xTower is assessed via both intrinsic and extrinsic evaluation. We ask expert translators to evaluate the quality of the explanations across two dimensions: relatedness towards the error span being explained and helpfulness in error understanding and improving translation quality. Extrinsically, we test xTower across various experimental setups in generating translation corrections, demonstrating significant improvements in translation quality. Our findings highlight xTower’s potential towards not only producing plausible and helpful explanations of automatic translations, but also leveraging them to suggest corrected translations.

Model Weights: https://huggingface.co/sardinelab/xTower13B

Overview

Why previous metrics and tools aren’t enough

- BLEU and neural metrics such as Comet and BLEURT provide only a single numerical score reflecting overall translation quality.

- Recent metrics like xComet (Guerreiro et al., 2023a) and AutoMQM (Fernandes et al., 2023) highlight error spans to justify their scores but do not offer explanations about the nature of these errors.

- InstructScore, a recent work by Xu et al. (2023), leverages large language models (LLMs) to provide a quality score conditioned on built-in error detection and explanations.

- InstructScore primarily functions as a reference-based metric, using explanations as a means to improve score estimates via meta-feedback/finetuning.

xTower explainer

- xTower: an LLM to produce high-quality explanations for translation errors and to utilize these explanations to suggest corrections through chain-of-thought prompting

- built on TowerBase 13B

- referenceless, unlike InstructScore

- agnostic to the source of error spans: human annotations or automatic tools

- automatic case: xComet (Guerreiro et al., 2023a)

- employ human evaluation to score explanations on two dimensions (relatedness and helpfulness)

Figure 1: Approach Overview

Illustration of our approach. In this example, the input consisting of a source and a translation is passed to xComet, which annotates the translation with error spans and produces a (discretized) quality score. The full input, marked translation, and quality score are passed to xTower, which, in turn, produces an explanation for each error span along with a final suggestion for a new, corrected translation.
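A minimal Python sketch of how the input in Figure 1 could be assembled before it reaches xTower. It assumes the error spans are already available as character offsets (from xComet or from human annotators); the tag format `<errorN severity="...">`, the score bins in `discretize`, and the prompt wording are hypothetical placeholders, not xTower's actual prompt template.

```python
from dataclasses import dataclass


@dataclass
class ErrorSpan:
    start: int      # character offset in the translation (inclusive)
    end: int        # character offset in the translation (exclusive)
    severity: str   # e.g. "minor" or "major"


def discretize(score: float) -> str:
    # Hypothetical bins for a 0-1 quality score; the real thresholds are not given here.
    if score >= 0.8:
        return "good"
    if score >= 0.6:
        return "moderate"
    return "bad"


def mark_spans(translation: str, spans: list[ErrorSpan]) -> str:
    # Number spans left to right (assumes they do not overlap), then insert the
    # tags right to left so that earlier character offsets stay valid.
    numbered = list(enumerate(sorted(spans, key=lambda s: s.start), start=1))
    marked = translation
    for idx, span in reversed(numbered):
        marked = (
            marked[: span.start]
            + f'<error{idx} severity="{span.severity}">'
            + marked[span.start : span.end]
            + f"</error{idx}>"
            + marked[span.end :]
        )
    return marked


def build_prompt(source: str, translation: str, spans: list[ErrorSpan], score: float) -> str:
    # Hypothetical layout: source, marked translation, discretized score, instruction.
    return (
        f"Source: {source}\n"
        f"Translation: {mark_spans(translation, spans)}\n"
        f"Quality: {discretize(score)}\n"
        "Explain each marked error, then propose a corrected translation."
    )


# "Katze" (cat) mistranslates "dog"; the span covers characters 4 to 9.
print(build_prompt("The dog sleeps", "Die Katze schläft", [ErrorSpan(4, 9, "major")], 0.55))
```

Inserting tags right to left is what keeps the offsets simple: inserting left to right would require shifting every later offset by the length of the tags already added.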

Contributions:

- Introduce xTower, a multilingual LLM that generates free-text explanations for translation errors and provides corrected translations.

- Conduct extensive human evaluations to assess the relatedness and helpfulness of xTower’s explanations, linking their results with dedicated qualitative analyses.

- Evaluate xTower’s corrected translations across multiple language pairs and experimental setups, showing significant improvements in translation quality.

Distillation Data

- use GPT-4 to generate explanations for samples annotated with MQM spans and to generate a final translation correction

- Dataset comprises samples from the WMT 2022 Metrics shared task (Freitag et al., 2022)

- English→German (en-de)

- English→Russian (en-ru)

- Chinese→English (zh-en)

- Each error span is annotated by humans according to the MQM framework, which includes a severity rating such as minor or major

Overall, our distillation dataset consists of 33,442 samples containing 63,188 human-annotated error spans.
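These totals imply just under two annotated error spans per sample on average (63,188 / 33,442 ≈ 1.89). The sketch below shows one hypothetical way to hold a distillation record and recompute that average; the class and field names are illustrative and are not taken from the released data.

```python
from dataclasses import dataclass, field


@dataclass
class MQMSpan:
    text: str       # the erroneous span in the translation
    severity: str   # MQM severity, e.g. "minor" or "major"


@dataclass
class DistillationSample:
    lang_pair: str                  # "en-de", "en-ru" or "zh-en"
    source: str
    translation: str
    spans: list[MQMSpan]            # human MQM annotations
    explanations: list[str] = field(default_factory=list)  # one GPT-4 explanation per span
    correction: str = ""            # GPT-4 corrected translation


def mean_spans_per_sample(samples: list[DistillationSample]) -> float:
    # Average number of annotated error spans per sample (samples must be non-empty).
    return sum(len(s.spans) for s in samples) / len(samples)


# Recomputing the average from the reported totals.
print(round(63188 / 33442, 2))  # 1.89
```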

Finetuning

- finetuned TowerBase 13B on a dataset of:

- GPT-4-generated explanations (the distillation data described in §3.1 of the paper)

- machine translation data from TowerBlocks (the dataset used to train TowerInstruct)

- combined all available data to train a single, multilingual model

- instead of training separate models for each language pair

- xTower can handle both referenceless and reference-based k-shot prompts because a mixed prompt setting (zero-shot and few-shot) was employed during training

- Our training hyperparameters and configuration follow those used to train TowerInstruct (Alves et al., 2024)
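The mixed prompt setting can be pictured as sampling, per training example, how many demonstrations to prepend and whether to include a reference. The sketch below is hypothetical: the prompt fields, the `k_max` and `p_reference` parameters, and the assumption that reference inclusion is randomized alongside the number of shots are illustrative, not the paper's exact recipe.

```python
import random


def make_prompt(source, translation, reference=None, demos=()):
    # demos: (source, translation, explanation) triples; empty means zero-shot.
    parts = [f"Source: {s}\nTranslation: {t}\nExplanation: {e}\n" for s, t, e in demos]
    query = f"Source: {source}\nTranslation: {translation}\n"
    if reference is not None:  # reference-based variant
        query += f"Reference: {reference}\n"
    parts.append(query + "Explanation:")
    return "\n".join(parts)


def sample_training_prompt(example, pool, rng, k_max=3, p_reference=0.5):
    # example: dict with "source", "translation" and optionally "reference".
    # pool: list of demonstration triples to draw few-shot examples from.
    k = rng.randint(0, min(k_max, len(pool)))  # k == 0 gives a zero-shot prompt
    demos = rng.sample(pool, k)
    use_ref = "reference" in example and rng.random() < p_reference
    return make_prompt(
        example["source"],
        example["translation"],
        example["reference"] if use_ref else None,
        demos,
    )
```

Training on a mix of these variants is what lets a single model accept prompts with or without references and with any small number of demonstrations at inference time.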

Evaluation / Results

The evaluation comprises the following two dimensions:

- Relatedness: The extent to which the explanation is related to the content of the error span.

- Helpfulness: The extent to which the explanation helps in understanding the nature of the error and in guiding towards a translation correction.

Relatedness

- Collected relatedness ratings from humans at both the document level and the explanation level

- Used the Direct Assessment + Scalar Quality Metric (DA+SQM) scheme (Kocmi et al., 2022): relatedness rated on a Likert scale from 0 (low) to 6 (high)

- Used Upwork to recruit the human evaluators

- Inter-annotator agreement is moderate, with Spearman correlations between human ratings ranging from 0.37 to 0.51:

- 0.51 (en-de) and 0.40 (zh-en) at the explanation level

- 0.50 (en-de) and 0.37 (zh-en) at the document level

- With human-annotated error spans, the overall relatedness scores are around 4.3

- With xComet spans, the scores drop to around 3.2

This difference indicates that the quality of error spans heavily impacts the quality of their explanations.

Nonetheless, in both cases human ratings fall in the 3-5 range, indicating that xTower’s explanations are mostly related to the error spans.

Relatedness decreases as the number of xComet spans grows, but not as the number of human spans grows (see Figure 2), suggesting that xComet might overpredict error spans.

Figure 2: Relatedness by number of xComet or Human spans
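A breakdown like Figure 2 amounts to grouping relatedness ratings by the number of error spans in each sample and averaging each group. A minimal sketch, assuming hypothetical `(num_spans, rating)` pairs and pooling large counts into a single `cap+` bucket (the cap value is arbitrary):

```python
from collections import defaultdict
from statistics import mean


def relatedness_by_span_count(records, cap=4):
    # records: iterable of (num_spans, relatedness_rating) pairs.
    buckets = defaultdict(list)
    for num_spans, rating in records:
        key = num_spans if num_spans < cap else cap  # pool all counts >= cap
        buckets[key].append(rating)
    # Label buckets "1", "2", ..., f"{cap}+" in ascending order of span count.
    return {
        (str(k) if k < cap else f"{k}+"): mean(v)
        for k, v in sorted(buckets.items())
    }
```

Running it once on ratings for xComet spans and once on ratings for human spans gives the two curves to compare.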

Helpfulness

The same Likert scale is used for two questions:

- Q1: How helpful is the explanation in improving the understanding of the nature of the error?

- Q2: How helpful is the explanation in guiding towards writing a better translation?

Results / Take-home Messages

Is xTower effective at refining translations?

- xTower obtains similar results with human-annotated and xComet error spans

- With xComet spans, Comet deltas range from 1 to 3 points, yielding significant quality improvements for en-de and zh-en
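The Comet delta is the difference between the mean segment-level Comet score of the refined translations and that of the originals. These notes do not say which significance test was used; the sketch below assumes paired bootstrap resampling (Koehn, 2004), a common choice in MT evaluation, with scores on a 0-100 scale.

```python
import random
from statistics import mean


def comet_delta(original, refined):
    # Positive values mean the refined translations score higher on average.
    return mean(refined) - mean(original)


def paired_bootstrap_p(original, refined, n_resamples=1000, seed=0):
    # Resample segment indices with replacement (the same indices for both
    # systems) and count how often the refined system fails to beat the original.
    rng = random.Random(seed)
    n = len(original)
    failures = 0
    for _ in range(n_resamples):
        idx = [rng.randrange(n) for _ in range(n)]
        if sum(refined[i] for i in idx) <= sum(original[i] for i in idx):
            failures += 1
    return failures / n_resamples  # approximate p-value for "refined > original"
```

Pairing the resamples matters: both systems are scored on the same segments in each draw, so segment difficulty cancels out of the comparison.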

How does xTower compare to prompting LLMs?

Comparing the best scores obtained by xTower (with either xComet or human spans) against TowerInstruct: xTower outperforms TowerInstruct on he-en and zh-en, with a delta of 9 Comet points on he-en

- Mixtral obtains the lowest scores overall

- GPT-3.5T achieves the highest scores overall, outperforming xTower on all language pairs in terms of BLEURT and Comet

- GPT-3.5T shows a consistent drop in performance when refining translations, suggesting that it may not use error spans and explanations as effectively.

Are the error spans being fixed?

To assess how effectively the models address the errors highlighted in the prompt, we computed the percentage of fixed error spans with a string matching approach.

- Overall, xTower fixes 80% of the errors for en-de, 83% for he-en, and 84% for zh-en, while GPT-3.5T fixes 75% of the errors for en-de, 82% for he-en, and 80% for zh-en

- These results indicate that both GPT-3.5T and xTower can, to some degree, leverage error spans and explanations to fix a large portion of the errors, with xTower showing a consistent edge over GPT-3.5T.
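One plausible reading of the string-matching approach, sketched here as an assumption rather than the paper's exact rule: an error span counts as fixed when its exact text no longer appears in the corrected translation, and the fix rate is the percentage of spans that meet this test.

```python
def is_fixed(span_text: str, corrected: str) -> bool:
    # Assumed rule: the span is fixed if its exact text is absent from the correction.
    return span_text not in corrected


def fix_rate(items) -> float:
    # items: iterable of (list_of_error_span_texts, corrected_translation) pairs.
    total = fixed = 0
    for spans, corrected in items:
        for span_text in spans:
            total += 1
            fixed += is_fixed(span_text, corrected)
    return 100 * fixed / total if total else 0.0


items = [
    (["Hund"], "Die Katze schläft"),          # "Hund" removed -> fixed
    (["ist", "gut"], "Das ist schön"),         # "ist" kept, "gut" removed
]
print(fix_rate(items))
```

Exact substring matching is a coarse proxy: a very short span (a single article, say) may legitimately reappear elsewhere in a correct sentence and be counted as unfixed, while a span rewritten into a different error would be counted as fixed.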

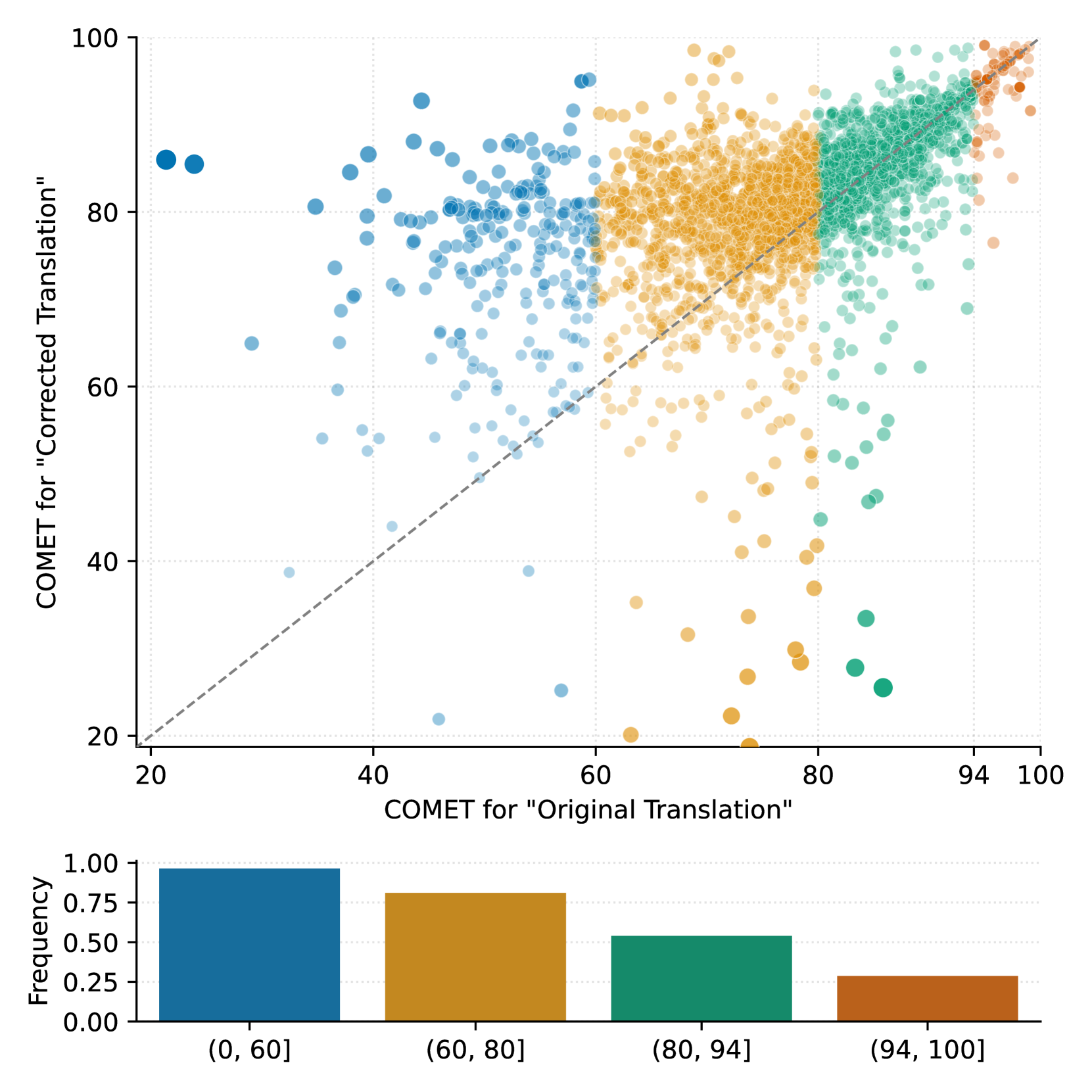

Hybrid approach, as demonstrated in Figure 3

Propose a hybrid approach that uses CometKiwi (IST-Unbabel 2022 submission to the Quality Estimation Shared Task), a referenceless quality estimation model, to decide which translation to keep:

- If the original translation's quality is above some threshold, keep the original

- Else, if quality(correction) > quality(original), use the correction

- Otherwise, keep the original
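The selection rule maps directly onto a few lines of code. In this sketch `qe` is a hypothetical stand-in for CometKiwi: any callable that scores a (source, translation) pair without a reference, higher being better; the threshold value is left to the caller.

```python
from typing import Callable

QEScorer = Callable[[str, str], float]  # (source, translation) -> quality score


def hybrid_select(source: str, original: str, correction: str,
                  qe: QEScorer, threshold: float) -> str:
    q_original = qe(source, original)
    if q_original > threshold:               # original already good enough: keep it
        return original
    if qe(source, correction) > q_original:  # correction judged better
        return correction
    return original                          # otherwise fall back to the original
```

With the `unbabel-comet` package, `qe` could wrap a CometKiwi checkpoint (for example `Unbabel/wmt22-cometkiwi-da` on the Hugging Face Hub); that wiring is omitted here. Keeping the original whenever it clears the threshold guards against unnecessary edits to translations that were already good.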

Figure 3: Comet original vs Comet post-edited

Translations are mostly improved, especially those with low initial quality.

Figure 4: Comet vs relatedness

- weak negative Pearson correlation (r = −0.15) between the explanations’ relatedness and the original translations’ Comet scores

- i.e., higher-quality (more related) explanations tend to accompany poorer-quality original translations, although the association is weak