Title: How Should We Extract Discrete Audio Tokens from Self-Supervised Models?

Authors: Pooneh Mousavi, Jarod Duret, Salah Zaiem, Luca Della Libera, Artem Ploujnikov, Cem Subakan, Mirco Ravanelli

Published: 15th June 2024 (Saturday) @ 20:43:07

Link: http://arxiv.org/abs/2406.10735v1

Abstract

Discrete audio tokens have recently gained attention for their potential to bridge the gap between audio and language processing. Ideal audio tokens must preserve content, paralinguistic elements, speaker identity, and many other audio details. Current audio tokenization methods fall into two categories: Semantic tokens, acquired through quantization of Self-Supervised Learning (SSL) models, and Neural compression-based tokens (codecs). Although previous studies have benchmarked codec models to identify optimal configurations, the ideal setup for quantizing pretrained SSL models remains unclear. This paper explores the optimal configuration of semantic tokens across discriminative and generative tasks. We propose a scalable solution to train a universal vocoder across multiple SSL layers. Furthermore, an attention mechanism is employed to identify task-specific influential layers, enhancing the adaptability and performance of semantic tokens in diverse audio applications.

Overview

This paper addresses the gap identified in the abstract (the unclear ideal setup for quantizing pretrained SSL models) by evaluating the effects of the different heuristics required to derive semantic tokens on several discriminative and generative tasks, including speech recognition, speaker recognition, emotion classification, speech enhancement, and text-to-speech.

We investigate various crucial aspects, including:

- the impact of the number of k-means clusters

- the selection of the intermediate layer of the SSL model to discretise

- the SSL model to use: they experiment with WavLM Large and HuBERT Large (both with 24 layers)

Layer choice was found to be crucial and task-dependent: early layers capture low-level information, while higher layers encode content and semantic nuances.

Common strategies include using the middle layer, as in:

- Speech Resynthesis from Discrete Disentangled Self-Supervised Representations

- SELM: Speech Enhancement Using Discrete Tokens and Language Models

…or leveraging the last layer: Exploration of Efficient End-to-End ASR using Discretized Input from Self-Supervised Learning
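Both knobs above, the number of clusters and the layer to discretise, enter at the same step: fit k-means on the frame-level features of one SSL layer and replace every frame by the index of its nearest centroid. The sketch below assumes plain Lloyd's k-means in pure Python; two random 2-D blobs stand in for real 1024-dimensional WavLM/HuBERT Large features, and `kmeans` and `tokenize` are hypothetical helpers, not the paper's code.

```python
import random

def sq_dist(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def nearest(centroids, v):
    """Index of the closest centroid; this index is the discrete token."""
    return min(range(len(centroids)), key=lambda k: sq_dist(centroids[k], v))

def kmeans(frames, n_clusters, n_iters=20, seed=0):
    """Plain Lloyd's k-means over frame-level features of ONE SSL layer."""
    rng = random.Random(seed)
    centroids = [list(f) for f in rng.sample(frames, n_clusters)]
    for _ in range(n_iters):
        # Assignment step: group frames by their nearest centroid.
        groups = [[] for _ in range(n_clusters)]
        for f in frames:
            groups[nearest(centroids, f)].append(f)
        # Update step: move each centroid to the mean of its group.
        for k, g in enumerate(groups):
            if g:  # an empty cluster keeps its previous centroid
                centroids[k] = [sum(col) / len(g) for col in zip(*g)]
    return centroids

def tokenize(frames, centroids):
    """Map a sequence of layer features to a sequence of token ids."""
    return [nearest(centroids, f) for f in frames]

# Toy stand-in for one layer's output: two well-separated blobs of 2-D frames.
rng = random.Random(1)
frames = [[rng.gauss(0, 0.1), rng.gauss(0, 0.1)] for _ in range(50)]
frames += [[rng.gauss(5, 0.1), rng.gauss(5, 0.1)] for _ in range(50)]
centroids = kmeans(frames, n_clusters=2)
tokens = tokenize(frames, centroids)
print(tokens[:5], tokens[-5:])
```

With real features, `n_clusters` would be 1000 or 2000 as in the paper's comparison; clustering all layers, as the informed layer selection mechanism requires, means fitting one such model per layer.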

Informed layer selection mechanism via attention

Instead of relying on a single layer, which carries only partial information, they introduce an informed layer selection mechanism: all layers are clustered, and their information is injected into the acoustic model through learnable attention weights. This approach significantly boosts performance while also providing valuable insights into the importance of each layer.

- Combine the token (cluster) embeddings across layers via an attention mechanism whose weights vary over time (frame by frame)

- Simple yet effective approach

- Interpretable: the attention weights indicate each layer's importance for a given task
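To make the time-varying fusion concrete, here is a minimal single-frame sketch. It assumes each layer is scored by a dot product between its token embedding and one learnable query vector, followed by a softmax over layers; the paper's actual scoring network may differ, and `fuse_layers`, `tables` and `query` are hypothetical names. Because the scores depend on the tokens present at each frame, the weights change over time.

```python
import math
import random

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def fuse_layers(token_ids, tables, query):
    """Fuse per-layer token embeddings for ONE frame.

    token_ids: one token id per layer (output of that layer's k-means)
    tables:    one embedding table per layer, tables[l][token] -> D-dim vector
    query:     hypothetical learnable D-dim vector used to score each layer
    Returns the fused D-dim vector and the per-layer attention weights.
    """
    embs = [tables[l][tok] for l, tok in enumerate(token_ids)]
    scores = [sum(q * x for q, x in zip(query, e)) for e in embs]
    weights = softmax(scores)
    fused = [sum(w * e[d] for w, e in zip(weights, embs)) for d in range(len(query))]
    return fused, weights

# Toy setup: 3 layers, 4 clusters per layer, embedding size D = 2.
rng = random.Random(0)
n_layers, n_clusters, dim = 3, 4, 2
tables = [[[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(n_clusters)]
          for _ in range(n_layers)]
query = [rng.uniform(-1, 1) for _ in range(dim)]

# Two frames with different tokens get different layer weights.
for frame_tokens in ([0, 1, 2], [3, 3, 0]):
    fused, weights = fuse_layers(frame_tokens, tables, query)
    print([round(w, 3) for w in weights], [round(v, 3) for v in fused])
```

In the setup described under Acoustic Model, the embedding tables, the attention parameters and the downstream model are trained jointly; here they are random, so only the mechanics are shown.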

Scalable Vocoder: Layer-agnostic Semantic Token Vocoder via Layer Dropout

Unlike codecs such as EnCodec and SoundStream, which come with built-in decoders, semantic tokens have no decoder of their own, so a vocoder that converts the semantic tokens into audio must be trained [26,27].

Training such a vocoder is computationally demanding, which makes it impractical to train a separate vocoder for each layer or combination of layers.

They propose a novel scalable vocoder capable of operating with various layer combinations at no additional cost. This is achieved through a layer dropout training scheme, inspired by the bitrate scalability mechanism used in SoundStream.

It works as follows:

- During training, randomly sample layers from the available range of layers

- Combine the sampled layers with attention, which keeps the input dimensionality constant regardless of how many layers were sampled

- At inference time, the user can specify the number of layers to use
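A minimal sketch of layer dropout, assuming that every training step keeps a random non-empty subset of layers (these notes do not say whether the paper samples arbitrary subsets or contiguous ranges) and that layers are fused with a simple dot-product attention, which is also an assumption. The vocoder itself is omitted; `sample_layers`, `fuse` and the six-layer setup are hypothetical. The point is that the fused vector has the same size whether one layer or all of them survive, so one vocoder can serve every layer combination.

```python
import math
import random

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def fuse(embs, query):
    """Attention-weighted sum of however many layer embeddings are passed in."""
    weights = softmax([sum(q * x for q, x in zip(query, e)) for e in embs])
    return [sum(w * e[d] for w, e in zip(weights, embs)) for d in range(len(query))]

def sample_layers(available, rng):
    """Layer dropout for one training step: keep a random, non-empty subset."""
    k = rng.randint(1, len(available))  # how many layers survive this step
    return sorted(rng.sample(available, k))

rng = random.Random(0)
available = list(range(6))  # hypothetical: six tokenized layers
dim = 4
query = [rng.uniform(-1, 1) for _ in range(dim)]
# One token embedding per layer for the current frame (random stand-ins).
frame_embs = {l: [rng.uniform(-1, 1) for _ in range(dim)] for l in available}

for step in range(3):  # training: a different layer subset at every step
    kept = sample_layers(available, rng)
    x = fuse([frame_embs[l] for l in kept], query)
    print(step, kept, len(x))  # len(x) == dim however many layers were kept

# Inference: the user chooses the layers; the vocoder input size is unchanged.
x = fuse([frame_embs[l] for l in (0, 2)], query)
print(len(x))
```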

Results show that the scalable vocoder outperforms every vocoder trained on a single specific layer. Finally, for a comprehensive comparison, we provide experimental evidence using both in-domain and out-of-domain datasets for training k-means.

Acoustic Model

- Train a model for multiple downstream tasks

- Discriminative: ASR, speaker identification, and emotion recognition

- Generative: text-to-speech; speech enhancement

- Jointly train the speech token embeddings, attention weights, and acoustic model

Question: How is this model trained to perform all downstream tasks jointly?

Evaluation

Discriminative:

- ASR: WER

- Speaker Identification (SID): accuracy

  - ECAPA-TDNN model [34] on VoxCeleb1 [35]

- Emotion Recognition (ER): accuracy

  - ECAPA-TDNN for emotion recognition [36] on the IEMOCAP dataset

  - Predict one of the four considered classes: {happy, sad, angry, neutral}

Generative Tasks:

- Speech enhancement (intelligibility): differential word error rate (dWER), which measures the WER between the transcribed enhanced signal and the transcribed target signal

- Text-to-speech (speech quality): UTMOS

  - UTMOS is UTokyo-SaruLab’s system submission to the VoiceMOS Challenge 2022
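Because dWER compares two ASR transcripts rather than a transcript against ground-truth text, it is ordinary WER with the clean target's transcript playing the role of the reference. A minimal sketch, assuming whitespace-tokenized transcripts and that the ASR step has already run (the ASR system is not modeled, and the helper names are hypothetical):

```python
def word_errors(ref, hyp):
    """Word-level Levenshtein distance (substitutions + deletions + insertions)."""
    r, h = ref.split(), hyp.split()
    prev = list(range(len(h) + 1))  # distances for an empty reference prefix
    for i in range(1, len(r) + 1):
        cur = [i] + [0] * len(h)
        for j in range(1, len(h) + 1):
            sub = prev[j - 1] + (r[i - 1] != h[j - 1])  # match or substitution
            cur[j] = min(sub, prev[j] + 1, cur[j - 1] + 1)  # vs deletion, insertion
        prev = cur
    return prev[len(h)]

def wer(ref, hyp):
    """Word error rate in percent: edit distance / number of reference words."""
    return 100.0 * word_errors(ref, hyp) / len(ref.split())

def dwer(transcript_of_target, transcript_of_enhanced):
    """dWER: WER of the enhanced signal's transcript against the target's transcript.

    Both arguments are ASR outputs; no ground-truth text is involved.
    """
    return wer(transcript_of_target, transcript_of_enhanced)

print(wer("the cat sat on the mat", "the cat sat on a mat"))
print(dwer("hello world", "hello world"))
```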

Results (Take-home messages)

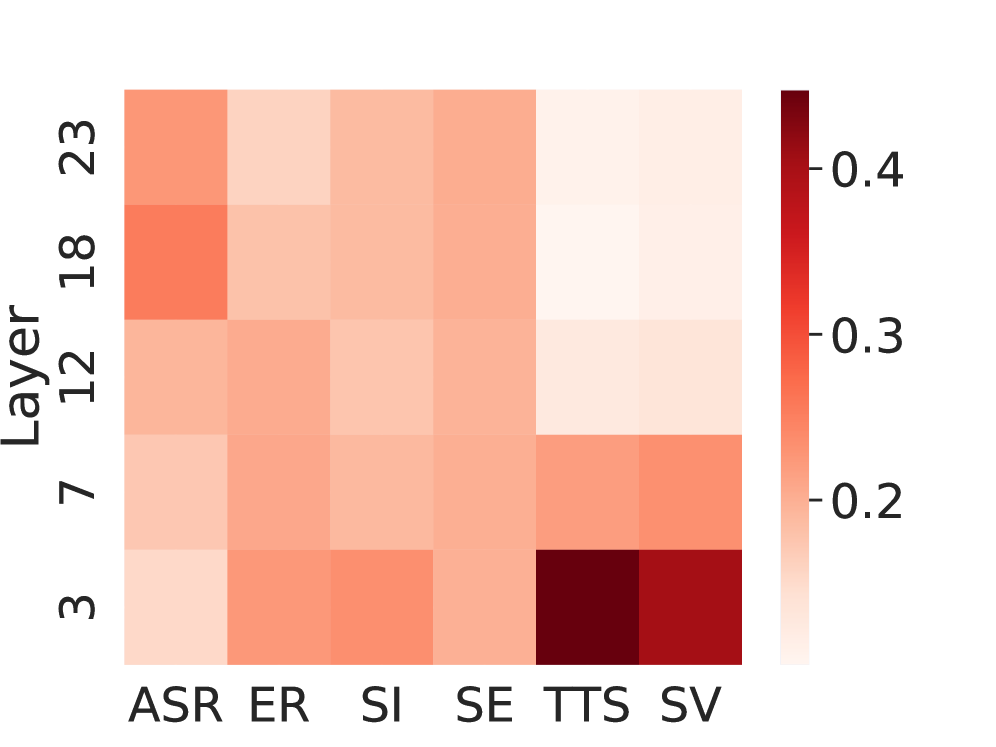

Layer weights computed by attention are telling ✨

- Lower-level layers / “acoustic” features are used more by TTS and the scalable vocoder (SV), which prioritize effective reconstruction

- Upper-level layers / “semantic” features are used more by ASR

- Emotion recognition (ER) and Speaker Identification (SID): 3rd layer receives the highest weight (but weights are more even across layers)

- These findings align with the pattern observed for continuous representations [45].

- For speech enhancement (SE), all layers are weighted equally, indicating that all hierarchical levels are needed to achieve optimal audio quality while preserving the semantic content of the input.

Fig. 3 depicts the average weights assigned to different layers in the WavLM model across various downstream tasks on the test dataset.
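Fig. 3 reduces frame-level, time-varying attention weights to a single importance value per layer. One way to do that is sketched below under the assumption that every test frame counts equally (the paper may instead average per utterance first); `average_layer_weights` and the toy weights are hypothetical.

```python
def average_layer_weights(utterances):
    """Average per-frame attention weights into one weight per layer.

    utterances: list of utterances; each is a list of frames; each frame is a
    list of per-layer weights summing to 1. All frames are pooled equally, so
    long utterances contribute more than short ones.
    """
    frames = [w for utt in utterances for w in utt]
    n_layers = len(frames[0])
    return [sum(f[l] for f in frames) / len(frames) for l in range(n_layers)]

# Two toy utterances, three layers each.
utts = [
    [[0.6, 0.3, 0.1], [0.8, 0.1, 0.1]],
    [[0.4, 0.4, 0.2]],
]
avg = average_layer_weights(utts)
print([round(a, 3) for a in avg])
```

Since each frame's weights sum to 1, the averaged weights also sum to 1 and can be plotted directly as per-layer importance.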

Number of Clusters (more clusters benefit ASR and ER)

ASR and emotion recognition (ER) benefit from more clusters (lower WER, higher accuracy). For all other tasks, including speaker ID, using 1000 vs. 2000 clusters (tokens) makes little difference.
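One way to read the 1000 vs. 2000 comparison is in bits: a token drawn from K clusters carries at most log2(K) bits, so doubling the vocabulary adds exactly one bit per token. A small calculation, assuming the 50 Hz frame rate (20 ms hop) of HuBERT and WavLM features and one tokenized layer; `token_bitrate` is a hypothetical helper, and these figures are derived rather than taken from the paper.

```python
import math

def token_bitrate(n_clusters, frame_rate_hz=50.0, n_layers=1):
    """Upper-bound bitrate (bits/s) of a stream of k-means token ids.

    Each token carries log2(n_clusters) bits; 50 Hz matches the 20 ms frame
    hop of HuBERT/WavLM; n_layers counts how many layers are tokenized.
    """
    return frame_rate_hz * n_layers * math.log2(n_clusters)

for k in (1000, 2000):
    print(k, round(token_bitrate(k)))
```

This gives roughly 498 bit/s for 1000 clusters and 548 bit/s for 2000 clusters per layer, so the larger vocabulary costs only 50 bit/s per layer.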

Embedding Initializations (Random vs Centroid)

- Discriminative tasks benefit from trainable embeddings for the speech tokens.

- For generative tasks (speech enhancement and text-to-speech), the initialization makes little difference or the results are ambiguous

They compared:

- Random initialization of the embedding layer

- Initialization with the corresponding k-means centroids, kept frozen ❄️

- Initialization with the corresponding k-means centroids, kept trainable 🏋️♂️
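The three settings differ only in how the token-embedding table starts and whether it receives gradient updates. A minimal sketch in pure Python, assuming the random table uses the same dimension as the centroids and that a frozen table simply ignores gradients; `make_embedding` and `sgd_step` are hypothetical helpers, and a real model would use its framework's embedding layer instead.

```python
import random

def make_embedding(centroids, mode, rng):
    """Build a token-embedding table for one of the three compared settings.

    mode "random":          random init, trainable
    mode "centroid_train":  init from k-means centroids, trainable
    mode "centroid_frozen": init from k-means centroids, frozen
    Returns (table, trainable).
    """
    if mode == "random":
        dim = len(centroids[0])  # reuse the centroid size for comparability
        table = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in centroids]
        return table, True
    if mode not in ("centroid_train", "centroid_frozen"):
        raise ValueError(f"unknown mode: {mode}")
    table = [list(c) for c in centroids]  # copy so the k-means model stays intact
    return table, mode == "centroid_train"

def sgd_step(table, trainable, token, grad, lr=0.1):
    """Update one token's embedding with a gradient, unless the table is frozen."""
    if trainable:
        table[token] = [w - lr * g for w, g in zip(table[token], grad)]

rng = random.Random(0)
centroids = [[0.0, 1.0], [2.0, 3.0]]  # toy k-means centroids (K=2, D=2)
for mode in ("random", "centroid_train", "centroid_frozen"):
    table, trainable = make_embedding(centroids, mode, rng)
    before = list(table[1])
    sgd_step(table, trainable, token=1, grad=[1.0, 1.0])
    print(mode, trainable, before, "->", table[1])
```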

| Setting | ASR (EN) | ASR (FR) | SID | ER | SE | SE | TTS | TTS |
|---|---|---|---|---|---|---|---|---|
| Metric | WER | WER | Accuracy | Accuracy | DNSMOS | dWER | UTMOS | WER |
| Effect of Number of Clusters | | | | | | | | |
| Effect of Embedding Initialization | | | | | | | | |
| Random | | | 81.0 | 67.2 | 3.93 | 6.75 | | |
| Pretrained & fine-tuned | | | 77.5 | 63.9 | 3.93 | 6.82 | | |
| Pretrained & frozen | | | 73.1 | 67.0 | 3.93 | 6.98 | | |

In-domain vs Out-of-domain

Discriminative tasks:

- In-domain: k-means models are trained using the same dataset employed for training acoustic models.

- Out-of-domain: k-means models are trained on LibriSpeech (train-clean-100, train-clean-360, and train-other-500).

Generative tasks:

- In-domain: k-means models are trained on LJSpeech

- Out-of-domain: k-means trained on LibriSpeech-960h

For all discriminative tasks, the in-domain tokenizer outperforms its OOD counterpart.

In all generative tasks, training the model using the OOD tokenizer does not adversely affect performance and, in some instances, even improves the results.

We speculate that this trend may arise because generative tasks primarily depend on tokens capturing low-level information, which tends to be more “universal” and transferable across different domains.

Critique: Training k-means on 960 hours of LibriSpeech vs. about 24 hours of LJSpeech is an unfair comparison.

| SSL Model | Tokenizer | SE | SE | TTS | TTS | Vocoder | Vocoder |
|---|---|---|---|---|---|---|---|
| Metric | | DNSMOS | dWER | UTMOS | WER | UTMOS | dWER |
| HuBERT Large [4] | | | | | | | |
| WavLM Large [3] | | | | | | | |

Misc. Notes

- They implement their experiments in SpeechBrain (created by co-author Mirco Ravanelli).

- Task types:

  - Discriminative: involves transcription and classification

  - Generative: involves producing audio

- Question: In Table 2, they show a TTS column as distinct from the Vocoder column. What does this TTS column report results for? Performance of the TTS (transformer) acoustic model they trained, described in §3.2?

  - Answer: The TTS columns (penultimate pair) report the trained TTS model, while the Vocoder columns (final pair) report the vocoder trained in the LJSpeech vs. LibriSpeech settings: “As shown in Table 2 (last column), we do not observe any significant performance degradation when using the scalable vocoder in an out-of-domain condition.”

Critiques

- Their “out-of-domain” setting for the generative tasks is LJSpeech vs. LibriSpeech, but both come from essentially the same domain: audiobooks from the LibriVox project.

- LJSpeech contains a single speaker, so their in-domain setting is very narrowly in-domain.