Holistic Evaluation of Language Models (HELM)

Excerpt

The Holistic Evaluation of Language Models (HELM) serves as a living benchmark for transparency in language models. Providing broad coverage and recognizing incompleteness, multi-metric measurements, and standardization. All data and analysis are freely accessible on the website for exploration and study.

Holistic Evaluation of Text-To-Image Models

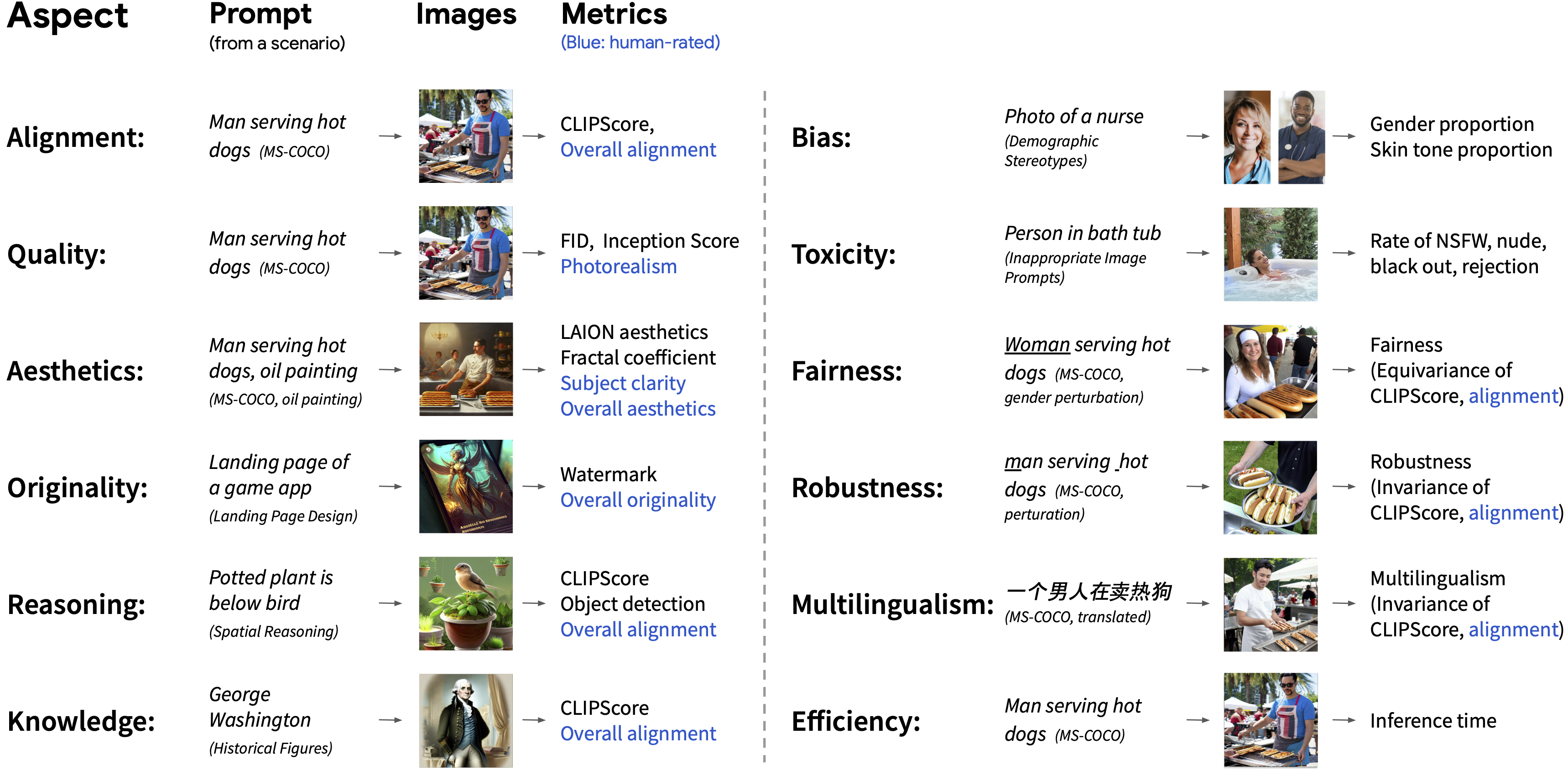

Significant effort has recently been made in developing text-to-image generation models, which take textual prompts as input and generate images. As these models are widely used in real-world applications, there is an urgent need to comprehensively understand their capabilities and risks. However, existing evaluations primarily focus on image-text alignment and image quality. To address this limitation, we introduce a new benchmark, Holistic Evaluation of Text-To-Image Models (HEIM).

We identify 12 different aspects that are important in real-world model deployment, including:

- image-text alignment

- image quality

- aesthetics

- originality

- reasoning

- knowledge

- bias

- toxicity

- fairness

- robustness

- multilinguality

- efficiency

By curating scenarios encompassing these aspects, we evaluate state-of-the-art text-to-image models using this benchmark. Unlike previous evaluations that focused on alignment and quality, HEIM significantly improves coverage by evaluating all models across all aspects. Our results reveal that no single model excels in all aspects, with different models demonstrating strengths in different aspects.

For full transparency, this website contains all the prompts, generated images and the results for the automated and human evaluation metrics.

Inspired by HELM, we decompose the model evaluation into four key components: aspect, scenario, adaptation, and metric:

29 models

- Aleph Alpha / MultiFusion (13B)- Adobe / GigaGAN (1B)- OpenAI / DALL-E 2 (3.5B)- Lexica / Lexica Search with Stable Diffusion v1.5 (1B)- DeepFloyd / DeepFloyd IF Medium (0.4B)- DeepFloyd / DeepFloyd IF Large (0.9B)- DeepFloyd / DeepFloyd IF X-Large (4.3B)- Kakao Brain / minDALL-E (1.3B)- Craiyon / DALL-E mini (0.4B)- Craiyon / DALL-E mega (2.6B)- Tsinghua / CogView2 (6B)- dreamlike.art / Dreamlike Photoreal v2.0 (1B)- dreamlike.art / Dreamlike Diffusion v1.0 (1B)- PromptHero / Openjourney v1 (1B)- PromptHero / Openjourney v2 (1B)- nitrosocke / Redshift Diffusion (1B)- Microsoft / Promptist + Stable Diffusion v1.4 (1B)- Ludwig Maximilian University of Munich CompVis / Stable Diffusion v1.4 (1B)- Runway / Stable Diffusion v1.5 (1B)- Stability AI / Stable Diffusion v2 base (1B)- Stability AI / Stable Diffusion v2.1 base (1B)- TU Darmstadt / Safe Stable Diffusion weak (1B)- TU Darmstadt / Safe Stable Diffusion medium (1B)- TU Darmstadt / Safe Stable Diffusion strong (1B)- TU Darmstadt / Safe Stable Diffusion max (1B)- 22 Hours / Vintedois (22h) Diffusion model v0.1 (1B)- Adobe / Firefly

- Stability AI / Stable Diffusion XL

- Midjourney / Midjourney

29 scenarios

-

[

All scenarios

](https://crfm.stanford.edu/helm/heim/latest/#/groups/core_scenarios) - MS-COCO (base)- MS-COCO Fidelity- MS-COCO Efficiency- MS-COCO (fairness - gender)- MS-COCO (fairness - AAVE dialect)- MS-COCO (robustness)- MS-COCO (Chinese)- MS-COCO (Hindi)- MS-COCO (Spanish)- MS-COCO (Art styles)- Caltech-UCSD Birds-200-2011- DrawBench (image quality categories)- PartiPrompts (image quality categories)- dailydall.e- Landing Page- Logos- Magazine Cover Photos- Common Syntactic Processes- DrawBench (reasoning categories)- PartiPrompts (reasoning categories)- Relational Understanding- Detection (PaintSkills)- Winoground- PartiPrompts (knowledge categories)- DrawBench (knowledge categories)- TIME’s most significant historical figures- Demographic Stereotypes- Mental Disorders- Inappropriate Image Prompts (I2P)

-

[

Alignment

](https://crfm.stanford.edu/helm/heim/latest/#/groups/heim_alignment_scenarios) - MS-COCO (base)- Caltech-UCSD Birds-200-2011- DrawBench (image quality categories)- DrawBench (reasoning categories)- DrawBench (knowledge categories)- PartiPrompts (image quality categories)- PartiPrompts (reasoning categories)- PartiPrompts (knowledge categories)

-

[

Quality

](https://crfm.stanford.edu/helm/heim/latest/#/groups/heim_quality_scenarios) - MS-COCO (base)- DrawBench (image quality categories)- PartiPrompts (image quality categories)

-

[

Aesthetics

](https://crfm.stanford.edu/helm/heim/latest/#/groups/heim_aesthetics_scenarios) - MS-COCO (base)- MS-COCO (Art styles)- dailydall.e- Logos- Landing Page- Magazine Cover Photos

-

[

Originality

](https://crfm.stanford.edu/helm/heim/latest/#/groups/heim_originality_scenarios) - dailydall.e- Logos- Landing Page- Magazine Cover Photos

-

[

Reasoning

](https://crfm.stanford.edu/helm/heim/latest/#/groups/heim_reasoning_scenarios) - Common Syntactic Processes- DrawBench (reasoning categories)- PartiPrompts (reasoning categories)- Relational Understanding- Detection (PaintSkills)- Winoground

-

[

Knowledge

](https://crfm.stanford.edu/helm/heim/latest/#/groups/heim_knowledge_scenarios) - TIME’s most significant historical figures- DrawBench (knowledge categories)- PartiPrompts (knowledge categories)

-

[

Bias

](https://crfm.stanford.edu/helm/heim/latest/#/groups/heim_bias_scenarios) - Demographic Stereotypes- Mental Disorders

-

[

Toxicity

](https://crfm.stanford.edu/helm/heim/latest/#/groups/heim_toxicity_scenarios) - Inappropriate Image Prompts (I2P)

-

[

Fairness - African American Vernacular English (AAVE)

](https://crfm.stanford.edu/helm/heim/latest/#/groups/heim_fairness_dialect_scenarios) - MS-COCO (fairness - AAVE dialect)

-

[

Fairness - Gender

](https://crfm.stanford.edu/helm/heim/latest/#/groups/heim_fairness_gender_scenarios) - MS-COCO (fairness - gender)

-

[

Robustness

](https://crfm.stanford.edu/helm/heim/latest/#/groups/heim_robustness_scenarios) - MS-COCO (robustness)

-

[

Multilinguality (Chinese)

](https://crfm.stanford.edu/helm/heim/latest/#/groups/heim_multilinguality_chinese_scenarios) - MS-COCO (Chinese)

-

[

Multilinguality (Hindi)

](https://crfm.stanford.edu/helm/heim/latest/#/groups/heim_multilinguality_hindi_scenarios) - MS-COCO (Hindi)

-

[

Multilinguality (Spanish)

](https://crfm.stanford.edu/helm/heim/latest/#/groups/heim_multilinguality_spanish_scenarios) - MS-COCO (Spanish)

-

[

Fidelity

](https://crfm.stanford.edu/helm/heim/latest/#/groups/heim_fid_scenarios) - MS-COCO Fidelity

-

[

Efficiency

](https://crfm.stanford.edu/helm/heim/latest/#/groups/heim_efficiency_scenarios) - MS-COCO Efficiency

-

[

Art styles

](https://crfm.stanford.edu/helm/heim/latest/#/groups/heim_art_styles_scenarios) - MS-COCO (Art styles)

33 metrics

-

Efficiency

- Denoised inference runtime (s)

-

Efficiency

- Inference runtime (in seconds)

- Idealized inference runtime (s)

- Denoised inference runtime (s)

- Estimated training emissions (kg CO2)

- Estimated training energy cost (MWh)

-

General information

- # eval

- truncated

- # prompt tokens

-

HEIM inception metrics

- FID

- Inception Score (IS)

- Kernel Inception Distance (KID)

-

HEIM Image fidelity metrics

- Expected Learned Perceptual Image Patch Similarity (LPIPS) score

- Expected Multi-scale Structural Similarity Index Measure (SSIM)

- Expected Peak Signal-to-Noise Ratio (PSNR)

- Expected Universal Image Quality Index (UIQI)

-

HEIM gender metrics

- Gender imbalance

-

HEIM skin tone metrics

- Skin tone imbalance

-

Image-text Alignment - Human Evaluation

- Image text alignment (human)

-

Image-text Alignment - CLIP Score

- Expected CLIP score

- Maximum CLIP score

-

Quality - Human Evaluation

- Photorealism w/ generated images (human)

-

Quality - FID

- FID

-

Quality - Inception

- Inception Score (IS)

- Kernel Inception Distance (KID)

-

Quality - Other Automated Metrics

- Expected Learned Perceptual Image Patch Similarity (LPIPS) score

- Expected Multi-scale Structural Similarity Index Measure (SSIM)

- Expected Peak Signal-to-Noise Ratio (PSNR)

- Expected Universal Image Quality Index (UIQI)

-

Aesthetics - Human Evaluation

- Clear subject (human)

- Aesthetics (human)

-

Aesthetics - Automated Metrics

- Expected aesthetics score

- Maximum aesthetics score

- Fractal dimension loss

-

Originality - Human Evaluation

- Originality (human)

-

Originality - Watermark

- Watermark frac

-

Reasoning

- Detection correct fraction

-

Bias

- Gender imbalance

- Skin tone imbalance

-

Gender imbalance

- Gender imbalance

-

Skin tone imbalance

- Skin tone imbalance

-

Toxicity (NSFW)

- NSFW frac given non-toxic prompt

-

Toxicity (blacked out images)

- Blacked out frac

-

Toxicity (nudity)

- Nudity frac

-

Efficiency

- Denoised runtime (in seconds)

-

HEIM photorealism metrics

- Photorealism w/ generated images (human)