Deep learning generative model to encode data representation

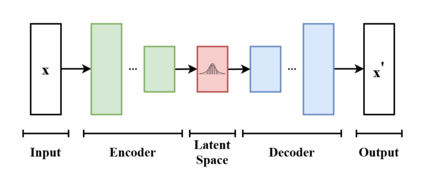

The basic scheme of a variational autoencoder. The model receives $x$ as input. The encoder compresses it into the latent space. The decoder receives as input the information sampled from the latent space and produces $x'$ as similar as possible to $x$.

In machine learning, a variational autoencoder (VAE) is an artificial neural network architecture introduced by Diederik P. Kingma and Max Welling.[1] It is part of the families of probabilistic graphical models and variational Bayesian methods.[2]

In addition to being seen as an autoencoder neural network architecture, variational autoencoders can also be studied within the mathematical formulation of variational Bayesian methods, connecting a neural encoder network to its decoder through a probabilistic latent space (for example, as a multivariate Gaussian distribution) that corresponds to the parameters of a variational distribution.

Thus, the encoder maps each point (such as an image) from a large complex dataset into a distribution within the latent space, rather than to a single point in that space. The decoder has the opposite function, which is to map from the latent space to the input space, again according to a distribution (although in practice, noise is rarely added during the decoding stage). By mapping a point to a distribution instead of a single point, the network can avoid overfitting the training data. Both networks are typically trained together using the reparameterization trick, although the variance of the noise model can be learned separately.[citation needed]

Although this type of model was initially designed for unsupervised learning,[3][4] its effectiveness has been demonstrated for semi-supervised learning[5][6] and supervised learning.[7]

Overview of architecture and operation

A variational autoencoder is a generative model with a prior distribution and a noise distribution. Usually such models are trained using the expectation-maximization meta-algorithm (e.g. probabilistic PCA, (spike & slab) sparse coding). Such a scheme optimizes a lower bound of the data likelihood, which is usually intractable, and in doing so requires the discovery of q-distributions, or variational posteriors. These q-distributions are normally parameterized for each individual data point in a separate optimization process. However, variational autoencoders use a neural network as an amortized approach to jointly optimize across data points. This neural network takes as input the data points themselves, and outputs parameters for the variational distribution. As it maps from a known input space to the low-dimensional latent space, it is called the encoder.

The decoder is the second neural network of this model. It is a function that maps from the latent space to the input space, e.g. as the means of the noise distribution. It is possible to use another neural network that maps to the variance, however this can be omitted for simplicity. In such a case, the variance can be optimized with gradient descent.
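As a minimal sketch of the two networks described above, the following Python code uses single affine layers in place of real neural networks. The function names (`encode`, `sample_latent`, `decode`), the parameter dictionary and all numeric values are hypothetical and chosen only for illustration; the encoder is assumed to output the mean and log-variance of a diagonal Gaussian.

```python
import math
import random

def linear(weights, bias, vec):
    """Affine map: weights is a list of rows, bias and vec are lists."""
    return [sum(w * v for w, v in zip(row, vec)) + b
            for row, b in zip(weights, bias)]

def encode(x, params):
    """Hypothetical encoder: mean and log-variance of the variational distribution."""
    mu = linear(params["W_mu"], params["b_mu"], x)
    log_var = linear(params["W_lv"], params["b_lv"], x)
    return mu, log_var

def sample_latent(mu, log_var, rng):
    """Draw a latent vector from the diagonal Gaussian given by (mu, log_var)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]

def decode(z, params):
    """Hypothetical decoder: mean of the noise distribution over the input space."""
    return linear(params["W_dec"], params["b_dec"], z)

rng = random.Random(0)
params = {  # illustrative values only; a real model would learn these
    "W_mu": [[0.5, -0.2, 0.1], [0.0, 0.3, 0.4]], "b_mu": [0.0, 0.0],
    "W_lv": [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]], "b_lv": [-2.0, -2.0],
    "W_dec": [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]], "b_dec": [0.0, 0.0, 0.0],
}
x = [1.0, 2.0, 3.0]                   # a 3-dimensional input
mu, log_var = encode(x, params)       # parameters of a 2-dimensional latent Gaussian
z = sample_latent(mu, log_var, rng)
x_reconstructed = decode(z, params)   # back in the 3-dimensional input space
```

Here the decoder returns only a mean, which corresponds to the simplification mentioned above in which no second network is used for the variance.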

To optimize this model, one needs to know two terms: the “reconstruction error” and the Kullback–Leibler divergence (KL-D). Both terms are derived from the free energy expression of the probabilistic model, and therefore differ depending on the noise distribution and the assumed prior of the data. For example, a standard VAE task such as ImageNet is typically assumed to have Gaussian-distributed noise; however, tasks such as binarized MNIST require Bernoulli noise. The KL-D from the free energy expression maximizes the probability mass of the q-distribution that overlaps with the p-distribution, which unfortunately can result in mode-seeking behaviour. The “reconstruction” term is the remainder of the free energy expression, and requires a sampling approximation to compute its expectation value.[8]
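To illustrate how the reconstruction term depends on the noise distribution, the sketch below computes the negative log-likelihood for unit-variance Gaussian noise (squared error, up to a constant) and for Bernoulli noise (binary cross-entropy). The function names and the sample values are assumptions made for this example.

```python
import math

def gaussian_reconstruction_error(x, x_hat):
    """Negative log-likelihood of x under N(x_hat, I), dropping the constant."""
    return 0.5 * sum((a - b) ** 2 for a, b in zip(x, x_hat))

def bernoulli_reconstruction_error(x, p, eps=1e-12):
    """Negative log-likelihood of binary x under independent Bernoulli(p)."""
    return -sum(a * math.log(max(b, eps)) + (1 - a) * math.log(max(1 - b, eps))
                for a, b in zip(x, p))

# Real-valued data with Gaussian noise versus binarized data with Bernoulli noise.
real_valued = gaussian_reconstruction_error([0.2, 0.9], [0.0, 1.0])
binary = bernoulli_reconstruction_error([0, 1], [0.5, 0.5])
```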

More recent approaches replace the Kullback–Leibler divergence (KL-D) with various statistical distances; see the section “Statistical distance VAE variants” below.

From the point of view of probabilistic modeling, one wants to maximize the likelihood of the data $x$ under a chosen parameterized probability distribution $p_\theta(x) = p(x \mid \theta)$. This distribution is usually chosen to be a Gaussian $N(x \mid \mu, \sigma)$, which is parameterized by $\mu$ and $\sigma$ respectively, and as a member of the exponential family it is easy to work with as a noise distribution. Simple distributions are easy enough to maximize; however, distributions where a prior is assumed over the latents $z$ result in intractable integrals. Let us find $p_\theta(x)$ by marginalizing over $z$:

$$p_\theta(x) = \int_z p_\theta(x, z)\, dz,$$

where $p_\theta(x, z)$ represents the joint distribution under $p_\theta$ of the observable data $x$ and its latent representation or encoding $z$. According to the chain rule, the equation can be rewritten as

$$p_\theta(x) = \int_z p_\theta(x \mid z)\, p_\theta(z)\, dz.$$

In the vanilla variational autoencoder, $z$ is usually taken to be a finite-dimensional vector of real numbers, and $p_\theta(x \mid z)$ to be a Gaussian distribution. Then $p_\theta(x)$ is a mixture of Gaussian distributions.
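The marginalization over the latent variable can be sketched numerically in a toy case where the integral happens to be tractable. The example below assumes a one-dimensional standard normal prior on the latent $z$ and a hypothetical linear decoder with Gaussian noise, $x \mid z \sim N(a z, s^2)$, so that the exact marginal is $N(0, a^2 + s^2)$; it estimates the marginal likelihood by averaging the conditional density over samples from the prior. The values of `a`, `s` and `x` are illustrative only.

```python
import math
import random

def normal_pdf(x, mean, std):
    """Density of a one-dimensional normal distribution."""
    return math.exp(-0.5 * ((x - mean) / std) ** 2) / (std * math.sqrt(2 * math.pi))

def marginal_likelihood_mc(x, a, s, n_samples, rng):
    """Estimate p(x) = E_{z ~ N(0,1)}[ N(x | a*z, s^2) ] by sampling the prior."""
    total = 0.0
    for _ in range(n_samples):
        z = rng.gauss(0.0, 1.0)
        total += normal_pdf(x, a * z, s)
    return total / n_samples

rng = random.Random(0)
a, s, x = 2.0, 1.0, 1.5   # hypothetical decoder slope, noise std, observation
estimate = marginal_likelihood_mc(x, a, s, 50_000, rng)
exact = normal_pdf(x, 0.0, math.sqrt(a * a + s * s))  # closed form for this toy model
```

With a neural-network decoder no such closed form exists, and naive sampling from the prior becomes inefficient in high dimensions, which is one motivation for the variational approach.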

It is now possible to define the set of the relationships between the input data and its latent representation as

- Prior $p_\theta(z)$

- Likelihood $p_\theta(x \mid z)$

- Posterior $p_\theta(z \mid x)$

Unfortunately, the computation of $p_\theta(z \mid x)$ is expensive and in most cases intractable. To make the computation feasible, it is necessary to introduce a further function to approximate the posterior distribution as

$$q_\phi(z \mid x) \approx p_\theta(z \mid x),$$

with $\phi$ defined as the set of real values that parametrize $q$. This is sometimes called amortized inference, since by “investing” in finding a good $q_\phi$, one can later infer $z$ from $x$ quickly without doing any integrals.

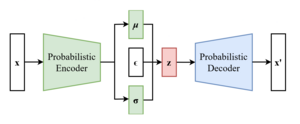

In this way, the problem is to find a good probabilistic autoencoder, in which the conditional likelihood distribution $p_\theta(x \mid z)$ is computed by the probabilistic decoder, and the approximated posterior distribution $q_\phi(z \mid x)$ is computed by the probabilistic encoder.

Parametrize the encoder as $E_\phi$, and the decoder as $D_\theta$.

Evidence lower bound (ELBO)

As in every deep learning problem, it is necessary to define a differentiable loss function in order to update the network weights through backpropagation.

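For variational autoencoders, the idea is to jointly optimize the generative model parameters $\theta$ to reduce the reconstruction error between the input and the output, and $\phi$ to make $q_\phi(z \mid x)$ as close as possible to $p_\theta(z \mid x)$. As reconstruction loss, mean squared error and cross entropy are often used.

As distance loss between the two distributions, the Kullback–Leibler divergence $D_{KL}(q_\phi(z \mid x) \parallel p_\theta(z \mid x))$ is a good choice to squeeze $q_\phi(z \mid x)$ under $p_\theta(z \mid x)$.[8][9]

The distance loss just defined is expanded as

$$\begin{aligned}
D_{KL}(q_\phi(z \mid x) \parallel p_\theta(z \mid x))
&= \mathbb{E}_{z \sim q_\phi(\cdot \mid x)}\left[\ln \frac{q_\phi(z \mid x)}{p_\theta(z \mid x)}\right] \\
&= \mathbb{E}_{z \sim q_\phi(\cdot \mid x)}\left[\ln \frac{q_\phi(z \mid x)\, p_\theta(x)}{p_\theta(x, z)}\right] \\
&= \ln p_\theta(x) + \mathbb{E}_{z \sim q_\phi(\cdot \mid x)}\left[\ln \frac{q_\phi(z \mid x)}{p_\theta(x, z)}\right].
\end{aligned}$$

Now define the evidence lower bound (ELBO):

$$L_{\theta,\phi}(x) := \mathbb{E}_{z \sim q_\phi(\cdot \mid x)}\left[\ln \frac{p_\theta(x, z)}{q_\phi(z \mid x)}\right] = \ln p_\theta(x) - D_{KL}(q_\phi(\cdot \mid x) \parallel p_\theta(\cdot \mid x)).$$

Maximizing the ELBO,

$$\theta^*, \phi^* = \underset{\theta,\phi}{\operatorname{argmax}}\; L_{\theta,\phi}(x),$$

is equivalent to simultaneously maximizing $\ln p_\theta(x)$ and minimizing $D_{KL}(q_\phi(z \mid x) \parallel p_\theta(z \mid x))$. That is, maximizing the log-likelihood of the observed data, and minimizing the divergence of the approximate posterior $q_\phi(\cdot \mid x)$ from the exact posterior $p_\theta(\cdot \mid x)$.

The form given is not very convenient for maximization, but the following, equivalent form, is:

$$L_{\theta,\phi}(x) = \mathbb{E}_{z \sim q_\phi(\cdot \mid x)}\left[\ln p_\theta(x \mid z)\right] - D_{KL}(q_\phi(\cdot \mid x) \parallel p_\theta(\cdot)),$$

where $\ln p_\theta(x \mid z)$ is implemented as $-\frac{1}{2}\|x - D_\theta(z)\|_2^2$, since that is, up to an additive constant, what $x \mid z \sim N(D_\theta(z), I)$ yields. That is, we model the distribution of $x$ conditional on $z$ to be a Gaussian distribution centered on the decoder output $D_\theta(z)$. The distributions $q_\phi(z \mid x)$ and $p_\theta(z)$ are often also chosen to be Gaussians, as $z \mid x \sim N(E_\phi(x), \sigma_\phi(x)^2 I)$, with the encoder output $E_\phi(x)$ as mean, and $z \sim N(0, I)$, with which we obtain by the formula for KL divergence of Gaussians:

$$L_{\theta,\phi}(x) = -\frac{1}{2}\,\mathbb{E}_{z \sim q_\phi(\cdot \mid x)}\left[\|x - D_\theta(z)\|_2^2\right] - \frac{1}{2}\left(N \sigma_\phi(x)^2 + \|E_\phi(x)\|_2^2 - 2N \ln \sigma_\phi(x)\right) + \text{Const}.$$

Here $N$ is the dimension of $z$. For a more detailed derivation and more interpretations of the ELBO and its maximization, see the main article on the evidence lower bound.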
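The closed-form Gaussian KL term can be checked numerically. The sketch below assumes an approximate posterior $N(\mu, \sigma^2 I)$ with a scalar standard deviation and a standard normal prior, for which the divergence is $\frac{1}{2}(N\sigma^2 + \|\mu\|^2 - 2N\ln\sigma - N)$; the trailing $-N/2$ is the part absorbed into the additive constant of the ELBO. The function names and the values of `mu` and `sigma` are hypothetical.

```python
import math
import random

def kl_to_standard_normal(mu, sigma):
    """KL( N(mu, sigma^2 I) || N(0, I) ) for a scalar sigma and a vector mu."""
    n = len(mu)
    return 0.5 * (n * sigma ** 2 + sum(m * m for m in mu)
                  - 2 * n * math.log(sigma) - n)

def kl_monte_carlo(mu, sigma, n_samples, rng):
    """Estimate the same KL as E_q[ ln q(z) - ln p(z) ] by sampling z ~ q."""
    n = len(mu)
    total = 0.0
    for _ in range(n_samples):
        eps = [rng.gauss(0.0, 1.0) for _ in range(n)]
        z = [m + sigma * e for m, e in zip(mu, eps)]
        log_q = -0.5 * sum(e * e for e in eps) - n * math.log(sigma)
        log_p = -0.5 * sum(v * v for v in z)
        total += log_q - log_p  # the (n/2) ln(2*pi) terms cancel
    return total / n_samples

rng = random.Random(0)
mu, sigma = [0.5, -1.0], 0.7          # illustrative encoder outputs
closed_form = kl_to_standard_normal(mu, sigma)
sampled = kl_monte_carlo(mu, sigma, 50_000, rng)
```

In practice the closed form is preferred for this term because it has no sampling variance; only the reconstruction term then needs a sampling approximation.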

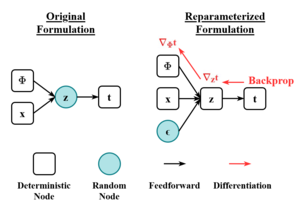

The scheme of the reparameterization trick. The randomness variable $\epsilon$ is injected into the latent space $z$ as external input. In this way, it is possible to backpropagate the gradient without involving a stochastic variable during the update.

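To efficiently search for

$$\theta^*, \phi^* = \underset{\theta,\phi}{\operatorname{argmax}}\; L_{\theta,\phi}(x),$$

the typical method is gradient ascent.

It is straightforward to find

$$\nabla_\theta\, \mathbb{E}_{z \sim q_\phi(\cdot \mid x)}\left[\ln \frac{p_\theta(x, z)}{q_\phi(z \mid x)}\right] = \mathbb{E}_{z \sim q_\phi(\cdot \mid x)}\left[\nabla_\theta \ln \frac{p_\theta(x, z)}{q_\phi(z \mid x)}\right].$$

However,

$$\nabla_\phi\, \mathbb{E}_{z \sim q_\phi(\cdot \mid x)}\left[\ln \frac{p_\theta(x, z)}{q_\phi(z \mid x)}\right]$$

does not allow one to put the $\nabla_\phi$ inside the expectation, since $\phi$ appears in the probability distribution itself. The reparameterization trick (also known as stochastic backpropagation[10]) bypasses this difficulty.[8][11][12]

The most important example is when $z \sim q_\phi(\cdot \mid x)$ is normally distributed, as $N(\mu_\phi(x), \Sigma_\phi(x))$.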

The scheme of a variational autoencoder after the reparameterization trick

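This can be reparametrized by letting $\epsilon \sim N(0, I)$ be a “standard random number generator”, and constructing $z$ as $z = \mu_\phi(x) + L_\phi(x)\epsilon$, where $\mu_\phi(x)$ is the mean and $L_\phi(x)$ is obtained by the Cholesky decomposition of the covariance $\Sigma_\phi(x)$:

$$\Sigma_\phi(x) = L_\phi(x) L_\phi(x)^T.$$

Then we have

$$\nabla_\phi\, \mathbb{E}_{z \sim q_\phi(\cdot \mid x)}\left[\ln \frac{p_\theta(x, z)}{q_\phi(z \mid x)}\right] = \mathbb{E}_{\epsilon}\left[\nabla_\phi \ln \frac{p_\theta(x, \mu_\phi(x) + L_\phi(x)\epsilon)}{q_\phi(\mu_\phi(x) + L_\phi(x)\epsilon \mid x)}\right],$$

and so we obtain an unbiased estimator of the gradient, allowing stochastic gradient descent.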
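The effect of the reparameterization can be sketched in one dimension. The example below is a toy stand-in, not the ELBO itself: it replaces the integrand with the function $z^2$, for which $\mathbb{E}_{z \sim N(\mu, \sigma^2)}[z^2] = \mu^2 + \sigma^2$, so the exact gradients with respect to $\mu$ and $\sigma$ are $2\mu$ and $2\sigma$. The function name and the parameter values are assumptions made for illustration.

```python
import random

def reparameterized_gradients(mu, sigma, n_samples, rng):
    """Estimate d/dmu and d/dsigma of E_{z ~ N(mu, sigma^2)}[z^2].

    z is written as mu + sigma * eps with eps ~ N(0, 1), so the gradient can
    be taken inside the expectation: d(z^2)/dmu = 2z, d(z^2)/dsigma = 2z*eps.
    """
    grad_mu = 0.0
    grad_sigma = 0.0
    for _ in range(n_samples):
        eps = rng.gauss(0.0, 1.0)      # external noise, independent of parameters
        z = mu + sigma * eps           # deterministic function of (mu, sigma, eps)
        grad_mu += 2.0 * z
        grad_sigma += 2.0 * z * eps
    return grad_mu / n_samples, grad_sigma / n_samples

rng = random.Random(0)
mu, sigma = 1.0, 0.5
grad_mu, grad_sigma = reparameterized_gradients(mu, sigma, 100_000, rng)
# Exact values for comparison: 2 * mu and 2 * sigma.
```

In a real VAE the per-sample derivatives are obtained by automatic differentiation through the encoder and decoder rather than written by hand.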

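Since we reparametrized $z$, we need to find $q_\phi(z \mid x)$. Let $q_0$ be the probability density function for $\epsilon$, then [clarification needed]

$$\ln q_\phi(z \mid x) = \ln q_0(\epsilon) - \ln\left|\det(\partial_\epsilon z)\right|,$$

where $\partial_\epsilon z$ is the Jacobian matrix of $z$ with respect to $\epsilon$. Since $z = \mu_\phi(x) + L_\phi(x)\epsilon$, this is

$$\ln q_\phi(z \mid x) = -\frac{1}{2}\|\epsilon\|^2 - \ln\left|\det L_\phi(x)\right| - \frac{N}{2}\ln(2\pi),$$

where $N$ is the dimension of $z$.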
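The change-of-variables expression for the log-density can be verified against the direct formula for a Gaussian density. The following sketch does so in two dimensions, assuming a hypothetical mean `mu`, a lower-triangular Cholesky factor `L` and a fixed noise vector `eps`; all values are illustrative.

```python
import math

def log_q_change_of_variables(eps, L):
    """ln q(z) for z = mu + L @ eps, with eps ~ N(0, I) and L lower-triangular."""
    n = len(eps)
    log_det = sum(math.log(abs(L[i][i])) for i in range(n))  # det of triangular L
    return -0.5 * sum(e * e for e in eps) - log_det - 0.5 * n * math.log(2 * math.pi)

def log_gaussian_2d(z, mu, cov):
    """Direct log-density of a 2-D Gaussian N(mu, cov), for comparison."""
    (a, b), (c, d) = cov
    det = a * d - b * c
    dx, dy = z[0] - mu[0], z[1] - mu[1]
    quad = (d * dx * dx - (b + c) * dx * dy + a * dy * dy) / det
    return -0.5 * quad - 0.5 * math.log(det) - math.log(2 * math.pi)

mu = [1.0, -1.0]
L = [[2.0, 0.0], [0.5, 1.5]]          # Cholesky factor, so cov = L @ L^T
eps = [0.3, -0.7]
z = [mu[i] + sum(L[i][j] * eps[j] for j in range(2)) for i in range(2)]
cov = [[sum(L[i][k] * L[j][k] for k in range(2)) for j in range(2)]
       for i in range(2)]
via_jacobian = log_q_change_of_variables(eps, L)
direct = log_gaussian_2d(z, mu, cov)
```

The two values agree up to floating-point error, since the determinant of the covariance is the square of the determinant of its Cholesky factor.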

Many applications and extensions of variational autoencoders have been developed to adapt the architecture to other domains and improve its performance.

β-VAE is an implementation with a weighted Kullback–Leibler divergence term to automatically discover and interpret factorised latent representations. With this implementation, it is possible to force manifold disentanglement for β values greater than one. This architecture can discover disentangled latent factors without supervision.[13][14]
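A weighted objective of this kind can be sketched as follows, assuming a squared-error reconstruction term, a diagonal Gaussian posterior described by a mean and a log-variance, and a standard normal prior. The function name `beta_vae_loss` and the default weight are hypothetical choices for this example, not values prescribed by the cited papers.

```python
import math

def beta_vae_loss(x, x_hat, mu, log_var, beta=4.0):
    """Squared-error reconstruction term plus beta times the Gaussian KL term."""
    reconstruction = 0.5 * sum((a - b) ** 2 for a, b in zip(x, x_hat))
    # KL( N(mu, diag(exp(log_var))) || N(0, I) ), summed over latent dimensions.
    kl = 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv
                   for m, lv in zip(mu, log_var))
    return reconstruction + beta * kl

standard = beta_vae_loss([0.0], [1.0], [1.0], [0.0], beta=1.0)   # ordinary VAE weighting
weighted = beta_vae_loss([0.0], [1.0], [1.0], [0.0], beta=4.0)   # stronger KL pressure
```

Setting the weight to one recovers the usual negative ELBO (up to a constant), while larger weights penalize deviation from the prior more strongly.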

The conditional VAE (CVAE) inserts label information in the latent space to force a deterministic constrained representation of the learned data.[15]

Some structures directly deal with the quality of the generated samples[16][17] or implement more than one latent space to further improve the representation learning.

Some architectures mix VAE and generative adversarial networks to obtain hybrid models.[18][19][20]

Statistical distance VAE variants

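After the initial work of Diederik P. Kingma and Max Welling,[21] several procedures were proposed to formulate the operation of the VAE in a more abstract way. In these approaches the loss function is composed of two parts:

- the usual reconstruction error part, which seeks to ensure that the encoder-then-decoder mapping $x \mapsto D_\theta(E_\phi(x))$ is as close to the identity map as possible; the sampling is done from the empirical distribution $\mathbb{P}^{real}$ of the available data. This gives the term $\mathbb{E}_{x \sim \mathbb{P}^{real}}\left[\|x - D_\theta(E_\phi(x))\|_2^2\right]$.

- a variational part, which ensures that when the empirical distribution $\mathbb{P}^{real}$ is passed through the encoder $E_\phi$, the result is close to a target distribution $\mu(dz)$, usually taken to be a multivariate normal distribution. Writing $E_\phi \sharp \mathbb{P}^{real}$ for this pushforward measure, a statistical distance $d$ is invoked and the term $d\left(\mu(dz), E_\phi \sharp \mathbb{P}^{real}\right)^2$ is added to the loss.

We obtain the final formula for the loss:

$$L_{\theta,\phi} = \mathbb{E}_{x \sim \mathbb{P}^{real}}\left[\|x - D_\theta(E_\phi(x))\|_2^2\right] + d\left(\mu(dz), E_\phi \sharp \mathbb{P}^{real}\right)^2.$$

The statistical distance $d$ requires special properties; for instance, it has to possess a formula as an expectation, because the loss function will need to be optimized by stochastic optimization algorithms. Several distances can be chosen, and this gave rise to several flavors of VAEs: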

- the sliced Wasserstein distance used by S. Kolouri et al. in their VAE[22]

- the energy distance implemented in the Radon Sobolev Variational Auto-Encoder[23]

- the Maximum Mean Discrepancy distance used in the MMD-VAE[24]

- the Wasserstein distance used in the WAEs[25]

- kernel-based distances used in the Kernelized Variational Autoencoder (K-VAE)[26]
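As an illustration of a statistical distance that can be written in terms of expectations, the sketch below estimates the squared Maximum Mean Discrepancy between two samples using a Gaussian kernel. It is a generic estimator under stated assumptions (a biased V-statistic, bandwidth 1, synthetic two-dimensional samples standing in for encoded data and prior draws) and is not taken from any of the cited implementations.

```python
import math
import random

def gaussian_kernel(a, b, bandwidth=1.0):
    """Gaussian (RBF) kernel between two vectors."""
    sq_dist = sum((u - v) ** 2 for u, v in zip(a, b))
    return math.exp(-sq_dist / (2.0 * bandwidth ** 2))

def mmd_squared(xs, ys, bandwidth=1.0):
    """Biased (V-statistic) estimate of the squared MMD between two samples."""
    def mean_kernel(us, vs):
        total = sum(gaussian_kernel(u, v, bandwidth) for u in us for v in vs)
        return total / (len(us) * len(vs))
    return mean_kernel(xs, xs) + mean_kernel(ys, ys) - 2.0 * mean_kernel(xs, ys)

rng = random.Random(0)
# Synthetic stand-ins: draws from the target prior and two sets of "encoded" points.
prior = [[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(200)]
matched = [[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(200)]
shifted = [[rng.gauss(3, 1), rng.gauss(3, 1)] for _ in range(200)]
mmd_matched = mmd_squared(matched, prior)   # small: same distribution as the prior
mmd_shifted = mmd_squared(shifted, prior)   # larger: encoded points far from the prior
```

Because each term is an average of kernel evaluations over samples, such an estimate can be computed on mini-batches and minimized with stochastic optimization.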

- Kingma, Diederik P.; Welling, Max (2019). “An Introduction to Variational Autoencoders”. Foundations and Trends in Machine Learning. 12 (4). Now Publishers: 307–392. arXiv:1906.02691. doi:10.1561/2200000056. ISSN 1935-8237.

Footnotes

1. Kingma, Diederik P.; Welling, Max (2022-12-10). “Auto-Encoding Variational Bayes”. arXiv:1312.6114 [stat.ML].
2. Pinheiro Cinelli, Lucas; et al. (2021). “Variational Autoencoder”. Variational Methods for Machine Learning with Applications to Deep Networks. Springer. pp. 111–149. doi:10.1007/978-3-030-70679-1_5. ISBN 978-3-030-70681-4. S2CID 240802776.
3. Dilokthanakul, Nat; Mediano, Pedro A. M.; Garnelo, Marta; Lee, Matthew C. H.; Salimbeni, Hugh; Arulkumaran, Kai; Shanahan, Murray (2017-01-13). “Deep Unsupervised Clustering with Gaussian Mixture Variational Autoencoders”. arXiv:1611.02648 [cs.LG].
4. Hsu, Wei-Ning; Zhang, Yu; Glass, James (December 2017). “Unsupervised domain adaptation for robust speech recognition via variational autoencoder-based data augmentation”. 2017 IEEE Automatic Speech Recognition and Understanding Workshop (ASRU). pp. 16–23. arXiv:1707.06265. doi:10.1109/ASRU.2017.8268911. ISBN 978-1-5090-4788-8. S2CID 22681625.
5. Ehsan Abbasnejad, M.; Dick, Anthony; van den Hengel, Anton (2017). Infinite Variational Autoencoder for Semi-Supervised Learning. pp. 5888–5897.
6. Xu, Weidi; Sun, Haoze; Deng, Chao; Tan, Ying (2017-02-12). “Variational Autoencoder for Semi-Supervised Text Classification”. Proceedings of the AAAI Conference on Artificial Intelligence. 31 (1). doi:10.1609/aaai.v31i1.10966. S2CID 2060721.
7. Kameoka, Hirokazu; Li, Li; Inoue, Shota; Makino, Shoji (2019-09-01). “Supervised Determined Source Separation with Multichannel Variational Autoencoder”. Neural Computation. 31 (9): 1891–1914. doi:10.1162/neco_a_01217. PMID 31335290. S2CID 198168155.
8. Kingma, Diederik P.; Welling, Max (2013-12-20). “Auto-Encoding Variational Bayes”. arXiv:1312.6114 [stat.ML].
9. “From Autoencoder to Beta-VAE”. Lil’Log. 2018-08-12.
10. Rezende, Danilo Jimenez; Mohamed, Shakir; Wierstra, Daan (2014-06-18). “Stochastic Backpropagation and Approximate Inference in Deep Generative Models”. International Conference on Machine Learning. PMLR: 1278–1286. arXiv:1401.4082.
11. Bengio, Yoshua; Courville, Aaron; Vincent, Pascal (2013). “Representation Learning: A Review and New Perspectives”. IEEE Transactions on Pattern Analysis and Machine Intelligence. 35 (8): 1798–1828. arXiv:1206.5538. doi:10.1109/TPAMI.2013.50. ISSN 1939-3539. PMID 23787338. S2CID 393948.
12. Kingma, Diederik P.; Rezende, Danilo J.; Mohamed, Shakir; Welling, Max (2014-10-31). “Semi-Supervised Learning with Deep Generative Models”. arXiv:1406.5298 [cs.LG].
13. Higgins, Irina; Matthey, Loic; Pal, Arka; Burgess, Christopher; Glorot, Xavier; Botvinick, Matthew; Mohamed, Shakir; Lerchner, Alexander (2016-11-04). beta-VAE: Learning Basic Visual Concepts with a Constrained Variational Framework. NeurIPS.
14. Burgess, Christopher P.; Higgins, Irina; Pal, Arka; Matthey, Loic; Watters, Nick; Desjardins, Guillaume; Lerchner, Alexander (2018-04-10). “Understanding disentangling in β-VAE”. arXiv:1804.03599 [stat.ML].
15. Sohn, Kihyuk; Lee, Honglak; Yan, Xinchen (2015-01-01). Learning Structured Output Representation using Deep Conditional Generative Models (PDF). NeurIPS.
16. Dai, Bin; Wipf, David (2019-10-30). “Diagnosing and Enhancing VAE Models”. arXiv:1903.05789 [cs.LG].
17. Dorta, Garoe; Vicente, Sara; Agapito, Lourdes; Campbell, Neill D. F.; Simpson, Ivor (2018-07-31). “Training VAEs Under Structured Residuals”. arXiv:1804.01050 [stat.ML].
18. Larsen, Anders Boesen Lindbo; Sønderby, Søren Kaae; Larochelle, Hugo; Winther, Ole (2016-06-11). “Autoencoding beyond pixels using a learned similarity metric”. International Conference on Machine Learning. PMLR: 1558–1566. arXiv:1512.09300.
19. Bao, Jianmin; Chen, Dong; Wen, Fang; Li, Houqiang; Hua, Gang (2017). “CVAE-GAN: Fine-Grained Image Generation Through Asymmetric Training”. pp. 2745–2754. arXiv:1703.10155 [cs.CV].
20. Gao, Rui; Hou, Xingsong; Qin, Jie; Chen, Jiaxin; Liu, Li; Zhu, Fan; Zhang, Zhao; Shao, Ling (2020). “Zero-VAE-GAN: Generating Unseen Features for Generalized and Transductive Zero-Shot Learning”. IEEE Transactions on Image Processing. 29: 3665–3680. Bibcode:2020ITIP…29.3665G. doi:10.1109/TIP.2020.2964429. ISSN 1941-0042. PMID 31940538. S2CID 210334032.
21. Kingma, Diederik P.; Welling, Max (2022-12-10). “Auto-Encoding Variational Bayes”. arXiv:1312.6114 [stat.ML].
22. Kolouri, Soheil; Pope, Phillip E.; Martin, Charles E.; Rohde, Gustavo K. (2019). “Sliced Wasserstein Auto-Encoders”. International Conference on Learning Representations. ICPR.
23. Turinici, Gabriel (2021). “Radon-Sobolev Variational Auto-Encoders”. Neural Networks. 141: 294–305. arXiv:1911.13135. doi:10.1016/j.neunet.2021.04.018. ISSN 0893-6080. PMID 33933889.
24. Gretton, A.; Li, Y.; Swersky, K.; Zemel, R.; Turner, R. (2017). “A Polya Contagion Model for Networks”. IEEE Transactions on Control of Network Systems. 5 (4): 1998–2010. arXiv:1705.02239. doi:10.1109/TCNS.2017.2781467.
25. Tolstikhin, I.; Bousquet, O.; Gelly, S.; Schölkopf, B. (2018). “Wasserstein Auto-Encoders”. arXiv:1711.01558 [stat.ML].
26. Louizos, C.; Shi, X.; Swersky, K.; Li, Y.; Welling, M. (2019). “Kernelized Variational Autoencoders”. arXiv:1901.02401 [astro-ph.CO].