a surprising inability to repeat common non-English words

DeepSeek is unusual for retraining its tokenizer regularly. This, combined with R1’s huge size, makes running the under-trained token pipeline difficult. But curiosity got the better of me, so here’s a deep dive into its deep glitches.

Pre-tokenization

DeepSeek Math had a few unreachable tokens such as ®▁, which does not re-encode to itself, but can be reached when followed by other text. In R1 these problems are gone, and the regular expressions are slightly simpler. The pre-tokenizer splits off digits in groups of up to three, separates CJK characters, and then applies a complex pattern resembling GPT-4’s — though clearly optimized for DeepSeek’s own preferences.
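To make the digit rule concrete, here's a minimal sketch of splitting digits into groups of up to three. The pattern below is a simplified illustration written for this example, not R1's actual pre-tokenizer regex, and it ignores the CJK and GPT-4-style stages entirely.

```python
import re

# Illustrative only: digit runs are cut into chunks of at most three,
# and everything else is kept as a single chunk.
# This is NOT the real R1 pre-tokenizer pattern.
DIGIT_GROUPS = re.compile(r"\d{1,3}|\D+")


def split_digits(text: str) -> list[str]:
    """Split text so that no chunk contains more than three digits."""
    return DIGIT_GROUPS.findall(text)


print(split_digits("id=1234567"))  # ['id=', '123', '456', '7']
```

Note that the grouping runs left to right, so a seven-digit number ends with a single trailing digit rather than starting with one.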

Finding DeepSeek-R1 glitch tokens

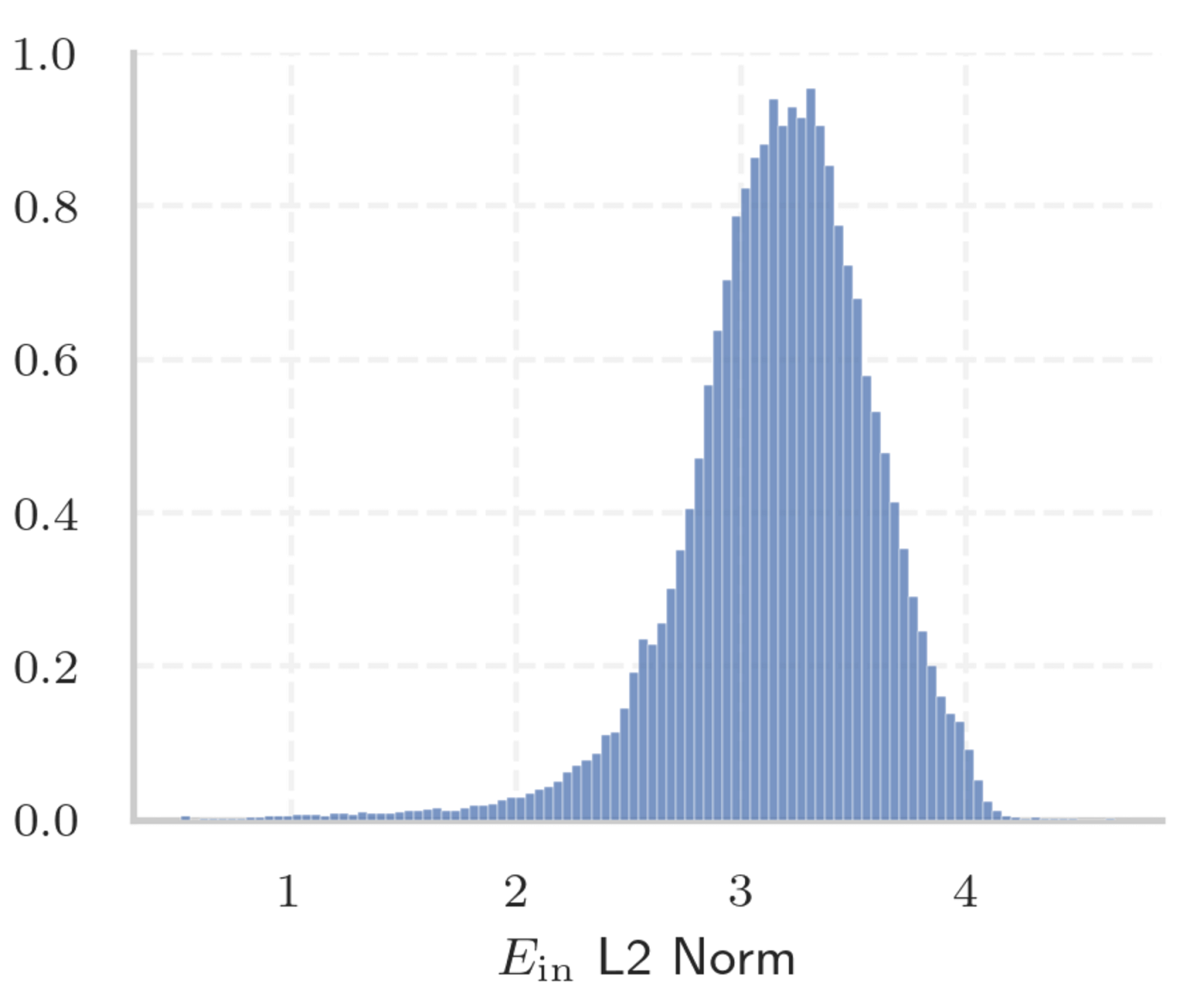

Although loading the entire model is impractical, we can still examine the input embeddings by loading only the Embedding tensor (see snippet at the end for details). This yields the following distribution for the input embeddings based indicator. :

Overall, this suggests a low proportion of glitch tokens, with only a small peak on the left. Since embedding norms never reach zero, it appears that either selective or no weight decay was used on this tensor.

There’s a small spike near 0.5, consisting of unused UTF-8 bytes and some untrained special tokens (e.g., tool calls). Just above this, the normal glitch tokens begin, with _kabungtor appearing at 0.57. Interestingly, the place_holder_no_### tokens cluster around 2.5, suggesting they received special treatment.
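As a rough sketch of how candidates could be ranked from the embedding matrix alone: the exact indicator formula isn't spelled out here, so this assumes it is simply the L2 norm of each token's embedding row. The function names are made up for this example, and a real run would use NumPy or PyTorch rather than plain lists.

```python
import math


def row_norms(embeddings):
    """L2 norm of each row (one row per token id)."""
    return [math.sqrt(sum(x * x for x in row)) for row in embeddings]


def lowest_norm_candidates(embeddings, k):
    """Return the k (token_id, norm) pairs with the smallest norms.

    Assumption: a low norm is used as the under-training indicator.
    """
    norms = row_norms(embeddings)
    order = sorted(range(len(norms)), key=norms.__getitem__)
    return [(i, norms[i]) for i in order[:k]]


# Toy 3-token "vocabulary" with 2-dimensional embeddings.
toy = [[3.0, 4.0], [0.3, 0.4], [1.0, 0.0]]
for token_id, norm in lowest_norm_candidates(toy, 2):
    print(token_id, round(norm, 3))
```

The lowest-ranked ids are only candidates; they still need to be verified by prompting the model.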

To verify candidates, we use API calls with the following prompt:

Change this code to use double quotes. Be very careful to not change the value, as it critical.

\`\`\`python

password = '0{token}'

\`\`\`

The “0” ensures the token doesn’t stick to the opening quote, as the vocabulary includes tokens with mixed punctuation and letters.
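Filling in the prompt for a given candidate is a one-liner. Here's a small sketch; `build_prompt` is a hypothetical helper name, and the exact whitespace around the code block is an assumption.

```python
FENCE = "`" * 3  # built this way so the snippet can itself sit in a fenced block


def build_prompt(token: str) -> str:
    """Build the verification prompt for one candidate token string."""
    return (
        "Change this code to use double quotes. "
        "Be very careful to not change the value, as it critical.\n\n"
        f"{FENCE}python\n"
        f"password = '0{token}'\n"  # the leading 0 separates the token from the quote
        f"{FENCE}"
    )


print(build_prompt("Nasjonalitet"))
```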

Verifying glitchiness

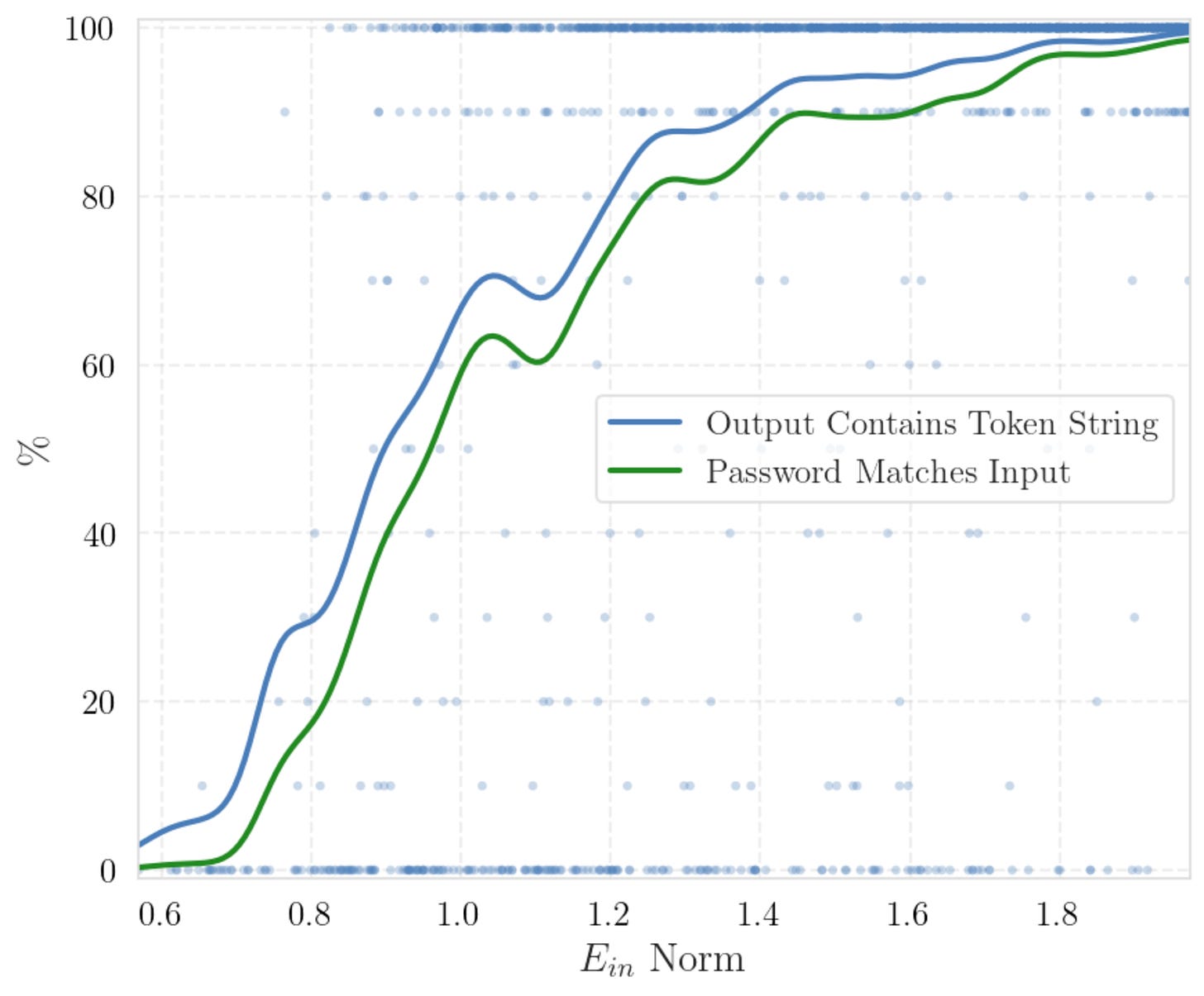

We test the top 2000 candidates, running 10 attempts each at different temperatures. The results are as follows:

Here’s how we evaluate glitchiness:

- Output Contains Token String: Accepts the token appearing anywhere in the output, even if it’s part of a different word (e.g., responding with `Kentucky` when prompted with `entucky`).
- Password Matches Input: Only accepts fully correct outputs.
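The two criteria above can be sketched as simple string checks. How the final answer is extracted from the model output isn't described, so this sketch assumes the last `password = "..."` assignment in the output is the answer; the function names are illustrative.

```python
import re

# Assumption: the answer is the last double-quoted password assignment.
PASSWORD_RE = re.compile(r'password\s*=\s*"([^"\n]*)"')


def output_contains_token(output: str, token: str) -> bool:
    """Lenient check: the token string appears anywhere in the output."""
    return token in output


def password_matches_input(output: str, token: str) -> bool:
    """Strict check: the extracted password equals '0' + token exactly."""
    matches = PASSWORD_RE.findall(output)
    return bool(matches) and matches[-1] == "0" + token


reply = 'Here is the updated code:\npassword = "0Kentucky"'
print(output_contains_token(reply, "entucky"))   # True
print(password_matches_input(reply, "entucky"))  # False
```

The `Kentucky` reply shows why the two checks diverge: the lenient one passes because `entucky` is a substring, while the strict one fails because the value changed.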

The verification results align well with the embedding-based predictor. Yet, even at relatively high values, some tokens fail this simple task—even when they correspond to perfectly reasonable words.

For instance, asking “What is Vertaisarvioitu?” (Peer-reviewed) yields:

Okay, the user is asking about “CrossRef/DOI.” Let me start by breaking down what each part means.

Similarly, asking “Nasjonalitet means what?” (What does nationality mean?) results in:

Okay, the user is asking what “Vaterlandika” means. Wait, first I need to check if that’s the correct spelling. Maybe they meant “Vaterland” which is German for “fatherland.” But “Vaterlandika” doesn’t seem like a standard word. Could it be a typo? Let me think.

In general, responses tend to be loosely related to the topic but include excessive “checking what the user wrote,” spurious <think> and </think> tokens, and occasional shifts from correctly repeating a word to substituting it with an incorrect one.

Conclusion: Remarkable Fragility

Despite being a very strong model, DeepSeek-R1 exhibits remarkable fragility, producing catastrophically bad outputs in response to ordinary words. While nearly all models have some glitch tokens, what’s striking here is how easily sensible queries break the model — whereas many other models require highly unrealistic prompts to trigger similar failures.

Some thinking traces

Here are some extended outputs, selected for interestingness and reasonable length.

Token 127635: послено (part of ‘Запослено’, ‘Employed’ in Serbian). This is the token with the second-lowest embedding norm.

Okay, I need to change the single quotes in the Python code to double quotes. The original line is password = ‘0енско’. Wait, the user wrote ‘0енско’ but maybe that’s a typo. Let me check again. Oh, the code given is password = ‘0{enclose}‘. Wait, no, the user wrote the password as ‘0{enclose}‘. Wait, no, looking back, the code is password = ‘0{enclose}‘. Wait, no, the original code is password = ‘0{enclose}‘. Wait, the user’s code is written as password = ‘0{enclose}‘.

[large amount of repetitive rambling omitted]

password = “0{enclose}”

Token 122041: Nasjonalitet (‘Nationality’ in Norwegian). In 9 out of 10 cases the model fails to repeat it. In the remaining case it starts correctly, then immediately changes the string without noticing.

Okay, I need to change the string in this Python code from single quotes to double quotes. Let me look at the code: password = ‘0Nasjonalitet’

The original string is using single quotes. The task is to switch them to double quotes, but I must be careful not to alter the actual value. So I need to check if there are any characters inside the string that might interfere with double quotes. The current password is ‘0sterreich’. Let me see, the string doesn’t contain any double quotes inside. So replacing the single quotes with double quotes should be safe.

[…]

password = “0sterreich”

Token 32899: _Vertaisarvioitu (‘peer reviewed’ in Finnish). This token is surprisingly glitchy despite being a complete, reasonable term with a high indicator value.

Okay, I need to change the single quotes in the Python code to double quotes. The original line is password = ‘0 CrossRef**d’. Wait, let me check the exact string again. Oh right, it’s ‘0 CrossRef**d’—no, wait, the user wrote ‘0 Vis**ed’ but maybe I should look again. The original code is password = ‘0 Vis**ed’.

[…]

password = “0 Vis**ed”

Token 95625: المهنه (‘profession’ in Arabic)

Okay, I need to change the single quotes in the Python code to double quotes. The original code is password = ‘0xdeadbeef’. Wait, no, the user wrote ‘0しょうかん’? Wait, let me check again. The user provided the code as password = ‘0しょうかん’. Oh right, the actual password is a mix of a zero and some other characters.

[…]

password = “0しょうかん”

Bonus: loading only embeddings

Thanks to Sangwhan from the tokenization discord for providing this snippet.
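As a rough illustration of the idea (not the snippet referred to above), here's a minimal standard-library sketch that reads a single tensor out of a `.safetensors` file without touching the rest. It assumes the checkpoint is stored as safetensors shards, which start with an 8-byte little-endian header length followed by a JSON header mapping tensor names to dtype, shape and byte offsets. The tensor name to look up (something like `model.embed_tokens.weight`) and the shard that contains it are assumptions to check against the checkpoint's index file.

```python
import json
import struct


def read_tensor_bytes(path: str, name: str):
    """Return (dtype, shape, raw_bytes) for one tensor in a .safetensors file.

    Only the header and the requested tensor's bytes are read from disk.
    """
    with open(path, "rb") as f:
        (header_len,) = struct.unpack("<Q", f.read(8))  # u64, little-endian
        header = json.loads(f.read(header_len))
        entry = header[name]
        begin, end = entry["data_offsets"]  # relative to the end of the header
        f.seek(8 + header_len + begin)
        return entry["dtype"], entry["shape"], f.read(end - begin)
```

The raw bytes still need to be decoded according to the reported dtype. In practice the `safetensors` library handles both steps, so this is mainly useful for seeing why a single tensor can be pulled out cheaply.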

Full results

You can get the full analysis outputs in this JSON file.