Title: HowTo100M: Learning a Text-Video Embedding by Watching Hundred Million Narrated Video Clips

Authors: Antoine Miech, Dimitri Zhukov, Jean-Baptiste Alayrac, Makarand Tapaswi, Ivan Laptev, Josef Sivic

Published: 7th June 2019 (Friday) @ 20:48:19

Link: http://arxiv.org/abs/1906.03327v2

Abstract

Learning text-video embeddings usually requires a dataset of video clips with manually provided captions. However, such datasets are expensive and time consuming to create and therefore difficult to obtain on a large scale. In this work, we propose instead to learn such embeddings from video data with readily available natural language annotations in the form of automatically transcribed narrations. The contributions of this work are three-fold. First, we introduce HowTo100M: a large-scale dataset of 136 million video clips sourced from 1.22M narrated instructional web videos depicting humans performing and describing over 23k different visual tasks. Our data collection procedure is fast, scalable and does not require any additional manual annotation. Second, we demonstrate that a text-video embedding trained on this data leads to state-of-the-art results for text-to-video retrieval and action localization on instructional video datasets such as YouCook2 or CrossTask. Finally, we show that this embedding transfers well to other domains: fine-tuning on generic Youtube videos (MSR-VTT dataset) and movies (LSMDC dataset) outperforms models trained on these datasets alone. Our dataset, code and models will be publicly available at: www.di.ens.fr/willow/research/howto100m/.

Overview from HowTo100M website

See HowTo100M Learning a Text-Video Embedding by Watching Hundred Million Narrated Video Clips

HowTo100M:

- 136M video clips with captions sourced from 1.2M Youtube videos (15 years of video)

- 23k activities from domains such as cooking, hand crafting, personal care, gardening or fitness

Each video is associated with a narration available as subtitles automatically downloaded from Youtube.

Real-Time Natural Language search on HowTo100M:

We have implemented an online Text-to-Video retrieval demo that performs search and localization in videos using a simple Text-Video model trained on HowTo100M. The demo runs on a single CPU machine and implements FAISS approximate nearest neighbour search implementation.

Please note that to make the search through hundreds of millions of video clips run in real time, this demo uses a lighter (and less accurate) version of the model than the one described in the paper.

Query examples: Check voltage, Cut paper, Cut salmon, Measure window length, Animal dance — Source: https://www.di.ens.fr/willow/research/howto100m/

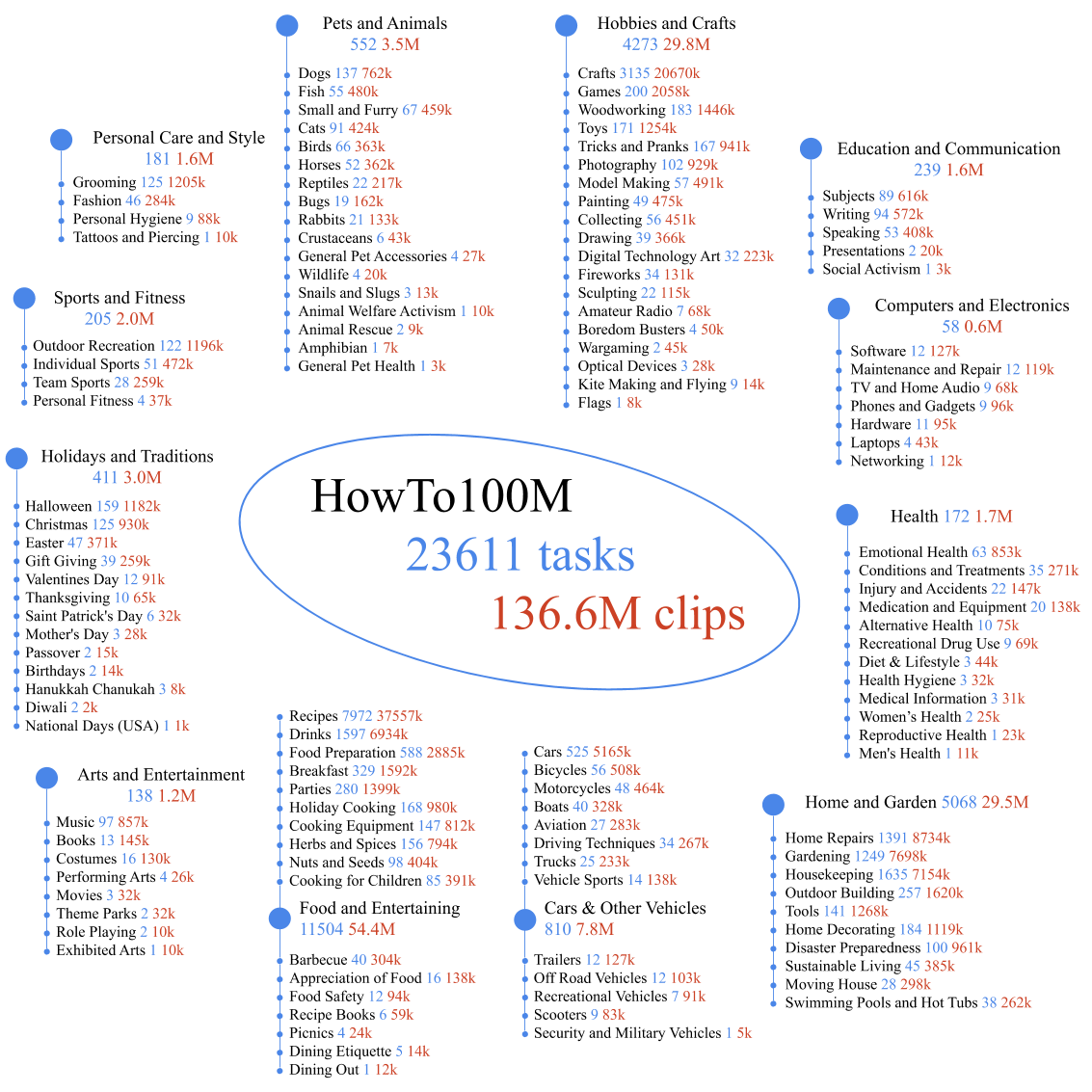

Dataset statistics graphic

Table 1: Comparison of existing video description datasets.

Table 1: Comparison of existing video description datasets. The size of our new HowTo100M dataset bypasses the size of largest available datasets by three orders of magnitude. M denotes million while k denotes thousand.

| Dataset | Clips Captions | Videos | Duration | Source | Year | |

|---|---|---|---|---|---|---|

| Charades [48] | 10k | 16k | 10,000 | 82h | Home | 2016 |

| MSR-VTT [58] | 10k | 200k | 7,180 | 40h | Youtube | 2016 |

| YouCook2 [67] | 14k | 14k | 2,000 | 176h | Youtube | 2018 |

| EPIC-KITCHENS [7] | 40k | 40k | 432 | 55h | Home | 2018 |

| DiDeMo [15] | 27k | 41k | 10,464 | 87h | Flickr | 2017 |

| M-VAD [52] | 49k | 56k | 92 | 84h | Movies | 2015 |

| MPI-MD [43] | 69k | 68k | 94 | 41h | Movies | 2015 |

| ANet Captions [26] | 100k | 100k | 20,000 | 849h | Youtube | 2017 |

| TGIF [27] | 102k | 126k | 102,068 | 103h | Tumblr | 2016 |

| LSMDC [44] | 128k | 128k | 200 | 150h | Movies | 2017 |

| How2 [45] | 185k | 185k | 13,168 | 298h | Youtube | 2018 |

| HowTo100M | 136M | 136M | 1.221M | 134,472h | Youtube | 2019 |

The HowTo100M dataset (§3)

Data collection (§3.1)

Visual tasks

- start by acquiring a large list of activities using WikiHow1 – an online resource that contains 120,000 articles on How to …

- for a variety of domains ranging from cooking to human relationships structured in a hierarchy

- We are primarily interested in “visual tasks” that involve some interaction with the physical world (e.g. Making peanut butter, Pruning a tree) as compared to others that are more abstract (e.g. Ending a toxic relationship, Choosing a gift).

- To obtain predominantly visual tasks, we limit them to one of 12 categories (listed in Table 2).

- We exclude categories such as Relationships and Finance and Business, that may be more abstract.

- We further refine the set of tasks, by filtering them in a semi-automatic way. In particular, we restrict the primary verb to physical actions, such as make, build and change, and discard non-physical verbs, such as be, accept and feel.

- This procedure yields 23,611 visual tasks in total.

Table 2: Number of tasks, videos and clips within each category

| Category | Tasks Videos | Clips | |

|---|---|---|---|

| Food and Entertaining | 11504 | 497k | 54.4M |

| Home and Garden | 5068 | 270k | 29.5M |

| Hobbies and Crafts | 4273 | 251k | 29.8M |

| Cars & Other Vehicles | 810 | 68k | 7.8M |

| Pets and Animals | 552 | 31k | 3.5M |

| Holidays and Traditions | 411 | 27k | 3.0M |

| Personal Care and Style | 181 | 16k | 1.6M |

| Sports and Fitness | 205 | 16k | 2.0M |

| Health | 172 | 15k | 1.7M |

| Education and Communications | 239 | 15k | 1.6M |

| Arts and Entertainment | 138 | 10k | 1.2M |

| Computers and Electronics | 58 | 5k | 0.6M |

| Total | 23.6k | 1.22M | 136.6M |

Instructional videos

- Search for YouTube videos related to the task by forming a query with how to preceding the task name (e.g. how to paint furniture).

- Choose videos that have English subtitles

- either uploaded manually, generated automatically by YouTube ASR, or generated automatically after translation from a different language by YouTube API

- Improve the quality and consistency of the dataset:

- restrict to the top 200 search results - latter may not be related to the query task

- Videos with less than 100 views are removed - often of poor quality or are amateurish

Paired video cliips and captions (§3.2)

Different from other datasets with clip-caption pairs (e.g. MSR-VTT), our captions are not manually annotated, but automatically obtained through the narration. Thus, they can be thought of as weakly paired.

Typical examples of incoherence include the content producer asking viewers to subscribe to their channel, talking about something unrelated to the video, or describing something before or after it happens.

HowTo100M is several orders of magnitude larger than existing datasets and contains an unprecedented duration (15 years) of video data. However, unlike previous datasets, HowTo100M does not have clean annotated captions. As the videos contain complex activities, they are relatively long with an average duration of 6.5 minutes. On average, a video produces 110 clip-caption pairs, with an average duration of 4 seconds per clip and 4 words (after excluding stop-words) per caption

Breakdown:

- average video duration of 6.5 minutes

- a video produces 110 clip-caption pairs (on average)

- average duration of 4 seconds per clip and 4 words (after excluding stop-words) per caption

They validate their base assumption that searching with How to queries on YouTube would result in mostly instructional videos: Randomly select 100 videos and label their type.

- 71% of the videos are found to be instructional

- 12% are vlogs

- another 7% are product reviews or advertisements

Note that vlogs, reviews and ads may also contain correspondences between visual content and narration. In particular, we noticed that objects shown on screen are often mentioned in narration. We do not discard such non-instructional videos, as they may still be useful for the learning the joint embedding.

Text-video joint embedding model

- Input: Given a set of video clips and associated captions

- and the and dimensional feature representation of a video clip and caption , respectively

- Clip and caption representation:

- The clip feature v consists of temporally max-pooled pre-extracted CNN features.

- The caption feature c is the output of a shallow 1D-CNN on top of pre-computed word embeddings.

- More details are given in Section 5.1.

- Goal: learn two mapping functions: and that respectively embed video and caption features into a common -dimensional space, such that the cosine similarity

is high when caption describes the video clip , and low otherwise.

- Model: In this work, we use the class of non-linear embedding functions used in [32], which are given by:

where , are learnable parameters, is an element-wise sigmoid activation and is the element-wise multiplication (Hadamard product).

In practice, and resulting in a model composed of 67 M parameters.

Note that the first term on the right-hand side in Equations (2) and (3) is a linear fully connected layer and the second term corresponds to a context gating function [31] with an output ranging between 0 and 1, which role is to modulate the output of the linear layer. As a result, this embedding function can model nonlinear multiplicative interactions between the dimensions of the input feature vector which has proven effective in other text-video embedding applications.

Loss. We train our embedding model using the max margin ranking loss [21, 32, 54, 55, 64]

Noise Contrastive Estimation (“Sampling strategy”): Similar to [15], we apply an intravideo negative sampling strategy to define N (i). We show in Section 5.3 that this approach is critical for good performance.

[this is NCE] More precisely, half of our negative pairs {(Vi , Cj ) : i 6= j}, are selected such that the video clip Vi and the caption Cj belong to the same original YouTube video (as (Vi , Ci)), while the other half are sampled from other YouTube videos.

Training time. Once the video and text features are extracted, training our embedding model on the full HowTo100M dataset is relatively fast and takes less than three days on a single Tesla P100 GPU.

Table 3: Impact of intra-video negative pairs during training. M: MSR-VTT, L: LSMDC, Y: YouCook2, C: CrossTask.

Negative sampling helps (M MSR-VTT difference is pretty marginal)

| Negative sampling | M (R®10) | L (R@10) | Y (R®10) | C (AVG Recall) |

|---|---|---|---|---|

| No intra-negative | 30.1 | 12.3 | 18.1 | 25.7 |

| With intra-negative | 29.6 | 14.0 | 24.8 | 33.6 |

Datasets and evaluation setups (§5.2)

- Action step localization

- CrossTask includes 18 tasks and 2.7k instructional videos with manually annotated action segments

- Videos from the test set of CrossTask are removed from the HowTo100M training set to ensure that they are not observed at training time

- Text-based video retrieval

- We evaluate our learned embedding using the standard recall metrics R@1, R@5, R@10 and the median rank (Median R).

- YouCook2

- MSR-VTT

- LSMDC