URGENT Challenge

Universality, Robustness, and Generalizability for EnhancemeNT

Interspeech URGENT 2025 Challenge

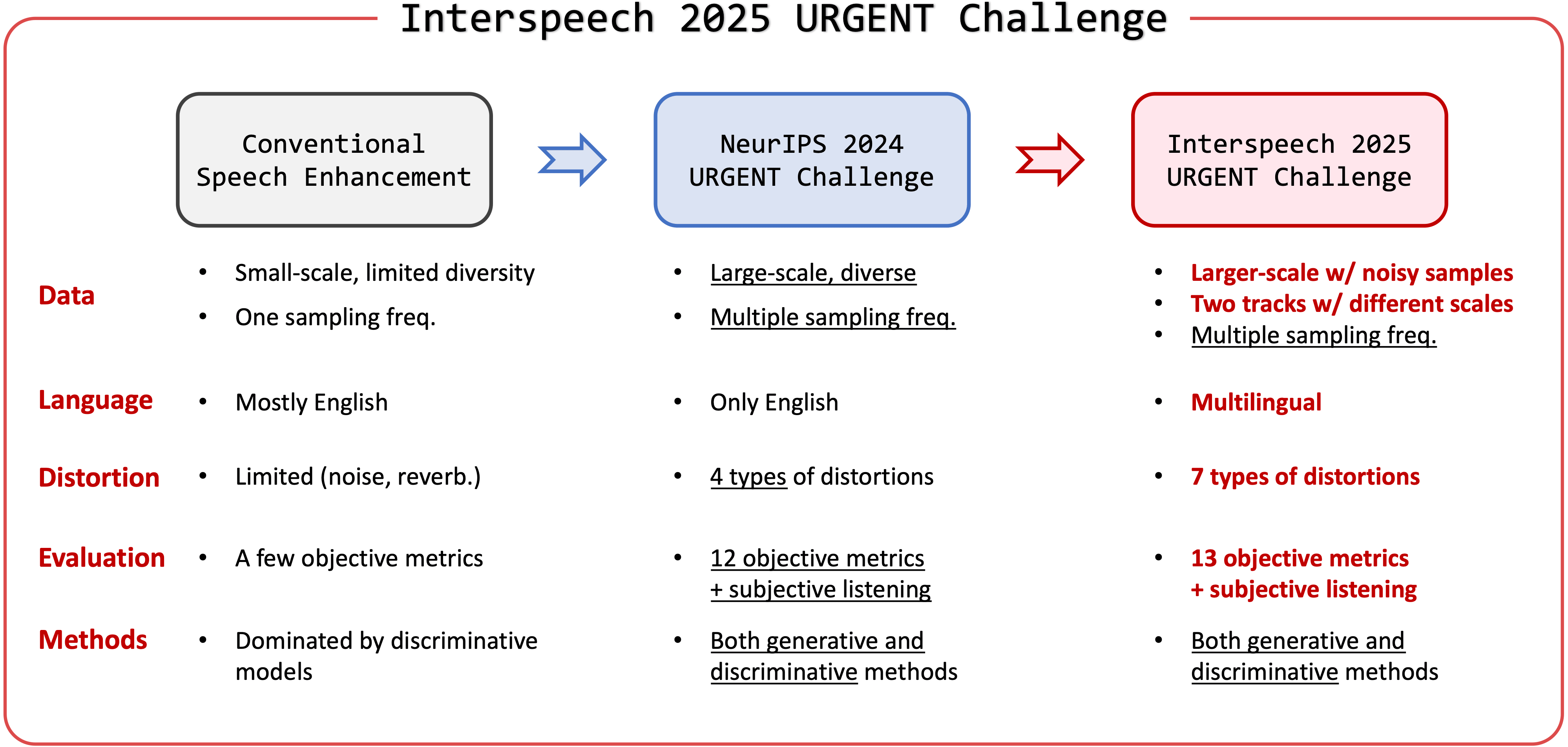

The Interspeech 2025 URGENT challenge (Universality, Robustness, and Generalizability for EnhancemeNT) is a speech enhancement challenge held at Interspeech 2025. We aim to build universal speech enhancement models for unifying speech processing in a wide variety of conditions.

Goal

The Interspeech 2025 URGENT challenge aims to bring more attention to constructing Universal, Robust and Generalizable speech EnhancemeNT models. This year’s challenge focuses on the following aspects:

- To address 7 types of distortions

- To improve the universality of SE systems, we consider the following distortions: additive noise, reverberation, clipping, bandwidth extension, codec artifacts, packet loss, and wind noise.

- To build a robust model using multilingual data

- Most SE research focuses on English data, and the language dependency of SE systems is still under-explored, particularly for generative models. This challenge includes 5 languages: English, German, French, Spanish, and Chinese.

- To leverage noisy but diverse data

- How to incorporate noisy speech in training is an important question for scaling up the amount and diversity of data. We intentionally included a noisy dataset (CommonVoice) so that participants can investigate how to leverage such data (e.g., data filtering or semi-supervised learning).

- To handle inputs with multiple sampling rates

- As in the first NeurIPS 2024 URGENT challenge, the model has to accept audio at any sampling rate. The dataset includes 8, 16, 22.05, 24, 44.1, and 48 kHz data.
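One simple way to meet the sampling-rate requirement is to wrap a fixed-rate model: resample the input to the model's working rate, enhance, and resample back. The sketch below illustrates that idea only and is not the challenge baseline. The function names, the 48 kHz working rate, and the assumption that the output should come back at the input rate are all hypothetical, and the linear interpolation is a placeholder with no anti-aliasing filter.

```python
from fractions import Fraction

# Sampling rates listed for the challenge data, in Hz.
SUPPORTED_RATES_HZ = (8000, 16000, 22050, 24000, 44100, 48000)


def resample_ratio(src_hz, dst_hz):
    """Return integers (up, down) such that dst_hz == src_hz * up / down."""
    ratio = Fraction(dst_hz, src_hz)
    return ratio.numerator, ratio.denominator


def resampled_length(num_samples, src_hz, dst_hz):
    """Number of output samples after resampling (rounded up)."""
    up, down = resample_ratio(src_hz, dst_hz)
    return -(-num_samples * up // down)  # ceiling division


def linear_resample(samples, src_hz, dst_hz):
    """Placeholder resampler: linear interpolation, no anti-aliasing."""
    if src_hz == dst_hz or not samples:
        return list(samples)
    last = len(samples) - 1
    out = []
    for i in range(resampled_length(len(samples), src_hz, dst_hz)):
        pos = i * src_hz / dst_hz      # position in the source signal
        lo = min(int(pos), last)
        hi = min(lo + 1, last)
        frac = pos - lo
        out.append(samples[lo] * (1.0 - frac) + samples[hi] * frac)
    return out


def enhance_any_rate(samples, rate_hz, model, model_rate_hz=48000):
    """Run a fixed-rate `model` (hypothetical callable) on audio of any rate."""
    x = linear_resample(samples, rate_hz, model_rate_hz)
    y = model(x)
    out = linear_resample(y, model_rate_hz, rate_hz)
    return out[:len(samples)]          # trim rounding surplus
```

In practice the `(up, down)` pair would be passed to a proper polyphase resampler rather than the linear interpolation used here. Models that are sampling-rate independent by design avoid the round trip altogether.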

Task Introduction

The task of this challenge is to build a single speech enhancement system to adaptively handle input speech with different distortions (corresponding to different SE subtasks) and different input formats (e.g., sampling frequencies) in different acoustic environments (e.g., noise and reverberation).

The training data will consist of several public corpora of speech, noise, and RIRs. Only the specified set of data can be used during the challenge. We encourage participants to apply data augmentation techniques such as dynamic mixing to achieve the best generalizability. The data preparation scripts are released in our GitHub repository (https://github.com/urgent-challenge/urgent2025_challenge/). Check the Data tab for more information.
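Dynamic mixing generally means creating noisy training examples on the fly, pairing a random clean utterance with a random noise clip at a random signal-to-noise ratio instead of using a fixed pre-mixed set. The sketch below covers additive noise only and is not the challenge's official simulation pipeline. The function names and the SNR range are assumptions chosen for illustration.

```python
import math
import random


def rms(x):
    """Root-mean-square level of a sequence of samples."""
    return math.sqrt(sum(s * s for s in x) / len(x))


def mix_at_snr(speech, noise, snr_db):
    """Add `noise` to `speech`, scaled so the mixture has the requested SNR."""
    # Repeat the noise clip if it is shorter than the speech, then crop.
    reps = -(-len(speech) // len(noise))  # ceiling division
    noise = (list(noise) * reps)[:len(speech)]
    speech_rms, noise_rms = rms(speech), rms(noise)
    if speech_rms == 0.0 or noise_rms == 0.0:
        return list(speech)               # nothing meaningful to scale
    # SNR_dB = 20 * log10(speech_rms / (gain * noise_rms))
    gain = speech_rms / (noise_rms * 10.0 ** (snr_db / 20.0))
    return [s + gain * n for s, n in zip(speech, noise)]


def dynamic_mix(speech_pool, noise_pool, rng, snr_range_db=(-5.0, 20.0)):
    """Draw one (noisy, clean, snr_db) training example at random.

    The SNR range is an illustrative assumption, not a challenge setting.
    """
    clean = rng.choice(speech_pool)
    noise = rng.choice(noise_pool)
    snr_db = rng.uniform(*snr_range_db)
    return mix_at_snr(clean, noise, snr_db), clean, snr_db
```

Calling `dynamic_mix(speech_pool, noise_pool, random.Random(0))` inside the data loader yields a different mixture every time it is called, so the model rarely sees the same noisy example twice.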

We will evaluate the enhanced audio with a variety of metrics to comprehensively understand the capacity of existing generative and discriminative methods. They include four categories of metrics (an additional category, subjective SE metrics, will be added in the final blind test phase to evaluate MOS):

- non-intrusive metrics (e.g., DNSMOS, NISQA) for reference-free speech quality evaluation.

- intrusive metrics (e.g., PESQ, STOI, SDR, MCD) for objective speech quality evaluation.

- downstream-task-independent metrics (e.g., Levenshtein phone similarity) for language-independent, speaker-independent, and task-independent evaluation.

- downstream-task-dependent metrics (e.g., speaker similarity, word accuracy or WAcc) for evaluation of compatibility with different downstream tasks.
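Two of the metrics named above, Levenshtein phone similarity and word accuracy, can both be built on edit distance between a reference and a hypothesis sequence. The sketch below is a minimal illustration and not the official scoring code. It assumes the phone or word sequences have already been produced by a recognizer, and normalizing by the reference length is an assumption that may differ from the official definition.

```python
def levenshtein(ref, hyp):
    """Edit distance (insertions, deletions, substitutions) between sequences."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, 1):
        cur = [i]
        for j, h in enumerate(hyp, 1):
            cur.append(min(prev[j] + 1,              # deletion
                           cur[j - 1] + 1,           # insertion
                           prev[j - 1] + (r != h)))  # substitution or match
        prev = cur
    return prev[-1]


def sequence_similarity(ref, hyp):
    """1 - edit_distance / len(ref); can be negative for very long hypotheses."""
    if not ref:
        return 1.0 if not hyp else 0.0
    return 1.0 - levenshtein(ref, hyp) / len(ref)


def phone_similarity(ref_phones, hyp_phones):
    """Similarity between phone sequences of the clean and enhanced speech."""
    return sequence_similarity(list(ref_phones), list(hyp_phones))


def word_accuracy(ref_text, hyp_text):
    """WAcc = 1 - WER, computed on whitespace-separated words."""
    return sequence_similarity(ref_text.split(), hyp_text.split())
```

For example, `word_accuracy("the cat sat down", "the cat sit down")` has one substitution in four reference words and evaluates to 0.75.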

More details about the evaluation plan can be found in the Rules tab.

Two tracks

The URGENT 2025 challenge has two tracks with different data scales. Participants may participate in Track 1, Track 2, or both. The same test set will be used in both tracks.

- Track 1: We limit the duration of some big corpora (MLS and CommonVoice). The first-track dataset has ~2.5k hours of speech and ~0.5k hours of noise.

- Track 2: We do not limit the duration of MLS and CommonVoice datasets, resulting in ~60k hours of speech.

We characterize the first track as the primary track, for comparing techniques trained on the same data, and the second track as a secondary one that provides insights into data scaling. Since the data scale in Track 2 is huge, we encourage participants to participate in Track 1 first. However, if you are interested in data scaling or data-hungry methods (e.g., speechLM or SSL-based methods), Track 2 welcomes you!

Note that although the dataset is large-scale, not all of the data has to be used for training. You can participate in Track 2 by adding more data to train the best system you developed in Track 1. Data selection techniques (e.g., based on diversity or estimated MOS scores) would be helpful for training the model efficiently.
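As one illustration of MOS-based data selection, the sketch below keeps the highest-scoring utterances that fit within an hour budget. It is not a recommended recipe. The record fields (`id`, `duration_s`, `est_mos`), the threshold, and the budget are hypothetical, and the estimated MOS values are assumed to come from some non-intrusive predictor run beforehand.

```python
def select_by_estimated_mos(utterances, min_mos, max_hours):
    """Greedily keep the best-scoring utterances within a duration budget.

    `utterances` is a list of dicts with hypothetical keys:
    'id', 'duration_s' (seconds) and 'est_mos' (estimated MOS).
    """
    budget_s = max_hours * 3600
    kept, total_s = [], 0.0
    # Visit utterances from highest to lowest estimated quality.
    for utt in sorted(utterances, key=lambda u: u["est_mos"], reverse=True):
        if utt["est_mos"] < min_mos:
            break                       # everything after this is worse
        if total_s + utt["duration_s"] > budget_s:
            continue                    # too long; a shorter one may still fit
        kept.append(utt)
        total_s += utt["duration_s"]
    return kept
```

A diversity-based criterion could replace or complement the MOS threshold, for example by capping the hours taken per speaker or per language.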

Communication

Join our Slack workspace for real-time communication.

Motivation

Recent decades have witnessed rapid development of deep learning-based speech enhancement (SE) techniques, with impressive performance in matched conditions. However, most conventional speech enhancement approaches focus only on a limited range of conditions, such as single-channel, multi-channel, anechoic, and so on. In many existing works, researchers tend to only train SE models on one or two common datasets, such as the VoiceBank+DEMAND (https://datashare.ed.ac.uk/handle/10283/2791) and Deep Noise Suppression (DNS) Challenge datasets.

The evaluation is often done only on simulated conditions that are similar to the training setting. Meanwhile, in earlier SE challenges such as the DNS series, the choice of training data was often left to the participants. As a result, models trained with a huge amount of private data were compared to models trained with a small public dataset. This greatly impedes a comprehensive understanding of the generalizability and robustness of SE methods. In addition, the model design may be biased towards a specific limited condition if only a small amount of data is used. The resultant SE model may also have limited capacity to handle more complicated scenarios.

Apart from conventional discriminative methods, generative methods have also attracted much attention in recent years. They are good at handling different distortions with a single model and tend to generalize better than discriminative methods. However, their capability and universality have not yet been fully understood through a comprehensive benchmark.

Meanwhile, recent efforts have shown the possibility of building a single system to handle various input formats, such as different sampling frequencies and numbers of microphones. However, a well-established benchmark covering a wide range of conditions is still missing, and no systematic comparison has been made between state-of-the-art (SOTA) discriminative and generative methods regarding their generalizability.

Existing speech enhancement challenges have fostered the development of speech enhancement models for specific conditions, such as denoising and dereverberation, speech restoration, packet loss concealment, acoustic echo cancellation, hearing aids, 3D speech enhancement, far-field multi-channel speech enhancement for video conferencing, unsupervised domain adaptation for denoising, and audio-visual speech enhancement. These challenges have greatly enriched the corpora available for speech enhancement studies. However, a challenge that can benchmark the generalizability of speech enhancement systems across a wide range of conditions is still lacking.

Similar issues can also be observed in other speech tasks such as automatic speech recognition (ASR), speech translation (ST), speaker verification (SV), and spoken language understanding (SLU). Among them, speech enhancement is particularly vulnerable to mismatches since it relies heavily on paired clean/noisy speech data to achieve strong performance. Unsupervised speech enhancement, which does not require ground-truth clean speech, has been proposed to address this issue, but it often brings benefit only in a final fine-tuning stage. Therefore, we focus on speech enhancement in this challenge to address the aforementioned problems.