Writing C for curl

It is a somewhat common question to me: how do we write C in curl to make it safe and secure for billions of installations? Here are some of the precautions we take and decisions we make. There is no silver bullet, just guidelines. As I think you can see for yourself below, they are also neither strange nor surprising.

The ‘c’ in curl does not and never did stand for the C programming language, it stands for client.

Disclaimer

This text does in no way mean that we don’t occasionally merge security related bugs. We do. We are human. We make mistakes. Then we fix them.

Testing

We write as many tests as we can. We run all the static code analyzer tools we can on the code – frequently. We run fuzzers on the code non-stop.

C is not memory-safe

We are certainly not immune to memory related bugs, mistakes or vulnerabilities. We count about 40% of our security vulnerabilities to date to have been the direct result of us using C instead of a memory-safe language alternative. This is however a much lower number than the 60-70% that are commonly repeated, originating from a few big companies and projects. If this is because of a difference in counting or us actually having a lower amount of C problems, I cannot tell.

Over the last five years, we have received no reports identifying a critical vulnerability and only two of them were rated at severity high. The rest (60 something) have been at severity low or medium.

We currently have close to 180,000 lines of C89 production code (excluding blank lines). We stick to C89 for widest possible portability and because we believe in continuous non-stop iterating and polishing and never rewriting.

Readability

Code should be easy to read. It should be clear. No hiding code under clever constructs, fancy macros or overloading. Easy-to-read code is easy to review, easy to debug and easy to extend.

Smaller functions are easier to read and understand than longer ones, thus preferable.

Code should read as if it was written by a single human. There should be a consistent and uniform code style all over, as that helps us read code better. Wrong or inconsistent code style is a bug. We fix all bugs we find.

We have tooling that verifies basic code style compliance.

Narrow code and short names

Code should be written narrow. It is hard on the eyes to read long lines, so we enforce a strict 80 column maximum line length. We use two-space indents to still allow us to do some amount of indent levels before the column limit becomes a problem. If the indent level becomes a problem, maybe the function should be split up into several sub-functions instead?

Also related: identifiers and names (local ones in particular) should be short. Long names are hard to read, especially if there are multiple ones that are similar. Not to mention that they can get hard to fit within 80 columns after some amount of indents.

So many people will now joke and say something about wide screens being available and what not but the key here is readability. Wider code is harder to read. Period. The question could possibly be exactly where to draw the limit, and that’s a debate for every project to have.

Warning-free

While it should be natural to everyone already, we of course build all curl code entirely without any compiler warning in any of the 220+ CI jobs we perform. We build curl with all the most picky compiler options that exist with the set of compilers we use, and we silence every warning that appears. We treat every compiler warning as an error.

Avoid “bad” functions

There are some C functions that are just plain bad because of their lack of boundary controls or local state and we avoid them (gets, sprintf, strcat, strtok, localtime, etc).

There are some C functions that are complicated in other ways. They have too open ended functionality or do things that often end up problematic or just plain wrong; they easily lead us into making mistakes. We avoid sscanf and strncpy for those reasons.

We have tooling that bans the use of these functions in our code. Trying to introduce use of one of them in a pull request causes CI jobs to turn red and alert the author about their mistake.
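As a rough illustration of what replacing an unbounded copy can look like, here is a minimal sketch in plain C. The function name `copy_bounded` is hypothetical and is not one of curl's internal helpers; the point is only that the destination size is always passed along and an input that does not fit is rejected rather than truncated or written out of bounds.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical helper, not a curl function: copy 'src' into 'dst' only if
   the whole string, including its null terminator, fits in 'dstsize'
   bytes. Returns 0 on success and 1 if the input was rejected. */
int copy_bounded(char *dst, size_t dstsize, const char *src)
{
  size_t len = strlen(src);
  if(len >= dstsize)
    return 1; /* would not fit: refuse instead of truncating */
  memcpy(dst, src, len + 1); /* the + 1 copies the terminator too */
  return 0;
}
```

A caller checks the return code and bails out on failure, which keeps the "what if it does not fit" decision visible at every call site.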

Buffer functions

Years ago we found ourselves having made several mistakes in code that was dealing with different dynamic buffers. We had too many separate implementations working on dynamically growing memory areas. We unified this handling with a new set of internal helper functions for growing buffers and made sure we only use these. This drastically reduces the need for realloc(), which helps us avoid mistakes related to that function.

Each dynamic buffer also has its own maximum size set, which in its simplicity also helps catch mistakes. In the current libcurl code, we have 80 something different dynamic buffers.
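To make the idea of a growing buffer with its own maximum size concrete, here is a minimal sketch. All names (`struct dbuf`, `dbuf_init`, `dbuf_add`, `dbuf_free`) are hypothetical and this is not curl's internal API; it only shows how a single implementation can own the realloc() logic and enforce a per-buffer cap.

```c
#include <stddef.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical names throughout; this is not curl's internal API. */
struct dbuf {
  char *ptr;    /* allocated memory, NULL when empty */
  size_t len;   /* bytes in use, not counting the null terminator */
  size_t alloc; /* bytes allocated */
  size_t max;   /* hard upper limit for 'alloc' */
};

void dbuf_init(struct dbuf *b, size_t max)
{
  b->ptr = NULL;
  b->len = 0;
  b->alloc = 0;
  b->max = max;
}

void dbuf_free(struct dbuf *b)
{
  free(b->ptr);
  b->ptr = NULL;
  b->len = 0;
  b->alloc = 0;
}

/* Append 'n' bytes and keep the content null-terminated. Returns 0 on
   success, 1 on error. On error the buffer is freed, so the caller can
   bail out without a separate cleanup step. */
int dbuf_add(struct dbuf *b, const void *data, size_t n)
{
  size_t need;
  if(n >= b->max || b->len >= b->max - n) {
    /* len + n + 1 would exceed max; checked without overflowing */
    dbuf_free(b);
    return 1;
  }
  need = b->len + n + 1; /* room for the null terminator */
  if(need > b->alloc) {
    size_t newsize = b->alloc ? b->alloc : 32;
    char *p;
    while(newsize < need) {
      if(newsize > b->max / 2) {
        newsize = b->max; /* clamp: max is known to be >= need here */
        break;
      }
      newsize *= 2;
    }
    if(newsize > b->max)
      newsize = b->max;
    p = realloc(b->ptr, newsize);
    if(!p) {
      dbuf_free(b);
      return 1;
    }
    b->ptr = p;
    b->alloc = newsize;
  }
  memcpy(b->ptr + b->len, data, n);
  b->len += n;
  b->ptr[b->len] = 0;
  return 0;
}
```

With the cap set at init time, a runaway loop that keeps appending fails early with an error instead of consuming memory until something else breaks.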

Parsing functions

I mentioned how we don’t like sscanf. It is a powerful function for parsing, but it often ends up parsing more than what the user wants (for example more than one space even if only one should be accepted) and it has weak (non-existing) handling of integer overflows. Lastly, it steers users into copying parsed results around unnecessarily, leading to superfluous uses of local stack buffers or short-lived heap allocations.

Instead we introduced another set of helper functions for string parsing, and over time we switch all parser code in curl over to using this set. It makes it easier to write strict parsers that only match exactly what we want them to match, avoid extra copies/mallocs and it does strict integer overflow and boundary checks better.
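The following sketch shows the flavor of such strict parsing in plain C. The names `parse_num` and `parse_single_space` are hypothetical, not curl's helpers: the number parser accepts digits only and detects overflow before it happens, and the space parser accepts exactly one space.

```c
/* Hypothetical helper: parse an unsigned decimal number at *strp. Accepts
   digits only (no sign, no leading whitespace) and advances *strp past
   them. Returns 0 on success, 1 if there is no digit, 2 if the value
   would exceed 'max'. */
int parse_num(const char **strp, unsigned long *nump, unsigned long max)
{
  const char *p = *strp;
  unsigned long num = 0;
  if(*p < '0' || *p > '9')
    return 1;
  while(*p >= '0' && *p <= '9') {
    unsigned long digit = (unsigned long)(*p - '0');
    /* reject before computing num * 10 + digit, so nothing can wrap */
    if(digit > max || num > (max - digit) / 10)
      return 2;
    num = num * 10 + digit;
    p++;
  }
  *nump = num;
  *strp = p;
  return 0;
}

/* Hypothetical helper: accept exactly one space. Returns 0 on success. */
int parse_single_space(const char **strp)
{
  if(**strp != ' ')
    return 1;
  (*strp)++;
  return 0;
}
```

Parsing a string such as "200 OK" then becomes a short chain of calls that each either consume exactly what is allowed or return an error, with the result pointing into the original string rather than into a copy.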

Monitor memory function use

Memory problems often involve a dynamic memory allocation followed by a copy of data into the allocated memory area. If the allocation and the copy are both done correctly there is no problem, but if either of them is wrong things can go bad. Therefore we aim toward minimizing that pattern. We rather favor strdup and memory duplication that allocates and copies data in the same call – or uses of the helper functions that may do these things behind their APIs. We run a daily updated graph in the curl dashboard that shows memory function call density in curl. Ideally, this plot will keep falling over time.

Memory function call density in curl production code over time

It can perhaps also be added that we avoid superfluous memory allocations, in particular in hot paths. A large download does not need any more allocations than a small one.
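The preference for allocating and copying in one call can be sketched like this. The name `dup0` is hypothetical; the idea is simply that the size computation, the allocation and the copy live in one small function instead of being repeated at every call site.

```c
#include <stddef.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical helper: duplicate 'len' bytes from 'src' and add a null
   terminator. Returns NULL on failure; the caller frees the result. */
char *dup0(const char *src, size_t len)
{
  char *p;
  if(len == (size_t)-1)
    return NULL; /* len + 1 would wrap around */
  p = malloc(len + 1);
  if(!p)
    return NULL;
  if(len)
    memcpy(p, src, len);
  p[len] = 0;
  return p;
}
```

A call site then has one thing to check, the returned pointer, and no opportunity to get the allocation size and the copy length out of sync.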

Double-check multiplications

Integer overflows are another area of concern. Every arithmetic operation needs to be done with certainty that it does not overflow. This is unfortunately still mostly manual labor, left for human reviews to detect.
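One common way to double-check a multiplication in portable C is to test against the maximum value before multiplying. This is a generic sketch with a hypothetical name (`mul_size`), not a description of how curl does it everywhere.

```c
#include <stddef.h>

/* Hypothetical helper: multiply two sizes, detecting overflow before it
   happens. Returns 0 and stores the product on success, 1 on overflow. */
int mul_size(size_t a, size_t b, size_t *out)
{
  /* (size_t)-1 is the largest size_t value */
  if(a && b > (size_t)-1 / a)
    return 1;
  *out = a * b;
  return 0;
}
```

The division happens first, so the check itself cannot overflow, and a caller computing for example `count * element_size` for an allocation gets an error instead of a too-small buffer.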

64-bit support guaranteed

In early 2023 we dropped support for building curl on systems without a functional 64-bit integer type. This simplifies a lot of code and logic. Integer overflows are less likely to trigger and there is no risk that authors accidentally believe they do 64-bit arithmetic while it could end up being 32-bit in some rare builds like could happen in the past. Overflows and mistakes can still happen if using the wrong type of course.

Maximum string length

To help us avoid mistakes on strings, in particular with integer overflows, but also with other logic, we have a general check of all string inputs to the library: they do not accept strings longer than a set limit. We deem that any string set that is longer is either just a blatant mistake or some kind of attempt (attack?) to trigger something weird inside the library. We return error on such calls. This maximum limit is right now eight megabytes, but we might adjust this in the future as the world and curl develop.
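A length gate like this can be very small. The sketch below uses a hypothetical function name and takes the limit as a parameter; the text says the current limit is eight megabytes, and the exact byte count a caller would pass is left as an assumption.

```c
#include <stddef.h>

/* Hypothetical check: returns 0 if 'str' is at most 'max' bytes long (not
   counting the null terminator) and 1 if it is longer. The scan stops as
   soon as the limit is passed, so an oversized input is never walked to
   its end. */
int input_too_long(const char *str, size_t max)
{
  size_t i;
  for(i = 0; i <= max; i++) {
    if(!str[i])
      return 0; /* terminator found within the limit */
  }
  return 1;
}
```

Doing this once at the API boundary means internal code can assume string lengths are bounded, which in turn makes length arithmetic much harder to overflow.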

Keep master golden

At no point in time is it allowed to break master. We only merge code into master that we believe is clean, fine and runs perfectly. This still fails at times, but then we do our best at addressing the situation as quickly as possible.

Always check for and act on errors

In curl we always check for errors and we bail out without leaking any memory if (when!) they happen. This includes all memory operations, I/O, file operations and more. All calls.
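A typical shape for "check everything and bail out without leaking" in C is a single failure label that releases whatever was acquired so far. The struct and function names below are hypothetical and only illustrate the pattern.

```c
#include <stdlib.h>
#include <string.h>

/* Hypothetical type for the example */
struct pair {
  char *name;
  char *value;
};

/* Safe to call with NULL or with a partially filled pair */
void pair_free(struct pair *p)
{
  if(p) {
    free(p->name);
    free(p->value);
    free(p);
  }
}

/* Duplicate a string with malloc; returns NULL on failure */
char *dupstr(const char *s)
{
  size_t len = strlen(s) + 1;
  char *d = malloc(len);
  if(d)
    memcpy(d, s, len);
  return d;
}

/* Returns 0 and stores the new pair in *out. Returns 1 on any error, in
   which case nothing is leaked and *out is NULL. */
int pair_new(const char *name, const char *value, struct pair **out)
{
  struct pair *p;
  *out = NULL;
  if(!name || !value)
    return 1;
  p = calloc(1, sizeof(*p));
  if(!p)
    return 1;
  p->name = dupstr(name);
  if(!p->name)
    goto fail;
  p->value = dupstr(value);
  if(!p->value)
    goto fail;
  *out = p;
  return 0;
fail:
  pair_free(p); /* frees whatever was allocated so far */
  return 1;
}
```

Note that the function reports the failure to its caller and never exits or aborts on its own, in line with the point about libraries leaving that decision to the application.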

Some developers are used to modern operating systems basically not being able to return error for some of those, but curl runs in many environments with varying behaviors. Also, a system library cannot exit or abort on errors, it needs to let the application take that decision.

APIs and ABIs

Every function and interface that is publicly accessible must never be changed in a way that risks breaking the API or ABI. For this reason and to make it easy to spot the functions that need these extra precautions, we have a strict rule: public functions are prefixed with “curl_” and no other functions use that prefix.

Everyone can do it

Thanks to the process of human reviewers, plenty of automatic tools and an elaborate and extensive test suite, everyone can (attempt to) write curl code. Assuming you know C of course. The risk that something bad would go in undetected is roughly equal no matter who the author of the code is. The responsibility is shared.

Go ahead. You can do it!

Comments

Hacker news. Low Level on YouTube.

Welcome to another curl release.

Download it here.

Release presentation

Numbers

the 266th release

12 changes

48 days (total: 9,875)

305 bugfixes (total: 11,786)

499 commits (total: 34,782)

0 new public libcurl function (total: 96)

1 new curl_easy_setopt() option (total: 307)

1 new curl command line option (total: 268)

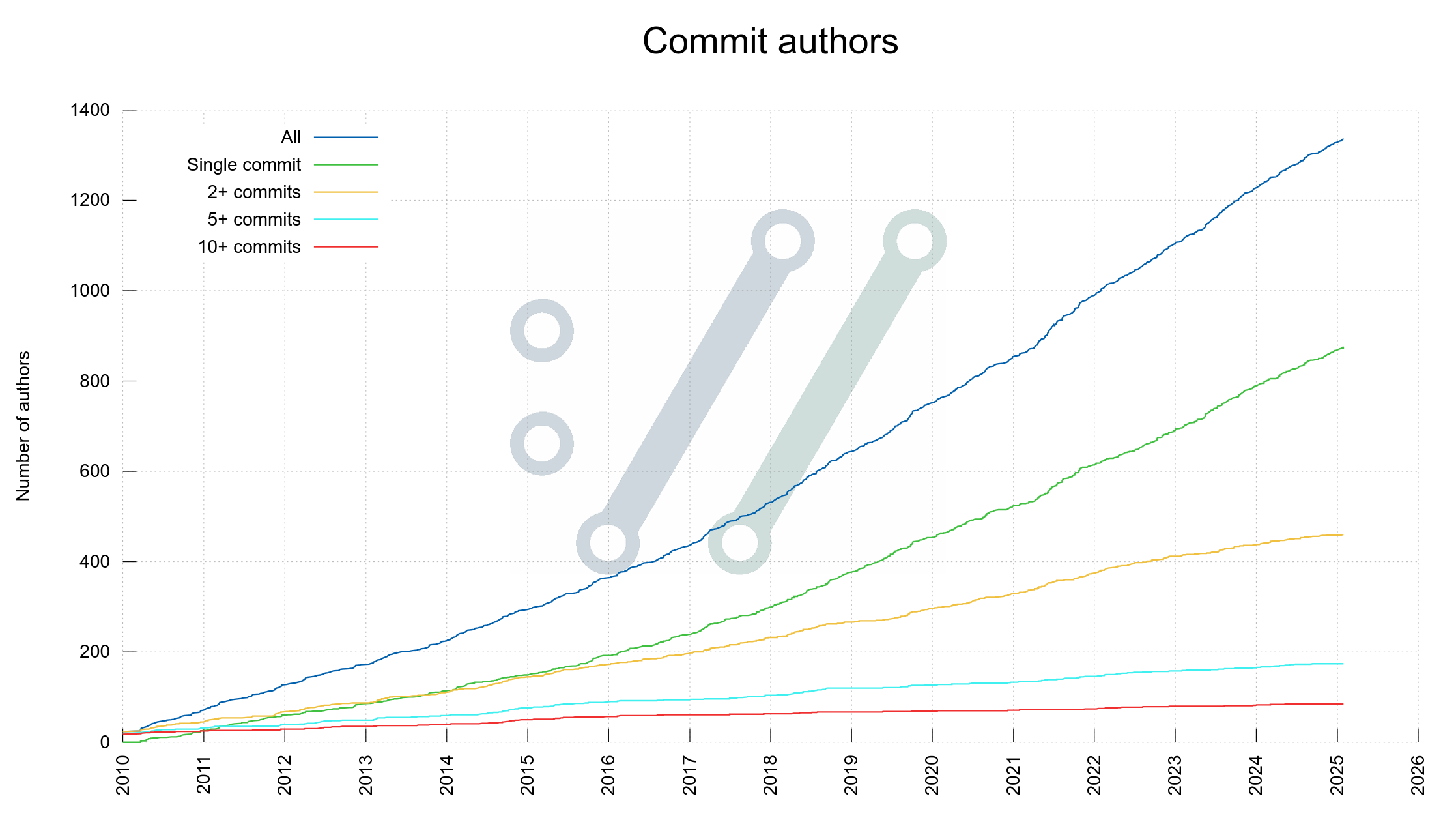

71 contributors, 37 new (total: 3,379)

41 authors, 16 new (total: 1,358)

0 security fixes (total: 164)

Changes

- curl: new write-out variable ‘tls_earlydata’

- curl: --url supports a file with URLs

- curl: add ’64dec’ function for base64 decoding

- IMAP: add CURLOPT_UPLOAD_FLAGS and --upload-flags

- add CURLFOLLOW_OBEYCODE and CURLFOLLOW_FIRSTONLY

- gnutls: set priority via --ciphers

- OpenSSL/quictls: support TLSv1.3 early data

- wolfSSL: support TLSv1.3 early data

- rustls: add support for CERTINFO

- rustls: add support for SSLKEYLOGFILE

- rustls: support ECH w/ DoH lookup for config

- rustls: support native platform verifier

Records

This release broke the old project record and is the first release ever to contain more than 300 bugfixes since the previous release. There were so many bugfixes landed that I decided to not even list my favorites in this blog post the way I have done in the past. Go read the full changelog, or watch the release video to see me talk about some of them.

Another project record broken in this release is the number of commits merged into the repository since the previous release: 501.

RFC 9460 describes a DNS Resource Record (RR) named HTTPS. To highlight that it is exactly this DNS record called HTTPS we speak of, we try to always call it HTTPS RR using both words next to each other.

curl currently offers experimental support for HTTPS RR in git. Experimental means you need to enable it explicitly in the build to have it present.

cmake -DUSE_HTTPSRR=ON ...

or

configure --enable-httpsrr ...

What is HTTPS RR for?

It is a DNS field that provides service meta-data about a target hostname. In many ways this is an alternative record to SRV and URI that were never really used for the web and HTTP. It also kind of supersedes the HSTS and alt-svc headers.

Here is some data it offers:

ECH config

ECH is short for Encrypted Client Hello and is the established system for encrypting the SNI field in TLS handshakes. As you may recall, the SNI field is one of the last remaining pieces in an HTTPS connection that is sent in the clear and thus reveals to active listeners with which site the client intends to communicate. ECH hides this by encrypting the name.

For ECH to be able to work, the client needs information prior to actually doing the handshake and this field provides this data. curl features experimental ECH support.

ECH has been in the works for several years, but has still not been published in an RFC.

ALPN list

A list of ALPN identifiers is provided. These identifiers basically tell the client which HTTP versions this server wants to use (over HTTPS). If this list contains HTTP/1.1 and we were asked to do an HTTP:// transfer, it implies the client should upgrade to HTTPS. Thus sort of replacing HSTS.

I think the key ALPN id provided here is ‘h3’ which tells the client this server supports HTTP/3 and we can try that already in the first flight. The previous option to properly upgrade to HTTP/3 would be to wait for an alt-svc response header that could instruct a subsequent connect attempt. That then delays the upgrade significantly since reusing the by then already existing HTTP/2 or HTTP/1 connection is typically preferred by clients.

Target

The service is offered on another hostname. Alt-svc style. It can also provide information about how to access this site for different “services” so that TCP/TLS connections go one way and QUIC connections another.

Port

It can tell the client that the service is hosted on a different port number.

IP addresses

It can provide a list of IPv4 and IPv6 addresses for the name. Supposedly to be used in case there are no A or AAAA fields retrieved at the same time.
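To summarize the pieces of data listed above, here is a simplified, hypothetical C view of what a client might keep after parsing an HTTPS RR. The struct layout, field names and the `MAX_ALPN` limit are illustrative assumptions, not taken from curl's source or from RFC 9460.

```c
#include <stddef.h>
#include <string.h>

#define MAX_ALPN 4 /* illustrative limit, an assumption for this sketch */

/* Hypothetical, simplified view of the data an HTTPS RR can carry */
struct httpsrr_info {
  const char *target;         /* alternative hostname, or NULL */
  int port;                   /* alternative port, or -1 if not given */
  const char *alpn[MAX_ALPN]; /* ALPN ids such as "h3" or "h2" */
  size_t num_alpn;            /* number of used entries in 'alpn' */
  const unsigned char *ech;   /* ECH config blob, or NULL */
  size_t ech_len;
  /* IPv4 and IPv6 address hints left out for brevity */
};

/* Returns 1 if the record lists the given ALPN id, otherwise 0 */
int httpsrr_has_alpn(const struct httpsrr_info *rr, const char *id)
{
  size_t i;
  for(i = 0; i < rr->num_alpn && i < MAX_ALPN; i++) {
    if(rr->alpn[i] && !strcmp(rr->alpn[i], id))
      return 1;
  }
  return 0;
}
```

A client could, for example, ask `httpsrr_has_alpn(&rr, "h3")` before the first connection attempt to decide whether trying HTTP/3 right away is worthwhile.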

Getting the DNS record

Adding support for HTTPS RR into an existing TCP client such as curl is unfortunately not as straight-forward as we would like.

A primary obstacle is that regular hostname resolves are done with the POSIX function getaddrinfo() and this function has no knowledge of or support for HTTPS RR, and such support cannot be expected to get added in the future either. The API does not really allow that. Clients like curl simply need to get the additional records using other means.

How curl does it

curl, or rather libcurl, has three different ways in source code to resolve hostnames out of which most builds feature two options:

- DoH (DNS-over-HTTPS) – curl sends DNS requests using HTTPS to a specified server. Users need to explicitly ask for this and point out the DoH URL.

- Using the local resolver. A libcurl build can then use either c-ares or getaddrinfo() to resolve host names, for when DoH or a proxy etc are not used.

DoH

The DoH code in libcurl is a native implementation (it does not use any third party libraries) and libcurl features code for both sending HTTPS RR requests and parsing the responses.

getaddrinfo

When using this API for name resolving, libcurl still needs to get built with c-ares as well to provide the service of asking for the HTTPS RR. libcurl then resolves the hostname “normally” with getaddrinfo() (fired off in a separate helper thread typically) and asks for the HTTPS RR using c-ares in parallel.

c-ares

When c-ares is used for name resolving, it also asks for the HTTPS RR at the same time. It means c-ares asks for A, AAAA and HTTPS records.

Existing shortcomings

We do not offer a runtime option to disable using HTTPS RR yet, so if you build with it enabled it will always be attempted. I think we need to provide such an option for the times the HTTPS record is either just plain wrong or the user wants to debug or just play around differently.

curl also does not yet handle every aspect of the quite elaborate HTTPS RR. We have decided to go forward slowly and focus our implementation on the parts of the resource field that seem to be deployed and in actual use.

Deployed

There are sites using these records already today. Cloudflare seems to provide it for a lot of its hosted sites for example. If you enable HTTPS RR today, there are plenty of opportunities to try it out. Both for regular service and protocol switching and also for ECH.

Please, go ahead and enable it in a build, try it out and tell us what works and what does not work. We would love to move forward on this and if we get confirmation from users that it actually works, we might be able to transition it out from experimental status soon.

Soon, in the first weekend of May 2025, we are gathering curl enthusiasts in a room in the wonderful city of Prague to talk about curl related topics over two full days. We call it curl up 2025.

curl up is our annual curl event and physical meetup. It is a low key and casual event that usually attracts somewhere between twenty and thirty people.

We sit down in a room and talk about curl related topics. Share experiences, knowledge, ideas and insights.

Get all your questions answered. Get all your curiosity satisfied. Tell the others what you do with curl and what you think the curl project should know.

Attend!

We want broad attendance. The curl developers sure, but we also love to see newcomers and curl users show up and share their stories, join the conversations and get the opportunity to meet the team and maybe learn new stuff from the wonderful world of Internet transfers.

The event is free to attend, you just need to register ahead of time. You find the registration link on the curl up 2025 information page.

Sponsor!

curl is a small and independent Open Source project. Your sponsorship could help us pay to get core developers to this conference and help cover other costs we have to run it. And your company would get cool exposure while you help us improve a little piece of internet infrastructure. Win win!

Physical meetings have merit

This is the only meetup in the real world we organize in the curl project. In my experience it serves as an incredible energy and motivation boost and it helps us understand each other better. We all communicate a little smoother once we have gotten to know “the persons” behind the online names or aliases.

After having met physically, we cooperate better.

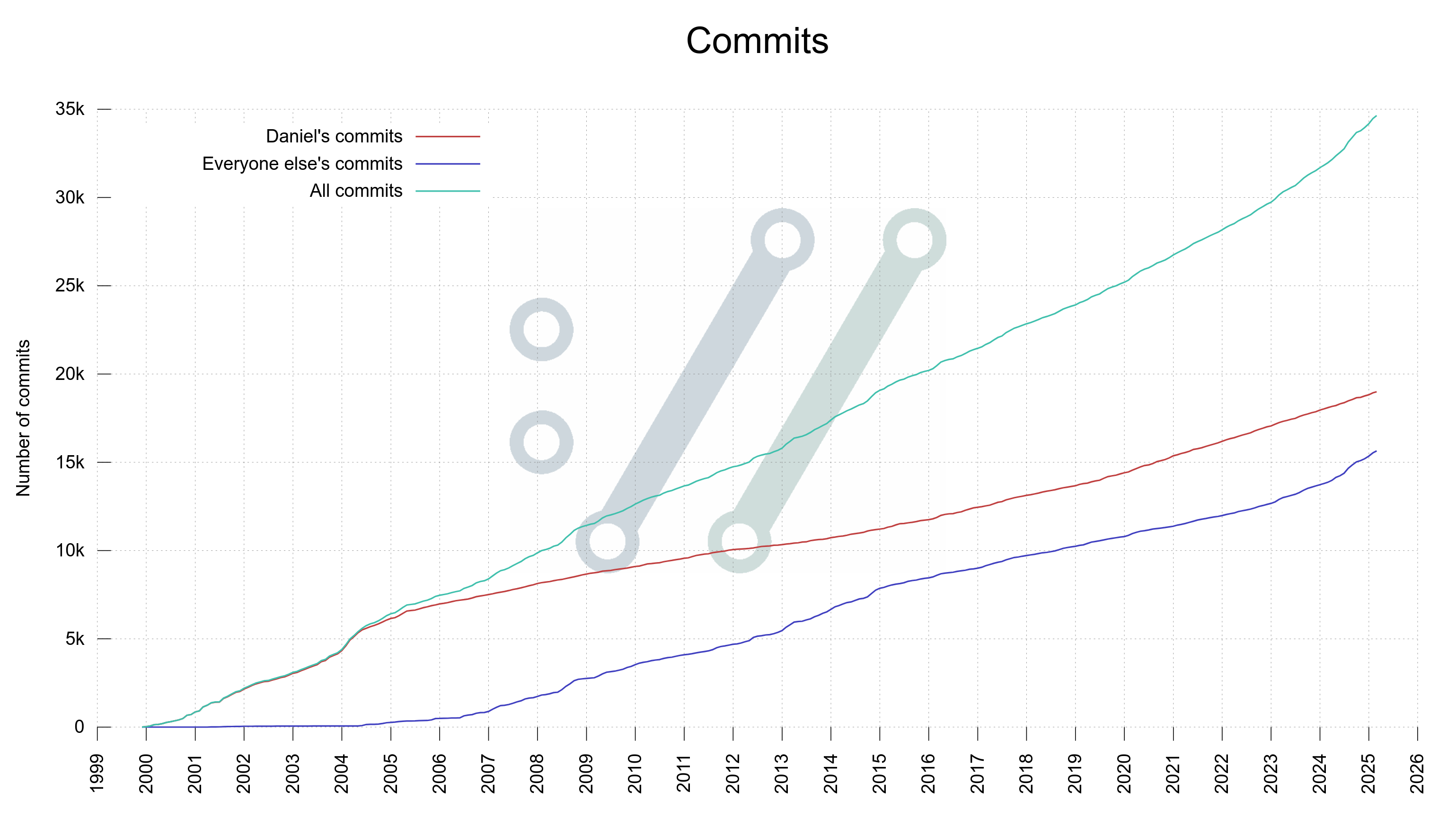

It has been 387 days since I announced having done 18k commits. This is commit number 19,000.

Looking at the graph below that is showing my curl commits and everyone else’s over time, it seems to imply that while I have kept my pace pretty consistently over the last few years, others in the curl project have stepped up their game and commit more.

Commits done in curl over time, by Daniel and by “everyone else”

This means that my share of the total number of commits keeps shrinking and also that I personally am nowadays often not the developer doing the most curl commits per month.

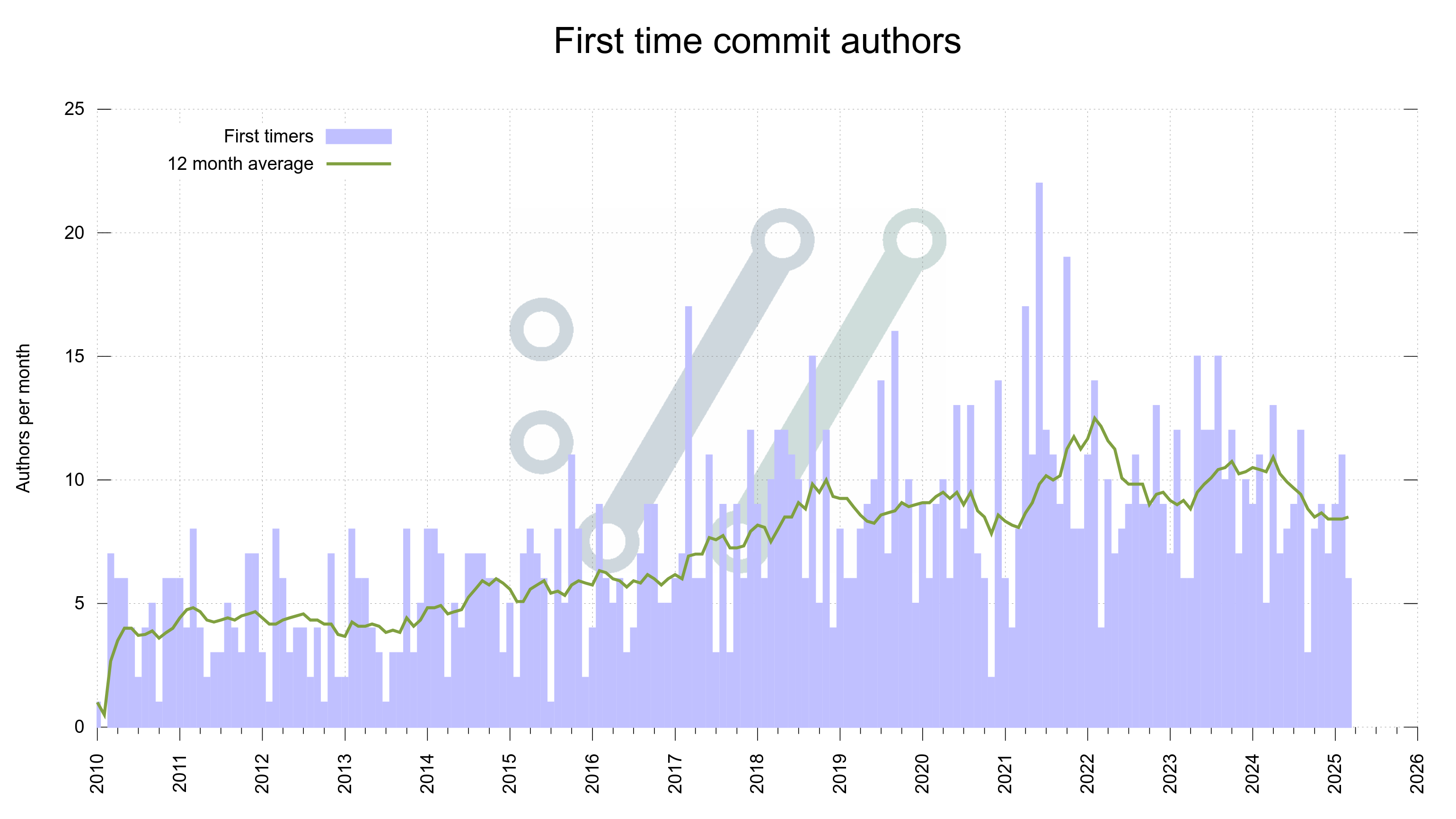

This is all good. We need even more contributors and people involved. We see on average about eight new commit authors every month.

Number of new commit authors per month in the curl project, over time.

The previous one thousand commits I did took me 422 days. These latest one thousand commits took me 387 days. If I manage to maintain this exact frequency, I will reach 20K commits on April 5 2026.

Before RFC 6265 was published in 2011, the world of cookies was a combination of anarchy and guesswork because the only “spec” there was was not actually a spec but just a brief text lacking a lot of details.

RFC 6265 brought order to a world of chaos. It was good. It made things a lot better. With this spec, it was suddenly much easier to write compliant and interoperable cookie implementations.

I think these are plain facts. I have written cookie code since 1998 and I thus know this from my own personal experience. Since I believe in open protocols and doing things right, I participated in the making of that first cookie spec. As a non-browser implementer I think I bring a slightly different perspective and different angle to what many of the other involved people have.

Consensus

The cookie spec was published by the IETF and it was created and written in a typical IETF process. Lots of the statements and paragraphs were discussed and even debated. Since there were many people and many opinions involved, of course not everything I think should have been included and stated in the spec ended up the way I wanted it to, but in a way that the consensus seemed to favor. That is just natural and the way of the process. Everyone involved accepts this.

I have admitted defeat, twice

The primary detail in the spec, or should I say one of the important style decisions, is one that I disagree with. A detail that I have tried to bring up again when the cookie spec was up for a revision and a new draft was made (still known as 6265bis since it has not become an official RFC yet). A detail that I have failed to get others to agree with me about to a sufficient degree to have it altered. I have failed twice. The update will ship with this feature as well.

Cookie basics

Cookies are part of (all versions of) HTTP but are documented and specified in a separate spec. It is a classic client-server setup where Set-Cookie: response headers are sent from a server to a client, the client stores the received cookies and sends them back to servers according to a set of rules and matching criteria using the Cookie: request header.

Set-Cookie

This is the key header involved here. So how does it work? What is the syntax for this header we need to learn so that we all can figure out how servers and clients should be implemented to do cookies interoperably?

As with most client-server protocols, one side generates this header, the other side consumes it. They need to agree on how this header works.

My problem is two

The problem is that this header’s syntax is defined twice in the spec. Differently.

Section 4.1 describes the header from server perspective while section 5.2 does it from a client perspective.

If you, like me, have implemented HTTP for almost thirty years you are used to reading protocol specifications and in particular HTTP related specifications. HTTP has numerous headers described and documented. No other HTTP documents describe the syntax for header fields differently in separate places. Why would they? They are just single headers.

This double-syntax approach comes as a surprise to many readers, and I have many times discussed cookie syntax with people who have read the 6265 document but only stumbled over and read one of the places and then walked away with only a partial understanding of the syntax. I don’t blame them.

The spec insists that servers should send a rather conservative Set-Cookie header but knowing what the world looks like, it simultaneously recommends the client side for the same header to be much more liberal because servers might not be as conservative as this spec tells the server to be. Two different syntaxes.

The spec tries to be prescriptive for servers: thou shall do it like this, but we all know that cookies were wilder than this at the time 6265 was published and because we know servers won’t stick to these “new” rules, a client can’t trust that servers are that nice but instead needs to accept a broader set of data. So clients are told to accept much more. A different syntax.

Servers do what works

As the spec tells clients to accept a certain syntax and widely deployed clients and cookie engines gladly accept this syntax, there is no incentive or motive for servers to change. The “do this if you are a good server” instruction serves as an ideal, but there is no particularly good way to push anyone in that direction because it works perfectly well to use the extended syntax that the spec says that the clients need to accept.

A spec explaining what is used

What I want? I want the Set-Cookie header to be described in a single place in the spec with a single unified syntax. The syntax that is used and that is interoperable on the web today.

It would probably even make the spec shorter, it would remove confusion and it would certainly remove the risk that people think just one of the places is the canonical syntax.

Will I bring this up again when the cookie spec is due for refresh again soon? Yes I will. Because I think it would make it a much better spec.

Do I accept defeat and accept that I am on the losing side in an argument when nobody else seems to agree with me? Yes to that as well. Just because I think like this does in no way mean that this is objectively right or that this is the way to go in a future cookie spec.

Heading towards curl release number 266 we have decided to spice up our release cycle with release candidates in an attempt to help us catch regressions better and earlier.

It has become painfully obvious to us in the curl development team that over the past few years we have done several dot-zero releases in which we shipped quite terrible regressions. Several times those regressions have been so bad or annoying that we felt obligated to do quick follow-up releases a week later to reduce friction and pain among users.

Every such patch release has caused pain in our souls and has worked as proof that we to some degree failed in our mission.

We have thousands of tests. We run several hundred CI jobs for every change that verify them. We simply have too many knobs, features, build configs, users and combinations of them all to be able to catch all possible mistakes ourselves.

Release candidates

Decades ago we sometimes did release candidates, but we stopped. We have instead shipped daily snapshots, which is basically what a release would look like, packaged every day and made available. In theory this should remove the need and use of release candidates as people can always just get the latest snapshots, try those out and report problems back to us.

We are also acutely aware of the fact that only releases get tested properly.

Are release candidates really going to make a difference? I don’t know. I figure it is worth a shot. Maybe it is a matter of messaging and gathering the troops around these specific snapshots and by calling out the need for the testing to get done, maybe it will happen at least to some extent?

Let’s attempt this for a while and then come back in a few years and evaluate if it has seemed to help or otherwise improve the regression rate or not.

Release cycle

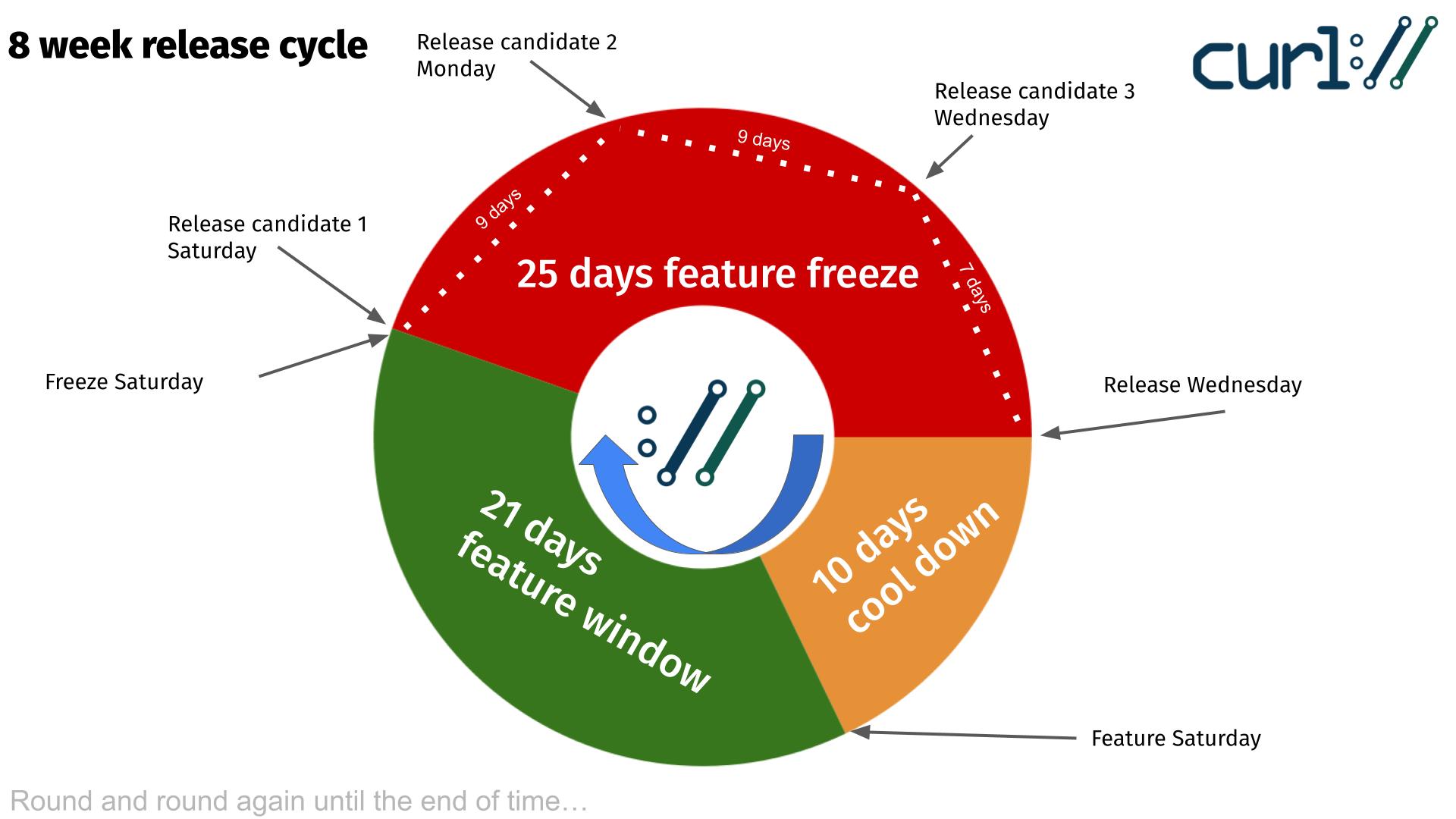

We have a standard release cycle in the curl project that is exactly eight weeks. When things run smoothly, we ship a new release on a Wednesday every 56 days.

The release cycle is divided into three periods, or phases, that control what kind of commits us maintainers are permitted to merge. Rules to help us ship solid software.

The curl release cycle, illustrated

Immediately after a release, we have a ten day cool down period during which we absorb reactions and reports from the release. We only merge bugfixes and we are prepared to do a patch release if we need to.

Ten days after the release, we open the feature window in which we allow new features and changes to the project. The larger things. Innovations, features etc. Typically these are the most risky things that may cause regressions. This is a three-week period and those changes that do not get merged within this window get another chance again next cycle.

The longest phase is the feature freeze that kicks in twenty-five days before the pending release. During this period we only merge bugfixes. It is intended to calm things down again and smooth all the frictions and rough corners we can find, to make the pending release as good as possible.

Adding three release candidates

The first release candidate (rc1) is planned to ship on the same day we enter feature freeze. From that day on, there will be no more new features before the release so all the new stuff can be checked out and tested. It does not really make any sense to do a release candidate before that date.

We will highlight this release candidate and ask that everyone who can (and wants to) tests this one out and reports every possible issue they find with it. This should be the first good opportunity to catch any possible regressions caused by the new features.

Nine days later we ship rc2. This will be done no matter what bugreports we had on rc1 or what possible bugs are still pending etc. This candidate will have additional bugfixes merged.

The final and third release candidate (rc3) is then released exactly one week before the pending release. A final chance to find nits and perfect the pending release.

I hope I don’t have to say this, but you should not use the release candidates in production, and they may contain more bugs than what a regular curl release normally does.
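The numbers in the release cycle and release candidate descriptions add up to a fixed schedule. The small sketch below restates them as day offsets counted from the previous release (day 0); the struct and function names are hypothetical and the code only does the arithmetic.

```c
/* Constants restating the numbers in the text */
#define CYCLE_DAYS    56 /* eight weeks between releases */
#define COOLDOWN_DAYS 10 /* cool down after a release */
#define FEATURE_DAYS  21 /* three-week feature window */
#define FREEZE_DAYS   25 /* feature freeze before the next release */

/* Hypothetical struct: day offsets from the previous release */
struct cycle {
  int feature_window_opens;
  int feature_freeze; /* rc1 ships on this day */
  int rc2;
  int rc3;
  int release;
};

void cycle_plan(struct cycle *c)
{
  c->feature_window_opens = COOLDOWN_DAYS;
  c->feature_freeze = CYCLE_DAYS - FREEZE_DAYS;
  c->rc2 = c->feature_freeze + 9; /* nine days after rc1 */
  c->rc3 = CYCLE_DAYS - 7;        /* one week before the release */
  c->release = CYCLE_DAYS;
}
```

This puts rc1 on day 31, rc2 on day 40 and rc3 on day 49 of the 56-day cycle, and the three phases (10 + 21 + 25 days) account for every day in it.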

Technically

The release candidates will be created exactly like a real release, except that there will not be any tags set in the git repository and they will not be archived. The release candidates are automatically removed after a few weeks.

They will be named curl-X.Y.Z-rcN, where X.Y.Z is the version of the pending release and N is the release candidate number. Running “curl -V” on this build will show “x.y.z-rcN” as the version. The libcurl includes will say it is version x.y.z, so that applications can test out preprocessor conditionals etc exactly as they will work in the real x.y.z release.

You can help!

You can most certainly help us here by getting one of the release candidates when they ship and try it out in your use cases, your applications, your pipelines or whatever. And let us know how it runs.

I will do something on the website to help highlight the release candidates once there is one to show, to help willing contributors find them.

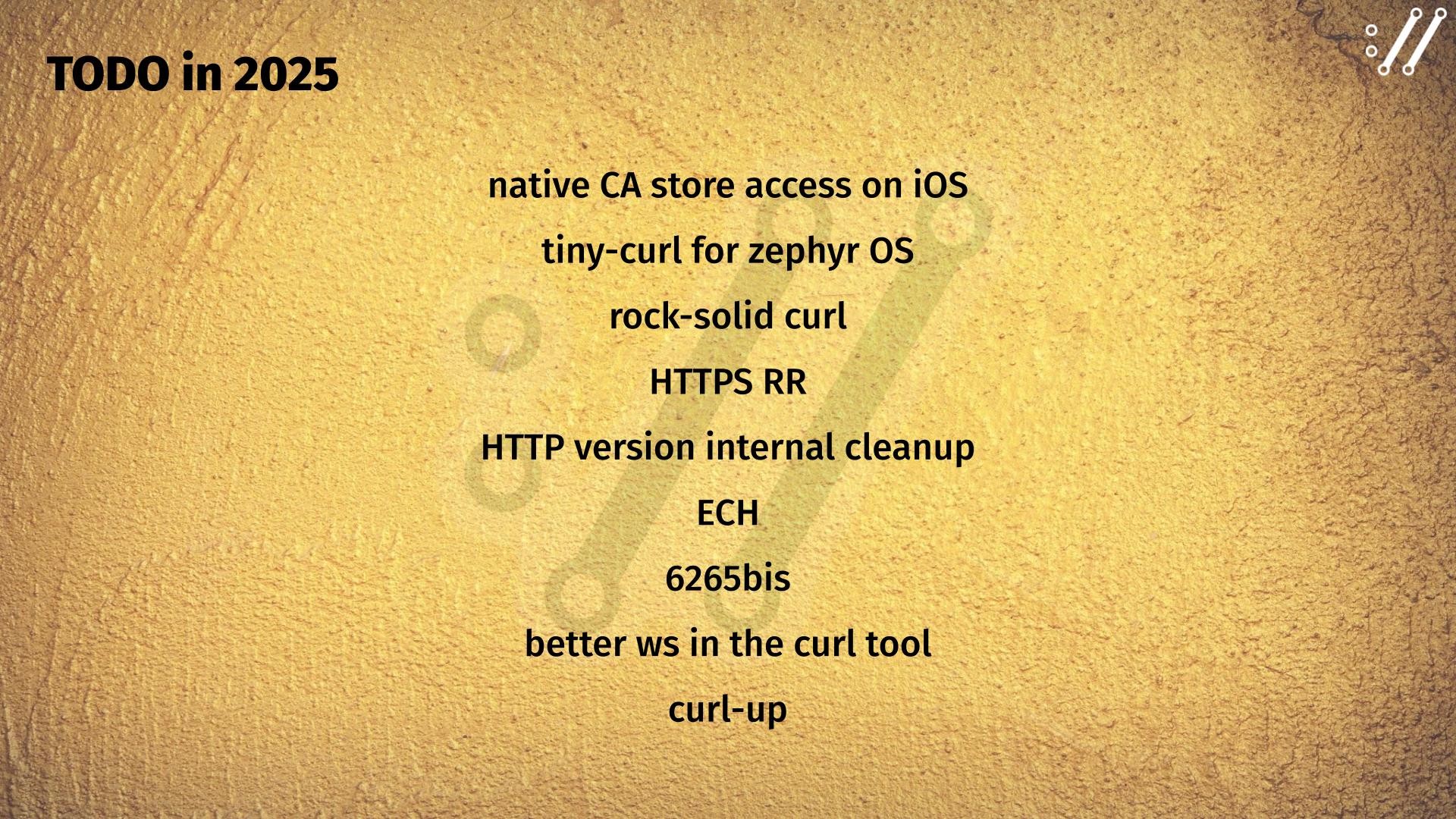

On March 6 2025 at 18:00 UTC, I am doing a curl roadmap webinar, talking through a set of features and things I want to see happen in curl during 2025.

This is an opportunity for you to both learn about the plans but also to provide feedback on said ideas. The roadmap is not carved in stone, nor is it a promise that these things truly will happen. Things and plans might change through the year. We might change our minds. The world might change.

The event will be done in parallel over twitch and Zoom. To join the Zoom version you need to sign up for it in advance. To join the live-streamed twitch version, you just need to show up.

Sign up here for Zoom

You will be able to ask questions and provide comments using either channel.

The planned roadmap overview as of a few days ago


We are doing a rerun of last year’s successful curl + distro online meeting. A two-hour discussion, meeting, workshop for curl developers and curl distro maintainers. Maybe this is the start of a new tradition?

Last year I think we had a very productive meeting that led to several good outcomes. In fact, just seeing some faces and hearing voices from involved people is good and helps to enhance communication and cooperation going forward.

The objective for the meeting is to make curl better in distros. To make distros do better curl. To improve curl in all and every way we think we can, together.

Everyone who feels this is a subject they care about is welcome to join. The meeting is planned to take place in the early evening European time, early morning west coast US time. With the hope that it covers a large enough number of curl interested people.

The plan is to do this on April 10, and all the details, planning and discussion items are kept on the dedicated wiki page for the event.

Feel free to add your own proposed discussion items, and if you feel inclined, add yourself as an intended participant. Feel free to help make this invite reach the proper people.

See you in April.

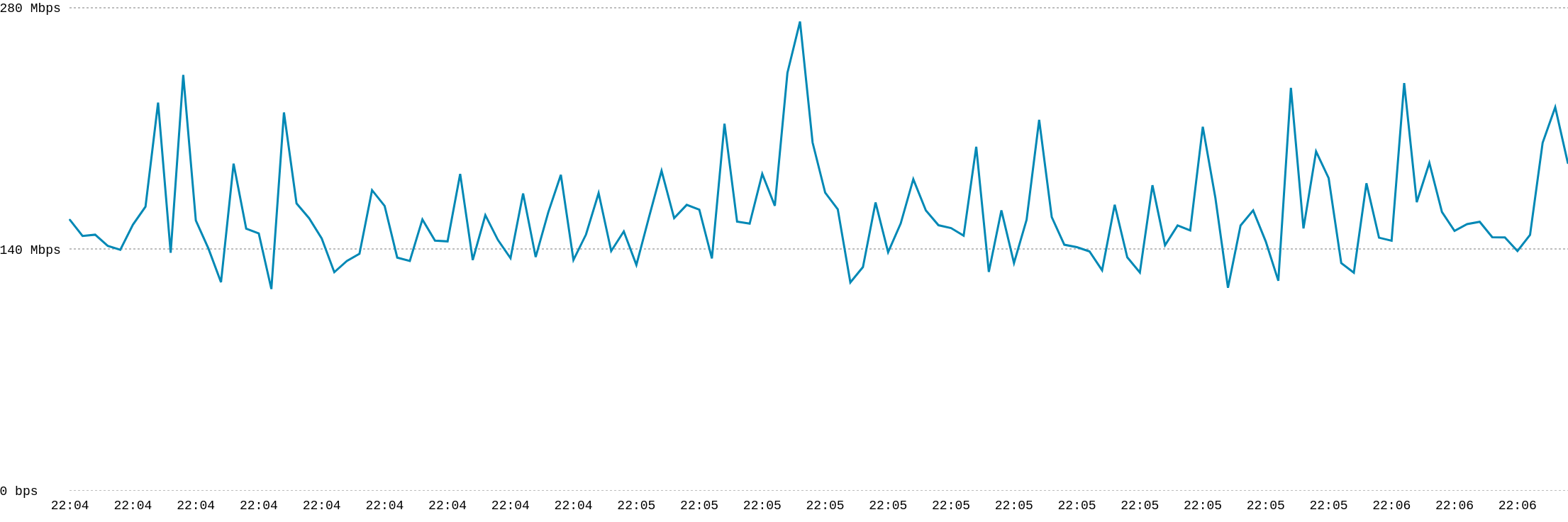

Data without logs sure leaves us open for speculations.

I took a quick look at what the curl.se website traffic situation looks like right now. Just as a curiosity.

Disclaimer: we don’t log website visitors at all and we don’t run any web analytics on the site, so we basically don’t know a lot about who does what on the site. This is done both for privacy reasons and for practical reasons. Managing logs for this setup is work I would rather avoid doing and paying for.

What we do have is a website that is hosted (fronted) by Fastly on their CDN network, and as part of that setup we have an admin interface that offers accumulated traffic data. We get some numbers, but without details and specifics.

Bandwidth

Over the last month, the site served 62.95 TB. This makes it average over 2TB/day. On the most active day in the period it sent away 3.41 TB.

This is the live bandwidth meter displayed while I was writing this blog post.

Requests

At 12.43 billion requests, it makes an average request transfer size 5568 bytes.
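The average above can be reproduced with one division. The sketch assumes that "TB" here means 2^40 bytes, which is the interpretation that matches the stated 5568 bytes; the function name is hypothetical.

```c
/* Average bytes per request. Assumption: one "TB" is 2^40 bytes, since
   that is the reading that reproduces the figure in the text. */
double avg_request_bytes(double terabytes, double requests)
{
  return terabytes * 1099511627776.0 / requests;
}
```

Calling `avg_request_bytes(62.95, 12.43e9)` gives a value between 5568 and 5569 bytes.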

Downloads

Since we don’t have logs, we can’t count curl downloads perfectly. But we do have stats for request frequency for objects of different sizes from the site, and in the category 1MB-10MB we basically only have curl tarballs.

1.12 million such objects were downloaded over the last month. 37,000 downloads per day, or about one curl tarball downloaded every other second around the clock.

Of course most curl users never download it from curl.se. The source archives are also offered from github.com and users typically download curl from their distro or get it installed with their operating system etc.

But…?

The average curl tarball size from the last 22 releases is 4,182,317 bytes. 3.99 MB.

1.12 million x 3.99 MB is only 4,362 gigabytes. Not even ten percent of the total traffic.

Even if we count the average size of only the zip archives from recent releases, 6,603,978 bytes, it only makes 6,888 gigabytes in total. Far away from the almost 63 terabytes total amount.
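The two tarball estimates follow from the same multiplication. In this sketch a "gigabyte" is assumed to be 2^30 bytes, which is what reproduces the 4,362 and 6,888 figures; the function name is hypothetical.

```c
/* Total volume for 'downloads' objects of 'avg_bytes' each, expressed in
   units of 2^30 bytes (assumed to be what "gigabytes" means here). */
double total_gigabytes(double downloads, double avg_bytes)
{
  return downloads * avg_bytes / 1073741824.0;
}
```

With 1.12 million downloads, an average size of 4,182,317 bytes lands between 4,362 and 4,363, and 6,603,978 bytes lands between 6,888 and 6,889. Both are well below the roughly 63 terabytes served in total.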

This, combined with low average transfer size per request, seems to indicate that other things are transferred off the site at fairly extreme volumes.

Origin offload

99.77% of all requests were served by the CDN without reaching the origin site. I suppose this is one of the benefits of us having mostly a static site without cookies and dynamic choices. It allows us to get a really high degree of cache hits and content served directly from the CDN servers, leaving our origin server only a light load.

Regions

Fastly is a CDN with access points distributed over the globe, and the curl website is anycasted, so the theory is that users access servers near them. In the same region. If we assume this works, we can see where most traffic to the curl website comes from.

The top-3:

- North America – 48% of the bandwidth

- Europe – 24%

- Asia – 22%

TLS

Now I’m not the expert on how exactly the TLS protocol negotiation works with Fastly, so I’m guessing a little here.

It is striking that 99% of the traffic uses TLS 1.2. It seems to imply that a vast amount of it is not browser-based, as all popular browsers these days mostly negotiate TLS 1.3.

HTTP

Seemingly agreeing with my TLS analysis, the HTTP version distribution also seems to point to a vast amount of traffic not being humans in front of browsers. They prefer HTTP/3 these days, and if that causes problems they use HTTP/2.

98.8% of the curl.se traffic uses HTTP/1, 1.1% uses HTTP/2 and only the remaining tiny fraction of less than 0.1% uses HTTP/3.

Downloads by curl?

I have no idea how large a share of the downloads is actually done using curl. A fair share is my guess. The TLS + HTTP data imply a huge amount of bot traffic, but modern curl versions would at least select HTTP/2 unless the users guiding it specifically opted not to.

What is all the traffic then?

In the past, we have seen rather extreme traffic volumes from China downloading the CA cert store we provide, but these days the traffic load seems to be fairly evenly distributed over the world. And over time.

According to the stats, objects in the 100KB-1MB range were downloaded 207.31 million times. That is bigger than our images on the site and smaller than the curl downloads. Exactly the range for the CA cert PEM. The most recent one is at 247KB. Fits the reasoning.

A 247 KB file downloaded 207 million times equals 46 TB. Maybe that’s the explanation?

Sponsored

The curl website hosting is graciously sponsored by Fastly.