LayerNorm — PyTorch 2.4 documentation

class torch.nn.LayerNorm(normalized_shape, eps=1e-05, elementwise_affine=True, bias=True, device=None, dtype=None)

Applies Layer Normalization over a mini-batch of inputs.

This layer implements the operation as described in the paper Layer Normalization:

$$y = \frac{x - \mathrm{E}[x]}{\sqrt{\mathrm{Var}[x] + \epsilon}} * \gamma + \beta$$

The mean and standard-deviation are calculated over the last D dimensions, where D is the number of dimensions of normalized_shape. For example, if normalized_shape is (3, 5) (a 2-dimensional shape), the mean and standard-deviation are computed over the last 2 dimensions of the input (i.e. input.mean((-2, -1))). The scale $\gamma$ and shift $\beta$ are learnable affine transform parameters of shape normalized_shape if elementwise_affine is True. The standard-deviation is calculated via the biased estimator, equivalent to torch.var(input, unbiased=False).
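A minimal sketch of the computation described above, assuming normalized_shape=(3, 5) and an input of shape (4, 3, 5); the sizes and the random seed are illustrative choices, not part of the API. It computes the mean and the biased variance over the last two dimensions by hand (correction=0 is the current spelling of unbiased=False) and compares the result with nn.LayerNorm. A freshly constructed module has $\gamma = 1$ and $\beta = 0$, so the affine step is written out but does not change the values here.

```python
import torch
from torch import nn

torch.manual_seed(0)
x = torch.randn(4, 3, 5)  # illustrative input: 4 samples, each of shape (3, 5)
eps = 1e-5

layer_norm = nn.LayerNorm((3, 5), eps=eps)

# Statistics over the last D = 2 dimensions, kept as size-1 dims for broadcasting.
mean = x.mean(dim=(-2, -1), keepdim=True)
var = x.var(dim=(-2, -1), correction=0, keepdim=True)  # biased estimator

# y = (x - E[x]) / sqrt(Var[x] + eps) * gamma + beta
manual = (x - mean) / torch.sqrt(var + eps) * layer_norm.weight + layer_norm.bias

with torch.no_grad():
    expected = layer_norm(x)

print(torch.allclose(manual, expected, atol=1e-5))  # True
```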

Note

Unlike Batch Normalization and Instance Normalization, which apply a scalar scale and bias to each entire channel/plane with the affine option, Layer Normalization applies a per-element scale and bias with elementwise_affine.
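One way to see the difference in the note above is to compare parameter shapes. In this sketch the sizes C = 5 and H = W = 10 are borrowed from the image example and are otherwise arbitrary; LayerNorm holds one scale per element of normalized_shape, while BatchNorm2d and InstanceNorm2d (with affine=True) hold one scalar per channel.

```python
import torch
from torch import nn

# LayerNorm: one scale/bias entry per element of normalized_shape.
ln = nn.LayerNorm([5, 10, 10])
# BatchNorm2d / InstanceNorm2d with affine=True: one scalar per channel.
bn = nn.BatchNorm2d(5)
inorm = nn.InstanceNorm2d(5, affine=True)

print(tuple(ln.weight.shape))     # (5, 10, 10)
print(tuple(bn.weight.shape))     # (5,)
print(tuple(inorm.weight.shape))  # (5,)
```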

This layer uses statistics computed from input data in both training and evaluation modes.
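A small check of the statement above, assuming an illustrative input of shape (2, 8): LayerNorm keeps no running-statistics buffers (BatchNorm1d, by contrast, keeps three by default), so switching between train() and eval() does not change its output.

```python
import torch
from torch import nn

torch.manual_seed(0)
ln = nn.LayerNorm(8)
x = torch.randn(2, 8)

with torch.no_grad():
    ln.train()
    out_train = ln(x)
    ln.eval()
    out_eval = ln(x)

# No running statistics are stored; both modes normalize x with its own statistics.
print(len(list(ln.buffers())))                  # 0
print(len(list(nn.BatchNorm1d(8).buffers())))   # 3 (running_mean, running_var, num_batches_tracked)
print(torch.equal(out_train, out_eval))         # True
```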

Parameters

- normalized_shape (int or list or torch.Size) – input shape from an expected input of size

  $$[* \times \text{normalized\_shape}[0] \times \text{normalized\_shape}[1] \times \ldots \times \text{normalized\_shape}[-1]]$$

  If a single integer is used, it is treated as a singleton list, and this module will normalize over the last dimension, which is expected to be of that specific size.

- eps (float) – a value added to the denominator for numerical stability. Default: 1e-5

- elementwise_affine (bool) – a boolean value that, when set to True, gives this module learnable per-element affine parameters initialized to ones (for weights) and zeros (for biases). Default: True.

- bias (bool) – if set to False, the layer will not learn an additive bias (only relevant if elementwise_affine is True). Default: True.

Variables

- weight – the learnable weights of the module of shape normalized_shape when elementwise_affine is set to True. The values are initialized to 1.

- bias – the learnable bias of the module of shape normalized_shape when elementwise_affine is set to True. The values are initialized to 0.
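A quick sketch confirming the shapes and initial values of these variables, using an illustrative normalized_shape of [3, 4]:

```python
import torch
from torch import nn

ln = nn.LayerNorm([3, 4])

# weight and bias have shape normalized_shape and start as ones and zeros.
print(ln.weight.shape)                            # torch.Size([3, 4])
print(torch.equal(ln.weight, torch.ones(3, 4)))   # True
print(torch.equal(ln.bias, torch.zeros(3, 4)))    # True

# Both are registered as learnable parameters: 12 + 12 elements.
print(sum(p.numel() for p in ln.parameters()))    # 24
```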

Shape:

- Input: $(N, *)$

- Output: $(N, *)$ (same shape as input)
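The shape contract can be checked with a short sketch; the feature size 16 and the leading shapes are arbitrary illustrations. Any number of leading dimensions is accepted and preserved, while trailing dimensions that do not match normalized_shape raise a RuntimeError.

```python
import torch
from torch import nn

ln = nn.LayerNorm(16)

# Leading dimensions are arbitrary; only the trailing one must equal 16.
for shape in [(4, 16), (2, 3, 16), (2, 3, 4, 16)]:
    x = torch.randn(*shape)
    assert ln(x).shape == x.shape  # output has the same shape as the input

# A trailing size that does not match normalized_shape is rejected.
raised = False
try:
    ln(torch.randn(8, 15))
except RuntimeError:
    raised = True
print(raised)  # True
```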

Examples:

```python
>>> import torch
>>> from torch import nn
>>> # NLP Example
>>> batch, sentence_length, embedding_dim = 20, 5, 10
>>> embedding = torch.randn(batch, sentence_length, embedding_dim)
>>> layer_norm = nn.LayerNorm(embedding_dim)
>>> # Activate module
>>> layer_norm(embedding)
```
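In the NLP example each token's embedding vector is normalized on its own. The sketch below repeats that setup (the seed and tolerances are illustrative assumptions) and checks that, after normalization, every token has mean close to 0 and biased variance close to 1 over the embedding dimension; the variance falls just short of 1 because eps is added to the denominator.

```python
import torch
from torch import nn

torch.manual_seed(0)
batch, sentence_length, embedding_dim = 20, 5, 10
embedding = torch.randn(batch, sentence_length, embedding_dim)
layer_norm = nn.LayerNorm(embedding_dim)

with torch.no_grad():
    out = layer_norm(embedding)

# Statistics per token, taken over the last (embedding) dimension only.
token_mean = out.mean(dim=-1)
token_var = out.var(dim=-1, correction=0)

print(out.shape)  # torch.Size([20, 5, 10])
print(torch.allclose(token_mean, torch.zeros(batch, sentence_length), atol=1e-5))  # True
print(torch.allclose(token_var, torch.ones(batch, sentence_length), atol=1e-3))   # True
```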

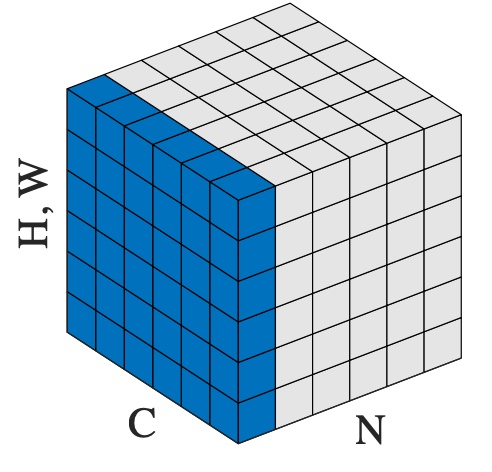

<span>>>> </span><span># Image Example</span>

<span>>>> </span><span>N</span><span>,</span> <span>C</span><span>,</span> <span>H</span><span>,</span> <span>W</span> <span>=</span> <span>20</span><span>,</span> <span>5</span><span>,</span> <span>10</span><span>,</span> <span>10</span>

<span>>>> </span><span>input</span> <span>=</span> <span>torch</span><span>.</span><span>randn</span><span>(</span><span>N</span><span>,</span> <span>C</span><span>,</span> <span>H</span><span>,</span> <span>W</span><span>)</span>

<span>>>> </span><span># Normalize over the last three dimensions (i.e. the channel and spatial dimensions)</span>

<span>>>> </span><span># as shown in the image below</span>

<span>>>> </span><span>layer_norm</span> <span>=</span> <span>nn</span><span>.</span><span>LayerNorm</span><span>([</span><span>C</span><span>,</span> <span>H</span><span>,</span> <span>W</span><span>])</span>

<span>>>> </span><span>output</span> <span>=</span> <span>layer_norm</span><span>(</span><span>input</span><span>)</span>
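The module form and the functional form torch.nn.functional.layer_norm should agree when given the module's normalized_shape, weight, bias, and eps. This sketch repeats the image example (with the input renamed to x and an illustrative seed) and also checks that each sample ends up with mean close to 0 over (C, H, W) taken together, i.e. one set of statistics per sample rather than per channel.

```python
import torch
import torch.nn.functional as F
from torch import nn

torch.manual_seed(0)
N, C, H, W = 20, 5, 10, 10
x = torch.randn(N, C, H, W)
layer_norm = nn.LayerNorm([C, H, W])

with torch.no_grad():
    out_module = layer_norm(x)
    # Functional form: normalized_shape, weight, bias and eps passed explicitly.
    out_functional = F.layer_norm(
        x, [C, H, W], layer_norm.weight, layer_norm.bias, layer_norm.eps
    )

# One mean per sample, computed jointly over the channel and spatial dimensions.
per_sample_mean = out_module.mean(dim=(1, 2, 3))

print(torch.allclose(out_module, out_functional))                   # True
print(torch.allclose(per_sample_mean, torch.zeros(N), atol=1e-5))   # True
```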